Cynthia Breazeal MS '93, ScD '00

INTERVIEWER: Cynthia Breazeal, you're both an alumna of MIT and an associate professor at the MIT Media Lab, where you founded and direct the Personal Robots Group. You've been acknowledged as a pioneer of social robotics. And your book, Designing Sociable Robots, published in 2002 by MIT Press, is considered a seminal work in the field.

Today, among other topics, we'll talk about your research exploring expressive social exchange between humans and humanoid robots. You're also the founder and chief scientist of the company Jibo Incorporated, which is bringing to the mass consumer market the world's very first family robot, Jibo, which, or whom, you've described as the meta of your work at MIT in the field of social robotics. Welcome, Professor Breazeal.

BREAZEAL: Thank you.

INTERVIEWER: We'll start chronologically with your early years. We'd love to know what it was like growing up in New Mexico and California, your family, your inspirations. What did you do for fun?

BREAZEAL: Sure. Yeah, so I was born in New Mexico. My father was a computer scientist at Sandia National Labs. But we moved to Livermore, California when I was three. So he was basically transferred from the Sandia branch in New Mexico to Livermore, California.

And my mother was also a computer scientist working at Lawrence Livermore National Labs. So I grew up in a very technologically and scientifically rich family. I think growing up it's quite different now than it was back then. Now, Livermore, California is considered an extension of Silicon Valley. So it's much bigger, obviously much more technology. When I was growing up, it was a lot of cow pastures. We made a joke that it's a lot of cowboys and physicists.

But again, because of the National Labs there, a very unique environment in that a lot of the parents were scientists, engineers. And so there were a lot of opportunities for me. So growing up, not surprisingly, my parents, back in the day first they'd be bringing home these punch cards. That's how you programmed computers back then.

So I was exposed to the ideas of computers and technology very, very early. They'd take us to science museums and the Exploratorium and things like that. We would always get the earliest computers or gadgets. So I remember going to school being the first student to come in with my essays printed out by a computer. So technology was just part of growing up for me.

And my parents always really encouraged me to think about a career in science and technology. Because they understood there were tremendous opportunities, very interesting work, which of course, was different. Because a lot of my friends weren't necessarily getting that kind of encouragement. So I think I was fortunate in having both parents as really powerful role models in that.

I also spent a lot of time in competitive sports. I was a very competitive athlete in a number of sports. And in fact, every now and then when California does a Title IX celebration, they often invite me to come--

INTERVIEWER: What were your sports?

BREAZEAL: --and give a speech. So I did soccer. I did tennis. I did track. I did some swimming, but really was mostly-- tennis was my big sport in high school. Soccer and track, when I was younger.

But I also credit that experience as being really formative, because-- I guess in two ways. So the first was I believe through being a part of a team you learn about teamwork. You learn about the importance of playing your position to the best you can. You learn about how to work with other people even when things might be going really rough, as well as when things are going really great.

You learn dedication and training, and I think especially for me for track, with wind sprints, you definitely learned to endure the pain and discomfort. Because that's what it takes to really be great at something. So I learned a lot of, I think, tenacity, and persistence, and a sense of never giving up no matter how daunting or challenging something may be. And so I think that was really important.

I think also, in retrospect, because I was an athlete at a high level, I often competed with guys, as well as girls. So I was always comfortable competing with and against guys. Which I think when I went to college and going in engineering, of course, it's a very male dominated area, I always felt very comfortable. And I think that helped me in a lot of ways. And I think the sports helped create a common ground that has really served me not only through undergraduate and graduate, but even in business life now. So sports was really, I think, a really formative experience for me.

INTERVIEWER: Yeah, I get it.

BREAZEAL: So between the sports and between all the technology, I guess it's not that surprising how I ended up where I am. But that definitely got me started on this path. And then I often talk about why social robots.

I saw Star Wars when I was 10. And I just fell in love with R2-D2 and C-3PO. It became like my theme of that year in school. Everything was about those droids for me.

INTERVIEWER: Although I gather you wrote an essay. There's something when you were in third grade.

BREAZEAL: In third grade. Yes, no it wasn't enduring. Robots that were much more like helpful companions than autonomous task-drudging automatons or tools. Robots that would relate to us in this interpersonal way, robots that were, again, this aspect of relatability, this human element that could connect to us beyond the utility, but the emotional side always fascinated me and always captured not only my mind, but my heart.

INTERVIEWER: Does it fill a void somehow? Why would you think of a robot doing that as opposed to a friend?

BREAZEAL: Oh, I had lots of friends. No, I think because I loved animals. I loved animation. I loved characters and stories. And I think for me robots just fit into that. It wasn't about a hole at all. It was more about this whole new way of experiencing connection. And I think we as human beings are driven as part of our nature to want to connect, not only to other people, but to other things, companion animals, to nature. And so for me, robots were just a really other dimension for that, that was really compelling.

I think there's something very special about connecting to the not quite human other. And I think that really captured my imagination. So as I went through school, it wasn't as if I'm like I'm going to build robots for the rest of my life. It was-- I did sports. I wanted to be a doctor.

There's a time in college I wanted to be an astronaut. And so I thought in order to be an astronaut I need to get a doctorate in a related field. And so I chose space robotics. And I did my undergraduate at UC Santa Barbara. I was majoring in electrical and computer engineering.

And at the time, there was an NSF Center of Excellence in Robotics. It was a very fortuitous time for me. So I was starting to learn about robotics in a big way as an undergraduate.

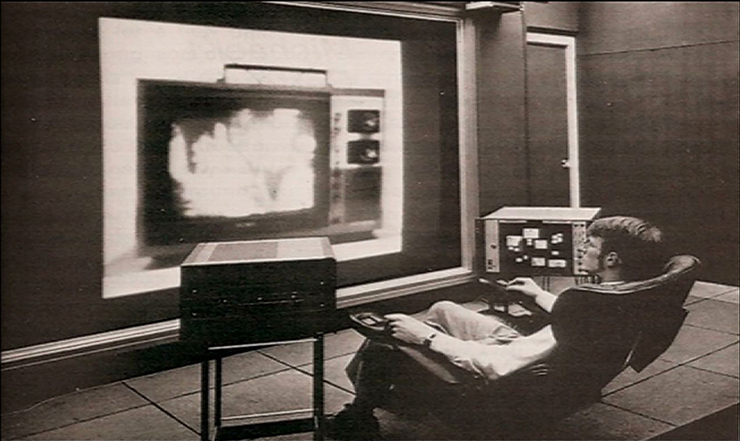

And as I was applying to graduate schools, I of course applied to MIT. And it turned out there was a brand new project that Professor Rod Brooks was starting around planetary microrovers. And at that time-- this is in the early '90s. Rod of course, is a pioneer founder of autonomous robots. But all the robots when I first came to the lab were very much inspired by insect models of intelligence.

So they were-- I remember the day I came in to lab, and I was one of the admitted graduate students. You come, and you look at the lab. And you meet the students, and you talk to the professors. And I remember this moment coming into Rod's lab and seeing these little insect-like robots going around doing their various things. And I remember it's like that Star Wars moment just rushed over me.

I remember thinking if we are ever going to see robots like that in our lifetime, it's going to start in a lab like this. In fact, it may start in this very lab. And so I'm like, I have to be here.

And that was really the moment I have to say that sparked that Star Wars dream could actually happen in a place like that. So it was this latent thing. But it really-- it was that moment like, oh my God. You could actually try to do that.

INTERVIEWER: You were in the right place.

BREAZEAL: I was in the right place. So obviously that's why I came to MIT.

INTERVIEWER: What was it like? What was the atmosphere there besides the robots? What were the people like?

BREAZEAL: Yeah, I felt like I was finally among my people. So I did my undergraduate at UC Santa Barbara. It's a gorgeous campus. It's on the beach. It's amazing in so many ways.

But the intellectual vibrancy, and curiosity, and just that willingness to work yourself into a stupor about going after something that fascinated you just was not part of that culture. But it was very much a part of MIT culture. And I really felt finally I'm in a place where I can be like this. And it's like people are psyched. And they're doing it with you. And so it was really-- it almost felt like a homecoming, I think, coming to MIT.

So I came in, and our group, which was then called the Mobot Lab, really had a fabulous culture. And there was--

INTERVIEWER: Did you say mobot?

BREAZEAL: Mobot for mobile robot lab. Because at the time it was basically about autonomous robots. But a lot of them were mobile robots. And there was a woman, Anita Flynn, who was a research scientist at the time. She also became a PhD student.

But she really was the person who helped set a really amazing culture in that particular group. So she set policy. She's like everyone digs in and helps each other. If there's a big push to get something done, we're a family. Everybody helps everyone. Or she'd arrange these fun events.

She was the instigator of the AI Olympics, and she got the whole lab involved in doing these silly things, silly competitive events, from athletic to chair races down the hall to a hacking competition. But she spearheaded all that, became a big part of culture in the AI Lab. But she was just that kind of person. And coming into that lab with Rod, of course, who was so famous-- but then also Anita, who was always there, and was really creating this amazing culture among the group. Really made it a very special time and a very special place to be. And it was also interesting that at the time I joined the lab, I think it was 50% women. So Rod was always a huge supporter of women in AI and computer science.

And coming into that environment with Anita in such an important role, also extremely technically competent. You could always go to Anita to ask her questions. But the students doing that with each other, it's a culture that I've definitely tried to replicate and foster in every other organization I've done since then. So with that sense of family, that sense of play, that sense of passion and love for what you're doing.

Never squashing each other's ideas. Being able to give thoughtful critique, but in a supportive way. It was amazing. It was amazing.

INTERVIEWER: That sounds pretty fabulous. Were there a lot of interdisciplinary-- were people coming in from different areas? How did you, as a group, or you personally get into cognitive science? All the other things one learns. And besides electrical engineering, computer science, what were you studying? What did people bring to it?

BREAZEAL: It was an interesting path. So I can tell you the day I really started thinking seriously about social robots-- and I didn't know it would be called that at the time-- but it was the day that NASA landed Sojourner on Mars. And I was a graduate student at the time. Of course, Rod was one of the people who really was an early proponent of the idea of small planetary microrovers for planetary exploration.

He wrote this famous paper, "Fast, Cheap and Out of Control: A Robot Invasion of the Solar System." So I was fortunate to be in the lab in a time when it was really pioneering that whole idea. And of course the culmination of that, in so many ways, was NASA landing this small rover on Mars. And I remember thinking huge success for, not only NASA, but for all of robotics, and thinking OK, now we send robots into the oceans, and we send them into volcanoes, and we even send them to Mars, but they're not in our homes yet. Why is that?

And I remember thinking, when you think about the complexity of the human environment, especially in the home, it is so radically different than the context and environments we had thought about when you think about exploring someplace far from people. So in the home you have people, and people's behavior is not governed by laws of physics. It's governed by-- people have minds. It is the minds that give rise to all of these behaviors.

INTERVIEWER: Personalities.

BREAZEAL: And beliefs, and thoughts, and emotions, and no one was really seriously tackling that question of, how do you design a robot that could interact with anyone, regardless of education, or life experience, or age, or whatever, and have that be successful interaction that people can get value out of? So in many ways I liken it to that moment when computers were these expensive things that only experts use. And then finally someone thought, what would it mean to actually have anyone be able to use a computer? So it was that moment for me. And we already knew that people tended to anthropomorphize these autonomous robots because it just triggers these very low-level cues of animacy.

And so we thought, well so maybe the social interface is kind of the universal interface. That if people are already inclined to try to understand robots in these terms, we should design them to support that mental model. And I knew that in order to dig into that problem, you couldn't start by trying to essentially simulate an adult. That's too complicated. People have amazing social cognitive capacities, amazing emotional richness.

Charles Darwin said human beings are the most social, and the most emotional of all species. So I thought, well so what is the simplest creature, in some sense, that you could try to model that could still get into this dynamic with a person, with an adult. Understanding that through that interaction from those experiences the robot could learn and could develop-- almost much like how we socially develop-- could be pulled along this developmental trajectory, become more sophisticated, until eventually someday you could reach the adult state.

So that was always the underlying motivation of a lot of that early work. Very inspired by developmental psychology. And I had no background in developmental psychology. So I just started reading as much as I could, attending classes.

It was really a crash course. And not just for human development, but even for other species, other animals. Looking at different theories and models. And it was a very fortuitous time as well because the field in psychology of understanding emotion was kind of going through this renaissance. So there were more theories, from the behavioral down to neuroscience, on emotion and interaction.

So I was able to pull from all these different disciplines. And it was fascinating because it was almost a process of taking a theory from here, a theory from there. Some of it combining stuff from comparative psychology to animal behavior, some of it coming from developmental psychology to create this artificial creature that became Kismet.

INTERVIEWER: I was going to say, how do you start? There's so much that you're talking about tackling. Where did you actually start?

BREAZEAL: I started basically getting that inspiration from what am I trying to model? What's kind of that simplest thing I can start with? Because nobody had done anything like this before. The simplest thing that I feel would allow me to explore that phenomenon I'm trying to get at. And the phenomenon was this interpersonal interaction between people and this non-human thing, but where those interactions were rich enough and of a sort that the robot could actually benefit from them, in the sense of being able to learn from those interactions.

And what are those core initial behaviors that you would have to design that system with? And so a lot of that was inspired by developmental psychology. What are the earliest behaviors that get the infant and the mother or father in that dynamic? And then starting to abstract, in some sense, what is the right level of abstraction for that model? So it was very much combining and weaving the science in with the technical and the engineering.

But it was also a real fascinating design process. Because as you interacted-- and I knew as you would interact with this robot-- you have to want to interact with it. You would have to want to invest your time. You would have to want to, in some sense, nurture it. It's this process of wanting to teach and play the same way that we would with children. So how the robot appeared was also really important.

INTERVIEWER: To make it engaging.

BREAZEAL: To make it really engaging and to pull on-- [INAUDIBLE] talks about Kindchenschema. So this baby scheme that you see in dolls and toys and Disney characters. But the design of the robot would have to naturally put the person in this mode of, oh, it's a young creature. It's adorable. I want to play and teach.

It's just pulling on what's already, I think, innate within us. And so the design of Kismet was also very inspired, both by animation, as well as, again, the developmental psychology area. So there was a reason why Kismet had these big eyes.

INTERVIEWER: Stephen Jay Gould has written about that. Something about the big eyes.

BREAZEAL: And the proportion of the head to the body. So all of those things were designed to really get people naturally into the right mode of interaction that the robot could then modulate and dynamically interact to make this dance happen. One of the paradigms that was so fascinating to me, reading a lot of the literature was talking about mother-infant or parent-infant interaction was a dance. It's this constant adjustment simultaneously. This kind of attuned reciprocity.

Which is so unlike when we think about how we interact with Siri or other systems. Like you say something, and it responds. It's like moves of chess. They're discrete. Whereas our interpersonal interaction, it is this dance.

It's this rhythm. It's these micro adjustments. That's what I wanted to capture because in some sense, that serves as the vehicle for a lot of other stuff. It sets the rapport. And so a lot of the early work with Kismet was really trying to understand that.

And so, not only the social cues, but the emotive cues and the motivational systems. And not just kind of the decision making, but these underlying drives and motives that would shape and bias all the other behaviors the robot would engage in to try to sustain, in some sense, this communicative dance with you. And through that dance if it wanted to try to get interactions or experience of a certain kind, it would, in some sense, nudge the person to get them to engage in those behaviors with it through these emotive social cues. Much like how babies are so good at having us do that. So it was that game for Kismet. So it was exploring--

INTERVIEWER: Kismet was the first?

BREAZEAL: It was the first. It's recognized as the first social robot. And it was really the first to explore this human robot dynamic interplay on the social, emotional, physical, expressive dimension. And again, all of that was in place because it became the substrate for learning and development. That was the whole point.

INTERVIEWER: Now you had an evolution of various-- there was Kismet and there were others. And were you trying to answer specific questions with each type? With each robot?

BREAZEAL: Absolutely. I think every project, not surprisingly, inspires a whole host of follow-on questions and ideas. And one of the really truly amazing things about being at a place like MIT, is that you can then build the next robot-- in some sense it becomes your experimental apparatus, to explore the next question. Whereas other colleagues of mine tend to buy robots because they can't build them, it almost becomes not the greatest match between the scientific question they're trying to answer and what they can actually access. Whereas at MIT, you can design the actual robot that you think is going to be best suited to get at the underlying science that you're trying to do.

So I think that's been a real asset of being able to do that work here. So we would design a different robot in many cases to explore a different sort of question, about either human robot interaction, or understanding something about social intelligence about the machine, or emotional intelligence about the machine, or some kind of social learning. Learning by imitation, learning by social referencing, learning by tutelage. All these different questions and models around really thinking about human robot interaction as this tightly coupled partnership that robots of the future were going to interact with us, not as tools that were teleoperated, but as partners, autonomous partners, that would be able to understand us, be able to learn from us, be able to communicate in ways that are natural for us, that treat us like human beings, as opposed to other technologies or rocks to be navigated around.

So it was all about this intimate partnership that really was informed deeply by human behavior, human psychology, our minds, the way we experience things. And trying to come up with the best match where it was never about trying to build a robot that was identical to people. It was a very different tack of saying the thing that is so fascinating, and the value proposition of this work is that robots are not human. Because of that they have different capabilities that can really complement ours.

So we can work as a team that can up the capability of the team. But you need to get that engagement right. You need to get that interface right.

INTERVIEWER: So the design was another thing you played with.

BREAZEAL: It's always been at the intersection of design, of psychology, of engineering. I'd even say of art, and expression and animation. It's always sat at that critical intersection. Because you're fundamentally dealing with people. And people have all of these dimensions.

And I think one of the big epiphanies of the work over time has been, as we've created robots to explore different context domains, like robotic health coaches, or learning companions for children, or thinking about social robots as a new kind of telecommunications medium beyond Skype or something like that. Where you have a physical embodiment in place, or elder care. All these domains. What we're coming to realize is the better a technology can really support richly human experience, not just around the cognitive, which is where a lot of technology is focused today, but the cognitive, the social, the emotional, and the physical.

It deepens our engagement. We're able to bring more of ourselves into that interaction and experience. And as a result, we as people, are more successful. So with the physical, social weight robot health coach, people were more successful with the robot than they were with a computer that had the same dialogue model, gave the same advice.

In some sense, the quality of the information was identical, but that relational other dimension of support was missing. And so people had greater engagement. They had an emotional bond with the robot that they didn't form with the computer, which led to, again, this sense of-- this robot is really-- the computer-- I do my taxes on the computer, I do email, I do all these things on the computer. But this robot is here to help me with something that's really important.

INTERVIEWER: What did that one look like?

BREAZEAL: It was a robot that combined a flat screen on a body. It had a head. It was very simple, but it did a couple really critical social cues. So it was able to make eye contact with the person. It was able to share attention on the screen. So it would look at the person or it would look at the screen.

But it was like an appliance, in some sense, in the home. As you can imagine, you'd put it on the counter, it'd have a persistent presence. It could do face detection, so it knew you were there. It would talk to you. So it gave you that social engagement, and that persistent presence, versus a computer that, when it's off, it's off.

So I think that sense of persistent presence was also a fascinating different element that the computer didn't offer. But the bottom line is when you talk about something like weight management, the question isn't your ability to lose weight, because people lose weight using all kinds of crazy diets. It's really about the long term engagement and keeping it off. And we know from the human social psychological literature, this social support is known to be very effective for people.

And so we basically are applying that to say let's create a technology that's capable of also providing social support. And then we compared that form of social support with just a screen versus the robot. And again, the social presence of the robot seemed to make just a quantitative and qualitative difference in people's experience with the system.

INTERVIEWER: And the art and animatronics part of what you've been doing also, how much were the choices about being cute? How important was that in engaging people? And did you find that that was an important ingredient? The big eyes, all the way through the various robots you did? Or was there something childlike that really works? What were the design elements that you played with?

BREAZEAL: Again, the design of the robot is always informed by the context. What's the scientific question you're trying to understand? What is the nature of the relationship even, between the person and the robot you're trying to facilitate? So the way that you would design a learning companion robot for a preschool aged child, not surprisingly looks different than maybe one that you would design for an adult who's trying to lose weight.

So maybe then it's more streamlined. It looks more like a high-end consumer electronic, whereas for a young preschool child, it may be more toy-like, more fanciful. It always has to be appealing. So the design has to always feel good for a person.

Otherwise they won't engage with it. So the aesthetics always matter. But again, depending on the question and the population, you have to consider all these things carefully.

INTERVIEWER: And then what you've talked about with the weight loss robot, it sounds like the one that you're working on, is it Jibo? Jibo Incorporated. Could you describe that? Because that's something you've left MIT to launch. And I'd love to know how that's working. And what it is.

BREAZEAL: I think a lot of the insights that I've been able to gain from the research at MIT over the years, and exploring these different kinds of social robots in a multitude of different contexts-- when we think about robots today, largely, and I mean kind of the general mindset, is that the value proposition of a robot is physical labor. It can do physical work of some sort. So whether that's manipulating something. Or whether that's navigating from point A to point B. What can you do with that physical affordance?

And so we see many robots today that are really based around the task they perform around that physical capability. And social robotics is really taking a very different mindset and saying, well actually, the core value enabler is engagement. The ability to engage people in a much richer, deeper way. And so social robots, as we've designed these, again, robots with these different contexts, I started to think of robots as a new medium. A new medium for content.

And that became this kind of epiphany because right now when you think about robots, they're kind of limited around a certain set of tasks they can do. Because it is around this physical competence. So you have high-end robots that are for manufacturing, or surgery, or things like that. You have robots in the home that are very niche.

Vacuum cleaning robots, pool cleaning robots, and things like that. Maybe pure toy, entertainment robots. But there's not a robot yet that can do many things for you. But once you start thinking about a social robot as a platform for content, now suddenly you have a robot that can do many, many, many different things for you. Because of its ability to give you deeper engagement.

So it's like content on screens that now is liberated to come alive in this whole new way that's much more holistically supporting all of our attributes. So again, the physical, the social, the emotional, the cognitive as opposed to just a screen that displays information. It's a social presence. So Jibo, in many ways is the meta. It's a platform.

We think about it as a platform for content. Much like how we think about tablets and iPhones today, it's a platform for all kinds of content where you're leveraging the engagement and personalization and the relational attributes of this medium to be able to make that content and those services that much more engaging for you, and therefore helping you be that much more successful with that content. And so Jibo is about really anything that any developer anywhere would want to create for that robot. So we call them skills versus apps. But we envision Jibo helping people in all kinds of ways.

People of all different ages, from kids around playful learning, or people wanting to age in place in their homes, or family caregivers trying to help manage their remote family care-giving tasks. Or even just getting content like entertainment, sports, weather but in this whole new enlivened way, where the robot, because it is in your home and can interact with you over time, can learn about you and personalize itself for you to give this highly personalized experience of the information and content that you're able to get.

INTERVIEWER: So for example, aging in place. Just give me some specifics. How would we be interacting with Jibo?

BREAZEAL: We did a lot of research interviewing both caregivers and people who are aging in place, as well as institutions who serve those communities. And there it's a fascinating intersection of help me be independent-- I want to do things myself for as long as possible, but I wouldn't mind a little bit of help and support. So whether it's just make it easier for me to get groceries, get a car, keep connected to the world around me, help me stay connected to my children and my grandchildren in a really fun engaging way. So addressing the social as well as the more logistical sorts of things.

For the family caregivers it was also help me make that easier, more frictionless-- help me be able to-- through telepresence be able to engage with my aging mom or dad, to be able to make sure they're OK. But also just to have them be able to engage with my family, with my kids. So a lot of it is around helpful support, but also reinforcing the family unit, the family bond, and the family connection. And this is a really important point to make, because there's a lot of concern or pushback assuming that whenever you talk about a robot, you're trying to replace someone. You're trying to take a person out and put a robot in.

And I think the more enlightened view of robots today-- and I think it really started with social robots-- is it's about teamwork. It's about supporting the human network. It's not about replacing anyone. It's about the fact that it adds this other element that helps the whole team be more effective.

So whether that's effective in a task sense, or whether that's more effective in a social sense, it's really about creating a technology that supports what people already want and need to do. So we see robots as basically being again, this sort of helpful companion. For the first time bringing together this helpfulness with this companionship, which we're finding is really important to people. They don't necessarily want cold sterile technologies in the home.

Home is special. Home is where the heart is. Home is about family. Family's the most important thing in your life.

You want a technology that contributes to that feeling of home. You want a warm feeling technology. So that's really what's so different about Jibo.

INTERVIEWER: Sounds fabulous. Actually in general, all the things you've talked about, you talked about the robot learning too. And just because I'm just naively-- How do robots learn? Just tell me about that. Because I can understand how they're giving to us, but how does that process work?

BREAZEAL: I guess very much inspired and informed by how people learn. Robots learn in a lot of different ways too. There's learning from examples, where the robot may be told, this is an instance of something correct, here's an instance of something incorrect. They get enough of these instances, and they can start forming a model for like what's the idea or the concept of the task that I'm trying to learn. Based on being able to recognize things that belong to the correct category, versus the things that aren't part of that category.
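
[Editorial sketch, not part of the spoken interview: the "learning from examples" idea described here can be illustrated with a tiny nearest-centroid classifier. This is only one simple way to form a model from labeled instances, not the method used in any particular robot. The function names, the two-number feature vectors, and the "correct"/"incorrect" labels are all hypothetical.]

```python
def fit_centroids(examples):
    """Average the feature vectors seen for each label.

    `examples` is a list of (feature_vector, label) pairs, i.e. the
    labeled "instances" the learner is shown.
    """
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, x in enumerate(features):
            acc[i] += x
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Return the label whose average example is closest to `features`."""
    def sq_dist(center):
        return sum((a - b) ** 2 for a, b in zip(features, center))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))


# Hypothetical labeled instances: two made-up measurements per example.
labeled = [
    ([1.0, 1.0], "correct"),
    ([1.2, 0.8], "correct"),
    ([4.0, 4.2], "incorrect"),
    ([3.8, 4.0], "incorrect"),
]
model = fit_centroids(labeled)
guess = predict(model, [0.9, 1.1])  # a new, unlabeled instance
```

[With enough labeled instances, the averaged examples stand in for "the concept," and a new instance is assigned to whichever category it most resembles.]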

So things like classifiers for recognizing objects fall into the supervised learning paradigm, for instance. You can learn through reinforcement learning, through feedback. So I might want to learn what we call a policy. So given that I'm in a certain context, what's the best action? What's the best thing I might choose to do at this point to help get me the best possible way to the goal I want?

So you're learning kind of this routine, so to speak, of the steps I need to take, and each step of the way, no matter where I might end up, in order that I can successfully achieve my goal. And so the robot may be getting positive, negative feedback as it goes along and explores, and it's trying things out. And then learns that that was bad, and this was good. And maybe I didn't find anything out here, but later on I discovered something. So I need to figure out how that good thing that happened, or that bad thing can be credited back to the actions that I took. So it's learning again, this little routine, based on positive, negative feedback.
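
[Editorial sketch, not part of the spoken interview: the idea of crediting a later reward back to earlier actions can be illustrated with tabular Q-learning, one standard reinforcement learning algorithm. The interview does not say which algorithm any robot uses. The corridor environment, the function names, and all parameter values below are hypothetical.]

```python
import random


def train_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                   epsilon=0.2, seed=0):
    """Tabular Q-learning in a toy corridor.

    The learner starts at the left end (state 0) and is rewarded only on
    reaching the right end. q[state][0] scores "step left" and
    q[state][1] scores "step right".
    """
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        while state != goal:
            # Explore occasionally; otherwise take the action that looks best.
            if rng.random() < epsilon:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            nxt = min(max(state + moves[action], 0), goal)
            reward = 1.0 if nxt == goal else 0.0
            # Credit assignment: the value of the next state is discounted
            # and passed back to the action that led there.
            target = reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
    return q


def greedy_policy(q):
    """Read the learned routine off the table: best action in each state."""
    return ["left" if left > right else "right" for left, right in q]


q_table = train_corridor()
policy = greedy_policy(q_table)
```

[Here only the final step is ever rewarded, yet the learned scores for "step right" become positive all the way back to the starting state, which is the "credited back to the actions that I took" effect described above.]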

And then the third kind is called unsupervised. So that's kind of the area that's maybe the least developed compared to supervised and reinforcement learning. But that's when the system has a mechanism by which it's getting a lot of data, and is trying to find structure through the data on its own. So that may be something along using patterns of activity of flow over time of a family, in terms of when are the sweet spots for engagement and activity? So they tend to wake up at this time of day. They're quiet at this time, so the robot shouldn't be making noise at that time of the day.

To kind of fit into the flow of the natural family life. That might be more of an unsupervised learning problem. But again, these are analogs to how we learn. And then we also learn through things like interactional things, like tutelage or training. So you can take these underlying algorithms and you can change how the robot gets that experience.

Whether it's through its own exploration, or whether there's someone explicitly teaching it. What's the nature of that interaction? Is it just positive, negative? Or is it actually much more rich, like the way you might teach someone else? Might there be emotive cues that give that robot a sense of, am I doing the right thing or the wrong thing?

Are they giving me informational cues that really help me build the knowledge base? So you can imagine these learning systems can get much more rich as time goes on. But some of it can be through just straight interaction. The robot may just ask you a question. And that might be a way that it can learn that piece of knowledge. So many, many, many different kinds and ways that a robot can learn and get to know people, get to know about how to adapt and fit into that family better over time.

INTERVIEWER: And then about the science of learning that you are exploring through your robots, can you talk about your recent research, or research in general about working with children and children's learning.

BREAZEAL: When I first started doing the work on social robotics, a lot of the emphasis was on how do you design robots that can learn from people? And really to learn from anyone, not just an expert in machine learning who understands the machinations of the underlying algorithm, but truly anyone. And that's when we looked at all these forms of social learning, like learning from imitation and demonstration. As time went on, basically I think the big moment for me was I became a mom. And I would see my kids grow up.

And again, so much of my work was already so heavily inspired and informed by developmental psychology, but seeing my own kids growing and learning just kind of added a whole other layer of reinterpretation on everything I was doing. And I was becoming much more aware. I mean, I was already aware of it before, but just much more cognizant of the importance and criticality of early childhood learning. And that if children start kindergarten not ready to learn, they're compromised in their success in school for potentially a very long time. It's very hard for them to catch up.

And there was a lot of innovation happening with older kids or adults, but not a lot of really groundbreaking innovation happening at this really important critical preschool, kindergarten age. And so my kids were right around that age too. They were three, four years old. And I started developing a whole host of technologies where now the robots facilitated children's development and learning. So from people teaching robots, it was really about robots helping children to learn and grow.

And we explored a whole range of topics from kindergarten readiness, second language learning, oral language development, those critical readiness skills. We looked at ways of thinking about-- my kids were playing video games and it was so frustrating for me because they're sitting there, and they're pushing buttons and stuff. And I'm like how can we make digital play feel more like playground play? So we started mixing virtual reality, augmented reality environments with projection to physical spaces with robot characters that could literally go "into" the screen into a virtual world and out, to make kids run around and play and move like a playground, but in this world. They're in the virtual world now. Where the virtual world was physical around them because it was all digital projection. To looking at--

INTERVIEWER: No more flat screen.

BREAZEAL: No more flat screen. It's like it's all around you. And then thinking about well, so computational thinking. So can children learn about these concepts at a much earlier age by "programming" these robots, but through teaching them through rules.

So we literally had these vinyl stickers, mediums that children are already familiar with at this preschool age, but being able to almost socially "teach a robot" by literally putting these stickers, that were a syntax, on a vinyl sticker board, and showing it to the robot. The robot would look at the "rule," and then learn it. And then it would do that behavior.

So exposing children to the idea of rules and sequencing, and early concepts of computational thinking. Kids loved it. They just love it, because it's in the context again, of social interaction. They would almost teach the robot how to interact with them.

And so much of preschool is about socialization. So it's a topic and it's a context that's very developmentally appropriate for them. And they just love interacting with a social robot. So we looked at all of these things.

We've been looking at children in the context of pediatrics. Around coping with emotion and stress through social robots. So having a pediatric companion robot that would interact with a child to help alleviate the sense of stress. We know that's so key to, not only a positive experience in hospitals, but to healing and recovery. Even potentially compliance.

So all of these areas we've been really thinking about the context of social robots and children. And I think the sweet spot there was just because social robots are physically present, it's very different than these individual devices of these screens where we all see it. Even my kids, they pull out their tablet, their nose goes in it and they shut out everything around them.

With a social robot, suddenly all that information is co-present in an interpersonal group dynamic way. So we saw parents could play with their child with a social robot, where it was a group dynamic. Where the robot was almost facilitating the ability for a parent to be able to guide, praise, scaffold basically their own child's learning. It was like supporting that social dynamic. So for me, it's a very exciting medium for children because it brings the social world together with the digital information. That's much more in line with what children do and need as they're learning.

So being physically present, being socially present with others around them, but facilitated by the flexibility of digital media and the ability to capture data about what they're doing to be able to form models about understanding what they're learning, where their areas of strength are, where you may want to guide them next to have these personalized learning experiences. So a lot of the themes that we've been exploring is technology has the opportunity to bring the power of that one on one personalized interaction to any child to kind of optimize their learning trajectory. And to do that again, in the social context that's so critical.

And that's very different again, from this lecturing paradigm that we're all familiar with. It's like through AI we can bring that personal experience of personal tutoring, which we know is much more effective than just broad lecturing, if you can do it. AI can bring that to any child. So looking at the personalization aspect of it-- and then interestingly what we've also been discovering is because these robots are modeled not as tutors, but as learning companions, they're actually modeling a peer to peer interaction, you see these really intriguing dynamics where children learn a lot of things from each other.

So there's the content. There's the curriculum, like vocabulary. But there's also things like learning attitudes. So we did a study where we created a curious robot. So a robot that, as a learning companion, as a peer, interacting with a child, would exhibit pro-curious behavior.

If something was wrong it would say things like, oh, that's okay. That's how I learn. Or, oh, that was cool! I love discovering that. So communicating these positive attitudes about learning.

And we had a control condition where a robot would do the task and say positive things, but it wouldn't be these pro-curiosity behaviors. And lo and behold, what we found is that when children interacted-- and we did a randomized controlled trial-- children who got the curious robot themselves exhibited more pro-curious behaviors than those who didn't. So again, modeling the robot.

And we've seen this even through storytelling. As a robot engages the child in a storytelling task, first the robot may tell a story, and then it invites the child to tell a story. And you could imagine over time, you could start to customize the stories a robot tells to the child, and vice versa, to optimize their vocabulary learning.

What we were also starting to see is children were starting to model the robot stories. So it's like just because of this peer-to-peer dynamic, that's almost with this sort of like furry robot companion that is kind of like your dog, but got much smarter in some sense. It sets up this really fun, not afraid of being embarrassed, not afraid of losing face by making mistakes in front of this other creature, but being able to explore new things together where they actually learn again, not just the curricular aspects, but these other attitudinal and these other kind of behavioral things.

So it's really fascinating to think about how to again, design these systems to really support children's learning and development. We've been working with a number of schools now in the Boston area around storytelling, around vocabulary, around second language learning. Tremendous interest by the teachers in doing so. So that's been work that's been extremely rewarding. And again, I see these robots adding a really fascinating important new dimension that you don't see when it's just a flat screen.

INTERVIEWER: And I want to explore that more, but I'm just curious just to flip it back a second, and how this work-- you said the big epiphany was when you became a parent. How has your parenting been affected by all the things you've been thinking about all these years? Did you see the children in ways that you-- well, it's hard to know what you would've otherwise-- but your experience with them as infants? And did you have things you wanted to make sure to do with them because of all the things that you'd been doing at that--

BREAZEAL: I mean certainly, again, even back when I was developing Kismet, I had read so much developmental psychology, I think I was much more informed than I would have been otherwise as I became a mom in terms of how children learn, and how they develop, and how critical these experiences are. It's kind of a funny story, but kind of a side thing that I've definitely learned is for kids, it's actually good when they're bored. I worry about these digital devices that become almost this very low cognitive way of addressing the boredom issue. If you've done any of these little twitch games, where it's not a richly engaging, kind of high impact activity. But it scratches that boredom itch.

It's good for kids to be bored because when they're sufficiently bored they will actually invest in doing something more meaningful like going outside and playing. And drawing something, or just doing something that requires them to invest more of themselves in it. But you got to wait for that boredom threshold to get to a point before they'll motivate themselves. So I think that fostering that drive of curiosity, that drive of empowerment, in terms of boredom is actually not a bad thing, but it's actually a good thing. Because it means that I'm going to be motivated now to go do something about it.

And one of my biggest worries about again, digital media is it's almost like too much of a mindless scratch to that boredom itch, rather than allowing it to build to a point where you'll actually engage yourself in a more meaningful, richer activity. So I want to create technologies that really get to that richer engagement, that richer interaction that challenges at the right level. I think striking that balance is really critical.

INTERVIEWER: And dealing with children versus dealing with your robots and adults with your robots. Do you find there are differences in terms of how these interactions happen?

BREAZEAL: Oh yeah. I would say although these social robots interact with us in ways that are definitely-- they have similarities, and these have analogs to the way that we engage with each other. But they're robots. And again, for me, my whole philosophy has always been the magic and the real value proposition again, of robots is that they're not human. They're different from us. And I think that makes them intriguing for us, and it makes them able to complement us.

And we have friends, and family, and people. We don't need to be trying to replicate exactly what we can already get from our human networks. So they interact with us in a way that's familiar and natural, but not exactly human. And that's on purpose.

I know there's a lot of dialogue out there about are people getting too much exposure to interacting with things that aren't human, that they're somehow being negatively impacted by that? And I think there's not enough evidence to say that's happening, but it's an important point to be raised. Because we as human beings, we need a breadth and diversity of experience. Of course we do. Children certainly, of course they do.

And I think technologies can have a really powerful enabling opportunity for children. But not at the expense of other critical activities they also absolutely must be engaging in like going outside and playing or being with friends. They absolutely need that balanced diversity of interaction.

Within that context of understanding it's critical to establish that balanced diversity, for the portion of the technology interactions, I think there's a lot of potential. A lot of room to grow to make that better and better and better and better for kids. And it's a very exciting time, because as we're able to learn about how kids learn, and learn how they learn with these kinds of technologies that are actually different than how they learn from each other, or from teachers, or from other technologies, but are also effective, I think is really ripe and really fascinating.

INTERVIEWER: So we're going to talk about funding for a second. When you started Kismet, I guess you had research funding by ONR, Office of Naval Research, DARPA, Defense Advanced Research Projects Agency, and Nippon Telegraph and Telephone Corporation. And now with Jibo, you're doing crowdsourcing. Is it Indiegogo that you're using?

BREAZEAL: We did use Indiegogo, yeah.

INTERVIEWER: And so how is that all different? In other words, did the sponsors affect what you were doing before? And is it different now that it's a general public funding source? Or has that impacted anything, would you say?

BREAZEAL: Of course, funding in the commercial world is quite different than funding in the research world. So I think I've been very fortunate in that I've been able to really choose the kind of research that I want to do, and then can find the right funder who's also excited about that research. I have not felt that the nature of my own research was overly-influenced on a path other than what I wanted, which is fantastic. Because I think for anyone to do their best work, they have to be passionate about it. So it's really important to find that match.

Writing grants, doing the academic side of fundraising, it's a very different process. And of course, for instance with the National Science Foundation, it's peer reviewed. It's just a very different process. It's on a much different time scale.

When you go into the commercial world-- there's a number of ways that you can of course, fund work in the commercial world from angel investors, to crowd funding, to venture capital, and the like. And each of it is a different kind of funding that's appropriate at a different kind of stage. So crowd funding is filling this sort of very early stage funding-- I'm talking more about the hardware space right now-- but where you can see if there's a market demand for your product before you actually have to go through all the investment and money and time to build a product and launch it into market, at which point, it's almost too late.

So it's been a really critical market validation piece, which is really powerful. It also allows you to start to build your earliest community and learn about who is excited about what you're doing. And really learning about where the sweet spot of that is. And in the case of Jibo particularly, we had a very big PR media push associated with it.

It allowed other companies even, who felt that Jibo was something that they would be very interested in helping to also partner with to bring to the world. So it became a door opener putting us on the radar of a lot of corporations that could help accelerate Jibo's path to the marketplace. So it was very beneficial in a lot of ways. But I would say the crowd sourcing is definitely for this very early stage funding.

To get venture funding, it's got to be a market and a business opportunity that's sufficiently large to merit that. And the time scales are much shorter, I would say, in terms of what you need to be able to deliver on a time scale than in the academic research community. So the pace is much faster. And it's incredibly exciting. It's incredibly exciting.

But I feel that it's a very different enterprise so to speak. A very different endeavor than what goes on in academia. And I'm learning a lot. And I'm feeling that I'm growing in ways. So I wanted to do Jibo for a number of reasons.

One was obviously to bring the work to the world where it can do profound good. It's time-- the technology landscape, the consumer landscape. It was just time to be able to bring a social robot to the world. And for me, it's almost poetic that Jibo was launching around the same time that Star Wars is coming out with their next movie, which is actually a sequel to the 1977 movie.

You got through all that cycle and now it's actually real. It's not just in movies. Like bam! There's Jibo. So that's very rewarding for me to have that journey from 1977 to 2016. So it's amazing.

INTERVIEWER: You've done a lot of work in public understanding and with Hollywood. How did it feel to be a scientific spokesperson with Steven Spielberg when he sought you out for the AI film?

BREAZEAL: It was very exciting. So I believe Kathleen Kennedy was the one who saw an article in Time Magazine. That was when I was just finishing up my doctoral work with Kismet. And of course, the movie AI is about this robot, emotions, the whole questions of robot in society capable of emotional attachments and all of that. It was really fascinating.

I had done interviews before that, but it was the first time that I really engaged with the media in the context of-- not only the movie, but really thinking far, far, far, far, into the future. And a lot of the interviews I had done prior to that were much more scoped around the work that I'm doing now. And the immediate next steps. This was really a much bigger, bigger arc of looking forward to the future. So it was a lot of fun. It was a lot of fun.

Obviously, I think you just have to understand as you engage with Hollywood, their number one priority is telling a great story. I mean the scientific accuracy is really kind of secondary or tertiary.

INTERVIEWER: Is that challenging for you to have to see that?

BREAZEAL: I think you just have to acknowledge that what they're trying to do is tell a great story. And to the extent that you can help them make it a little more plausible, if that's their mindset, that's great. That's great. And there's certainly some cases where they actually are very interested in trying to make it much more accurate. But in the world of science fiction and robots in the future, it's usually not their top priority.

INTERVIEWER: But can science fiction sometimes help public understanding? Does it help move people toward an understanding of technology?

BREAZEAL: I think science fiction obviously first and foremost, it inspires. I definitely credit Star Wars for being the experience that sparked my imagination, my passion for this area. And it's not just me. You could talk to almost anyone in my field. Why did you get into AI robotics? They'll talk about often some piece of science fiction that inspired them.

INTERVIEWER: You had a role in the Star Wars exhibit at the Museum of Science in Boston.

BREAZEAL: That's right. And that was great because that was again, kind of one of those full circle moments of first seeing the movie as a kid, and now being actually part of the exhibit as real world robotics.

INTERVIEWER: What were you doing in that exhibit?

BREAZEAL: There were a couple of ways that they used interviews that I had done on social robots. And I wrote a chapter-- there's a beautiful hardcover book that was done called Star Wars: Where Science Meets Imagination. I did a chapter for that, in terms of human cyborg relations. Or human robot droid relations, which was a lot of fun.

But the pinnacle was there was a sand crawler part of the exhibit. And you go into the sand crawler. And there are all these robots. And it was a conversation between me and C-3PO. And Kismet was actually one of the robots in the sand crawler, along with all the other droids.

So it's like, when you saw the sand crawler and you saw all of the droids in the movie. It was like that. But there was Kismet. And so I did an interview with C-3PO about AI, what it is today and where it's going to go in the future. So that was fabulous.

That was fun. And so that was also obviously a great opportunity to leverage the cultural fascination of Star Wars to help educate people about where the science is today and what's possible. So it was a great experience.

INTERVIEWER: It's just full circle, isn't it?

BREAZEAL: Yeah, it's funny how sometimes that works out.

INTERVIEWER: Are there things about working with robots that helped you think about philosophical questions about humanity, and what does it mean to be human? And what can robots teach us in that vein?

BREAZEAL: Again, going back to science fiction movies and our literature, if you think about our fascination with robots, it really goes much further back than that. So if you go back to ancient Greece and Hephaestus, in many ways he was building robots. He talked about maidens made of gold who could sing and speak to you. Or chariots and everyday objects that would move on their own, almost like the enchanted castle in Sleeping Beauty. So the idea of robots has been with us for a very, very, very long time.

And it's interesting that you can now almost map with each big technological innovation around the ability to make much more sophisticated mechanics, or of course, computation. It's not long after that that the next generation of scientists and designers create the next generation of machines in our image. And then it goes into our science fiction. And I think the bottom line is, why do we do that? It's because robots have always been sort of the not quite human other for us.

And because of that, they are almost a mirror we hold up to ourselves to think about and to self reflect. What does it mean to be human? Do we have convictions over our values today when we put up a robot as being in that position? So robots have always been a really powerful thought experiment for us to reflect upon ourselves, to reflect upon society. And I think that's really been our enduring fascination with them.

It's perhaps the only technology I can think of that has been such a long part of our cultural dialogue across humanity and civilization. And so now that we're kind of reaching this next generation of robots that are really starting to capture aspects of those real dreams, I think it's a fascinating time. And people talk about the exponentiality of course of computation. And the rate at which we're connecting, not only people through networks, but things, and the internet of things, and the accumulation of big data and digitization. And then these recent advancements in AI and machine learning to make sense of that data. And to make these artifacts smarter, and more adapted to us. And then companies starting to take those characteristics, and not just holding them inside, but building them into platforms and allow other people now to take these very advanced capabilities and put it into their products and services.

So there's this rapid expansion of these new capabilities that are impacting our lives in a whole myriad of ways. And becoming increasingly affordable, increasingly accessible. So I think we are at a very special time where the joke in robots of course, is it was the technology that was always kind of trying to be, but always kind of a little ahead of where the technology was. I think it's all catching up now. And I think we're really on this new trajectory of AI and robotics. Because of all these other factors, this convergence of all these other factors is happening right now.

INTERVIEWER: Is there a line that we should be aware of not crossing? Or is there something that we should be cautious about as we proceed along this route?

BREAZEAL: I think we always need to be mindful. I think with any new technology, because it's new, there's the opportunity to do tremendous good. And really for people like me of course, that's why you do it. You do it because fundamentally, you believe this is going to make the world a better place. This is going to help people in profound ways.

But there's always a flip side to that. And so what are the risks that are exposed by these new methods as well? It's important to be very conscious of both of those sides. And to have certainly at first, a very open dialogue about it. And then as things progress, being able to take more actions if they make sense to do so.

I think right now there's a lot of fascination and projections around superintelligence, and the risks and opportunities associated with that. For me, that's still pretty darn speculative. I think we know that these trends are such that technology will get smarter and smarter and smarter.

INTERVIEWER: What do you mean by the superintelligence?

BREAZEAL: Superintelligence is basically what happens when the intelligence of machines supersedes human intelligence. And then because machines aren't governed by wetware, so to speak, our brains, can it keep going more, and more, and more? So there's been a number of recent books and articles written about superintelligence, or the singularity. And if and when, how we get to that point, it's still a lot of speculation. I think that the question that I always focus on really is, how can technology really support human flourishing?

So when we think about our human values, how can we make sure that technology really helps to support our human values? And we think about the kind of people we want to be, the kind of society we want to be, how can we create technologies that help us get there? And I think technology can play a tremendous role in helping us get there if we design it in the right way to work with again, the broader fabric of society. I'm a strong believer in the democratization of that.

And it's clear that education is becoming-- I think we're at the point where now it's a basic right as much as food, clothing, and shelter. To be able to take advantage of all the knowledge made available through the internet and so forth, it's clear that education is absolutely fundamental. And because of that, and the power and the opportunity of AI to bring high-caliber learning experiences to all-- I have projects that are now really focusing on that. So thinking about the most under-resourced children of the world, or communities of the world, how we can bring technologies to them. Because technology is coming to that in a way that can help address literacy. If you can learn to read, you can read to learn. All the knowledge that's being made available, the democratization that will now be accessible to them.

INTERVIEWER: Where are you doing some of those projects?

BREAZEAL: So this is a project-- it's a collaboration with Tufts University and Georgia State University. And we recently spun off a nonprofit called Curious Learning. And we've made deployments targeting, again, we think about what are the most under-resourced contexts.

Can we show real positive learning outcomes in those extreme contexts? So we have been in countries like Ethiopia and Uganda, where children live so remotely, the entire village is illiterate. And school is literally a three hour walk.

So the bottom line is they have no literacy at all. Can we bring in technologies, solar chargers, and show that children on their own, because there's no adults who can teach them. It's all driven by their own curiosity and their desire to learn. Can they learn these literacy skills? And we've been able to show that's the case.

We've now replicated those findings in overcrowded schools in South Africa where the student teacher ratio is at least 50 to 1. Up to 100 to 1. So although they're in a school, although there's a teacher, there's minimal teacher intervention for a classroom of 100 children. So the tablets supplement the class. And we've been able to show learning gains there as well.

We've done deployments also in the United States. So looking at poverty, at-risk communities, where preschool might be done by lottery. So the result is a lot of children don't go to preschool. So they come into kindergarten and they're not ready to learn. And so we've used this technology essentially as a virtual preschool.

And we've been able to show that having children use these tablets at home, as a virtual preschool, can rival learning outcomes that children who are enrolled in a Head Start program achieve. So these are all early, early results.

INTERVIEWER: And preschool is the target that you're working with?

BREAZEAL: Exactly. We're targeting early childhood learning. And the goal of the project obviously is you start with-- obviously you got to start with the preschool pre-literacy skills. But we want to pull them to the ability to learn at a third grade level. And then at fourth grade, there's that transition to reading to learn. So we want to pull them the whole trajectory.

But we're here at the starting block, which is around these precursors. But we've been able to show really promising early results for that. And of course, there's a lot more work that can and needs to be done. But I think it's a compelling proof of concept that they've been able to replicate these outcomes in very different kinds of under-resourced environments.

INTERVIEWER: And very different cultures.

BREAZEAL: And very different cultures.

INTERVIEWER: Is it being perceived in the same way, or do you have to do-- are they very different projects?

BREAZEAL: Basically, it's the same experience, the same technology experience. And what we're finding is kids are kids. We purposely don't instruct the children at all. So you just give them the tablets. And you basically say, here's something that might help you learn to read.

And that's it. And the children take the tablets and they explore. And we find that within 4 to 10 minutes, the first child figures out how to turn it on. And then they start sharing and showing the other kids how to turn it on. And then they start poking around in it.

Within the first day, they're opening app after app. I mean, it's like fish to water. It's amazing. And then it's incredibly social. It's not the case of one child per tablet with their nose in it.

They're actually sharing these tablets and discovering things, and talking about it. And then working in small groups. And then maybe one child goes to another group of friends and says, we figured this out. Can you do this? So it's these little learning micro-communities within a bigger learning community that seems to be one of the critical social dynamics that makes this work.

And then the adults who were there just basically give a lot of positive encouragement. And it's that positive reinforcement of all the accomplishments these children are making continue to propel them. So they're inherently motivated by the experience. They're getting social reinforcement of the experience. It's extremely promising.

Because it takes a radically different framework on how you address learning and education when you can't rely on a school and a teacher. There may be a school, but it's not a traditional 20 students to 1 teacher kind of ratio. It's a very different model, you understand. And you have to understand how is it that children are learning together on their own to pull themselves along this curricular trajectory? And how do you design a technology that is the vehicle that helps support them on their journey?

INTERVIEWER: Are these more Jibo-like? Or, in other words, more of a unit? Or are these the more anthropomorphic robots that are the intermediaries with these young children? What's it look like?

BREAZEAL: So when we're talking about distributing these technologies out to all the world, it's literally low-cost tablets. Tablets are the technology that you can get out there. And smartphones are proliferating. Eventually it will be things like social robots.

INTERVIEWER: So you would not call this a social robot.

BREAZEAL: I wouldn't call this a social robot, but I would say that a lot of the work that we've done in social robots around kids and learning, are definitely ideas that we can infuse into this kind of experience as well. There's sort of just the practicality of getting a technology that's out there, that you can argue is already starting to proliferate of its own accord. So it's really about the platform. And it's an open platform.

So it's not even just the content that's on the tablets now, but it's framing it as this open platform, by which the world can contribute to help solve this problem. So countries can contribute specific content that they feel is the most appropriate to their communities. But the killer app, so to speak, that we're offering is literacy. That's kind of the core of it. But it's very much designed to be an open platform. And a lot of what I've been learning honestly at Jibo about creating developer communities and platforms is very much helping to inform that work as well.

INTERVIEWER: And how would you connect it to the social robot? I understand why the logistics aren't-- can't be right now. But what is it that you've learned from social robots that makes this tablet so engaging?

BREAZEAL: So it's two things. So on the research agenda, it is this vision of this learning about the child, based on how they're interacting with this experience. And adapting and personalizing it to the child. Understanding that it's not just about the kind of curricular, factual stuff. But it needs to support the social and emotional experience as well.

And understanding that that technology needs to sit in this bigger context that's also profoundly social. That that social interaction of the group of children with these technologies is as much of the engine that makes this work as the content that's on the tablet. And perhaps even more so than the content that's on the tablet. So it's just all the appreciation of how you create experiences, technology-based experiences for children that really meet the multi-dimensional needs of a learning child in their environment.

And then through the AI techniques, being able to understand how we can capture this data, understand what they're doing, use it to improve the content as well as personalize the content. Because really it's as much about understanding how children learn in this way, as it is about then taking those insights and then creating an even better technological vehicle for them. But again, it's just a vehicle. Children are the ones who have to do the learning. The technology can't do the learning for the children.

So it's really the technology is supporting the innate processes within the child, which is very much-- they're driven to learn. They're wired to learn. They're naturally curious. It's just supporting that in order to help them propel themselves along that path.

INTERVIEWER: But how do you use social robots with autism? Just back to the social robots for a moment-- because of the facial connection. Has that been done? Or have you worked with, collaborated with people who might be thinking about that?

BREAZEAL: Absolutely. So I would say one of the earliest domains, from a research standpoint of social robots, has been around working with children on the autism spectrum disorder. And the reasons are simple. So the children on the ASD spectrum find these social robots extremely engaging.

And I think it's fascinating that one of the things that I've learned is that children on the ASD spectrum are often-- they want to interact. They're social. They're just overwhelmed, in some sense, by the complexity of human interaction. So when you present a social robot, it's like a simpler form of that. And it's at that barrier of entry that they can engage.

And so you hear anecdotes again and again, of when a child on the ASD spectrum interacts with a social robot, you start to see social behaviors that they may have never demonstrated before with another person. And in a clinical therapeutic context, that's really critical. Because now the clinician can take that interaction, and now can shape it. So another alumnus of MIT, Brian Scassellati, has done a lot of work with social robots in the context of children with autism. And he talks about how often these social robots almost act as a sort of priming the social pump.

That when children first interact with a social robot, they become kind of more engaged socially, more expressive socially. So then the clinician can get more out of the session with the child. So we're discovering as a community, a lot about this kind of work. So I have not personally done a lot of research with that particular population. I've been focusing much more on typically developing children. But there's certainly a lot of really amazing research going on with that population as well, with just huge promise.

INTERVIEWER: Well, reflecting at the moment now on your teaching at MIT over the years. And you've been here 25 years. Pretty much.

BREAZEAL: Since the early '90s.

INTERVIEWER: And now it's the 30th anniversary of Media Lab. And by the way, I think there's been-- I saw some footage of you as a grad student with Kismet. But what have you noticed about the changes?

It's a big question, but just how has MIT evolved for you over the years? The environment, the culture. It's a reflection on-- and also from being a student to being a teacher. Has the community changed?

BREAZEAL: Has the community changed? I think the qualities of the community that I'm probably always the most sensitive to are basically: is this a community where people can take their passions and make them happen? Is this a place that you can make your dreams come true, so to speak, in an intellectual scientific sense? And are you supported in that? And are students in an environment where they feel that they're able to engage this passion-driven inquiry?

And are they given the freedom to explore and take risks? Because sometimes, it's research. And you don't know if it's going to work out. But do they feel supported, and have the courage to do something that's never been done before? And I was given that opportunity as a graduate student.

And a whole field has happened because of it. And I feel that culture is still very much alive and well today. And I think that's what makes MIT such an amazing place. In terms of the surrounding community, I think obviously this whole Boston area is thriving around the intersection of health and neuroscience and technology. It's obviously thriving around robotics and AI.

And those are obviously-- they're coming together and intersecting in a lot of ways. So again, just the broader cultural context of MIT as this amazing, I mean, truly amazing institution, that's surrounded by other incredible institutions whether they're like MGH, or Children's Hospital, or other companies and other universities. I mean, the intellectual capital in this area, it's truly extraordinary.

INTERVIEWER: And celebrating the 100th anniversary of MIT in Cambridge, and how MIT has impacted--

BREAZEAL: Absolutely. And the opportunities that that affords are very special. Very special. And it's going to be fascinating to see how the academic and the entrepreneurial worlds continue to co-evolve and support one another. I think that's going to be a very fascinating thing to watch. But again, you just feel very privileged to be at MIT at this time, with this culture and the community where you are allowed to do what's never been done before.

INTERVIEWER: And also to reflect at MIT about how it's changed for women here. Now your own personal experience, you didn't have issues about feeling shut out in any way. But you have been on the gender equity committee here? And have you seen things that needed changing? And have they been changing?

BREAZEAL: I have to say one of my biggest frustrations continues to be the number of women in computer science. And what I've discovered is that the percentage of women founders, in startups and in VC-funded companies, is even worse. It's kind of like, whoa, really? So we got a lot of work to do as a nation in changing that. I almost feel maybe there needs to be an XPRIZE in something like this. The first institution, whatever criteria, to graduate, not just admit, but graduate a class of 50-50 in computer science. And I feel like it's such a solvable problem.

INTERVIEWER: So you're saying not just getting women in, but--

BREAZEAL: You've got to get them out. You got to get them out. But I think it takes a village. It's not just about the university. It's about the university working with corporations and schools.

It's got to be a holistic, full-court press to encourage young women when they're still in junior high and high school. Bringing them in to do summer internships either at companies or at the universities, to get girls thinking about, this is actually a place that's really cool. That maybe my perceptions of what it would be like are very different. This is actually a great thing to do. Getting summer internships, getting young women in corporations.

Just setting up that whole community of helping frame a different set of mindsets, I would say, around stereotypes. And I would say it's a lot of nudges now. I think we're beyond the point of these huge shoves, shoving women out. Unfortunately, I still think there are moments when it does happen. But I think as a society, I think we're all on board with wanting to see more women doing this.

And now I think the reason why young women aren't pursuing computer science is-- it's just you go through life and you get nudged by all these different influences. And for some reason, the accumulation of nudges right now is such that women are not choosing computer science. Versus the nudges that I got to pursue it. So I think we need to think about what are all of the nudges that we as a whole community need to do to get more young women down that path. Because it is a fabulous career.