Alan Guth, "The Inflationary Universe" - The Worlds of Philip Morrison Symposium (Day 1)

[MUSIC PLAYING]

GUTH: I brought along Snoopy thinking about my favorite equation. If you don't recognize that equation now, you'll know what it means by the end of today's talk. I got into this business of the early universe starting out as a particle theorist.

In about 1978, I and a reasonable number of other particle theorists began to dabble in the early universe. A lot of our colleagues, at the time, felt that we were motivated mainly by jealousy of Carl Sagan. But I hope to make it clear during today's talk that there were also some motivations which came directly from what was going on in particle physics itself. What I have in mind is the advent of grand unified theories. And to explain that, I'd like to give you a capsule history of what was going on in particle physics over that time period.

When I was a graduate student back in the late 1960s, it was a period that, looking back on it now, really looks like the Dark Ages. At that time, we really understood only one interaction of nature-- electromagnetism. The theory of quantum electrodynamics had been more or less complete since the late '40s, and that theory seemed to provide, as far as we could tell, a perfect explanation of electromagnetic phenomena. But there are three other known types of interactions in nature-- the weak interactions, the strong interactions, and gravitation. And those interactions were really not understood at all.

However, the early '70s were a period of tremendous progress in particle physics. The work of Glashow, Weinberg, and Salam was synthesized to provide a unified model of the weak and electromagnetic interactions. And as far as we can tell, that model works perfectly to describe those phenomena. At about the same time, quantum chromodynamics, QCD, was developed to describe the strong interactions as a theory of interacting quarks. And as far as we can tell, that theory also provides a perfect description of the phenomena that it was intended to describe. So we very suddenly went from a period where we understood only one of the four interactions of nature to a period where we think we understand three of the four.

That led to an atmosphere of euphoria, really, among the particle physics community. And there was a very strong desire to move further, to try to develop theories that would describe phenomena not yet found, phenomena beyond the range of our accelerators. The guiding word behind that movement was unification: the idea that it would be much more attractive to have a simpler theory with one fundamental interaction replacing the three that we understood. Gravity, all this time, was left aside. Gravity at the quantum level only becomes important at much higher energies, so it's safe, in a preliminary way, to leave gravity out.

However, at the energies at which we do experiments, the strong interactions are really quite a bit different from the weak and electromagnetic interactions. And what that meant was that in order to build a unified theory, it was necessary that the unification occur at an energy which is very far removed from the energies at which we do experiments. And that's what led to this fantastic energy scale of about 10 to the 14 GeV, which is a characteristic energy scale of these grand unified theories.

Now, 10 to the 14 GeV, that's about three orders of magnitude beyond what Ken was talking about in his last talk at the beginning. It's about the energy it takes to light a 100-watt light bulb for a minute, which doesn't sound like that much. But again, to have that much energy on a single elementary particle is genuinely extraordinary.
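As a quick arithmetic check on the light-bulb comparison, the sketch below converts 10 to the 14 GeV into joules and asks how long a 100-watt bulb could run on it. The conversion factor is the standard one; the function names are only illustrative.

```python
# Convert the 10^14 GeV scale to joules and compare with a 100 W light bulb.
GEV_IN_JOULES = 1.602176634e-10  # 1 GeV in joules (exact, via the elementary charge)


def energy_in_joules(energy_gev):
    """Energy of a single particle at the given scale, in joules."""
    return energy_gev * GEV_IN_JOULES


def bulb_minutes(energy_joules, watts=100.0):
    """Minutes a bulb of the given power could run on that much energy."""
    return energy_joules / watts / 60.0


e_gut = energy_in_joules(1e14)
print(f"10^14 GeV = {e_gut:.2e} J, about {bulb_minutes(e_gut):.1f} minutes of a 100 W bulb")
```

The result is about 1.6 times 10 to the 4 joules, a 100-watt bulb for a few minutes, so the comparison holds at the order-of-magnitude level.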

One way of seeing how extraordinary it is is to try to imagine building an accelerator to reach 10 to 14 GeV. In principle, you can do that by building a very long, linear accelerator. The energy of a linear accelerator is just proportional to its length. So it's an easy calculation, at least, to figure out how long the accelerator would have to be. And it turns out that, using present technology, the length of the accelerator would have to be almost exactly one light year. Now, it seems unlikely that such an accelerator would be funded in the days of the Gramm-Rudman Amendment.
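Because the energy of a linear accelerator grows in proportion to its length, the estimate is a single division once an accelerating gradient is chosen. The transcript does not say what gradient "present technology" means, so this sketch assumes a round 10 MeV per metre (a hypothetical figure of the order of conventional linacs). With that assumption the length comes out close to one light year.

```python
LIGHT_YEAR_M = 9.4607e15  # metres in one light year


def linac_length_m(target_ev, gradient_ev_per_m):
    """Length of a linear accelerator whose energy grows in proportion to its length."""
    return target_ev / gradient_ev_per_m


target_ev = 1e14 * 1e9  # 10^14 GeV expressed in eV
gradient = 10e6         # ASSUMED gradient: 10 MeV gained per metre (hypothetical)
length = linac_length_m(target_ev, gradient)
print(f"{length:.2e} m, or {length / LIGHT_YEAR_M:.2f} light years")
```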

So what that means is that if we particle theorists wanted to try to see the dramatic consequences of these grand unified theories at the 10 to the 14 GeV scale, we were forced to turn to the only accelerator to which we have any access at all which has ever reached those energies, and that appears to be the universe itself in its very infancy. According to standard cosmology, the universe would have had a temperature with kT equal to 10 to the 14 GeV at a time of about 10 to the minus 35 seconds after the Big Bang. So that is the real impetus for particle theorists to dabble in the early universe.

Now, what I want to talk about today is a scenario called the inflationary universe, and I'll get there towards the end of my talk. If time permits, there's a little bit of new material, which I may or may not get to discuss. I want to begin, though, with basics: I'd like to review the standard cosmological scenario, that is, the scenario without inflation, so that I can point out which defects of this model the inflationary model was designed to solve.

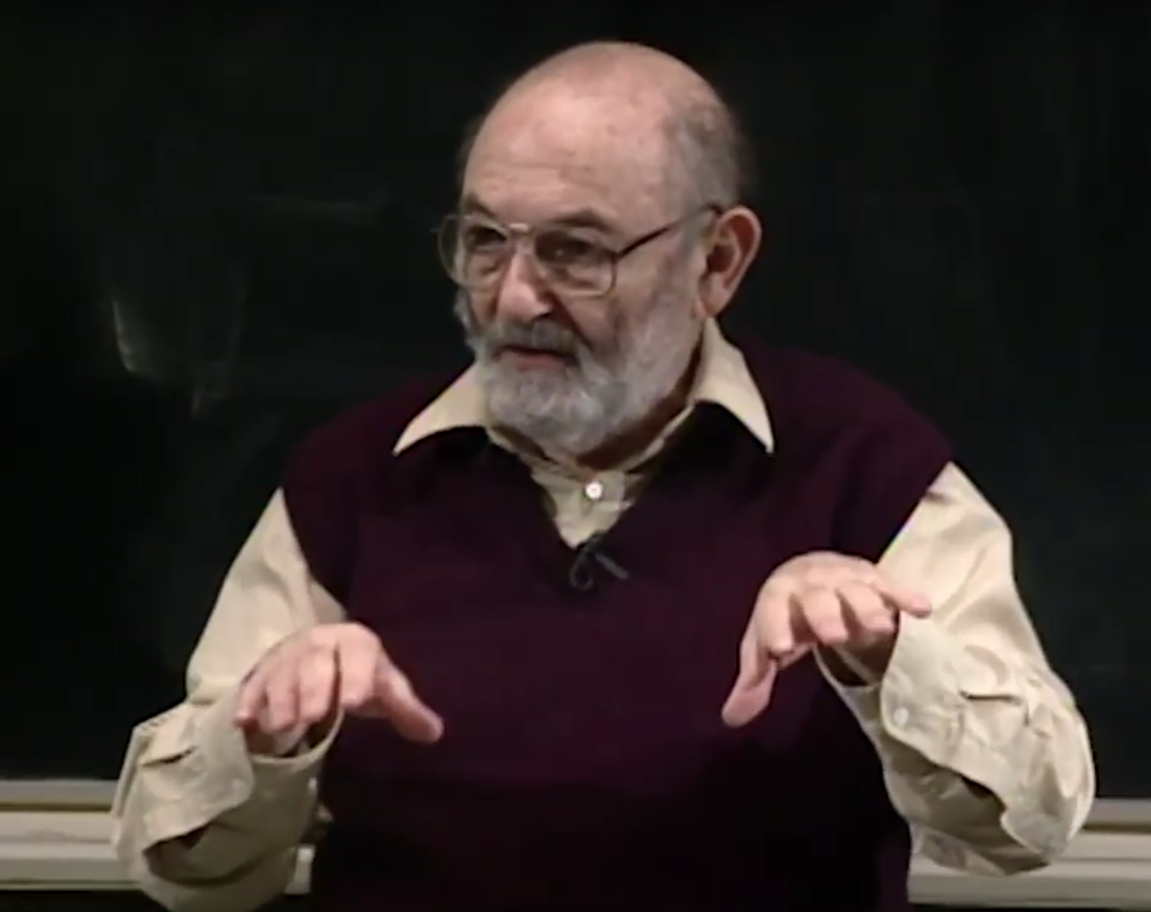

Now, the standard cosmological scenario is something that I learned about in a course that I took as a graduate student once at MIT, a course that was taught jointly by Phil Morrison and Steve Weinberg. I don't remember how well I did in the course. So if I get any of this wrong, it could either mean that I botched it or it may have been one of the lectures that Steve gave. But in any case, we can get it all straightened out, because my teacher is here to answer any questions.

The standard scenario is based on several assumptions, the first of which is that the universe is homogeneous and isotropic. The universe certainly appears to be homogeneous and isotropic. The evidence is, of course, not absolutely compelling. But the assumption that's put into this model to make it simple is that the universe is completely homogeneous and isotropic. The second assumption, which has to do with describing the early universe, which is all I'm going to be speaking about, is that the mass density of the early universe was dominated by the black body radiation of particles which were effectively massless. By effectively massless, I simply mean that their mc squared was small compared to kT, so that the masses were negligible.

The third assumption is that the expansion is adiabatic. That is, no significant entropy is produced as the universe expands; the universe essentially remains in thermal equilibrium. The fourth assumption is that we understand the laws of physics: that general relativity governs the gravitational field and the large-scale structure, and that the small-scale physics is dictated by what we know about how particles behave at high temperatures.

When all this is put together, it leads to a very simple picture of the early universe, a model in which you can calculate everything you want to know. The temperature falls like 1 over the square root of the time variable. The scale factor describing the overall length scale of the universe grows like the square root of the time variable. And just to give you some sample numbers that are relevant to people like me, who are interested in applying grand unified theories to the early universe: the time when kT was 10 to the 14 GeV, the temperature scale of interest, was about 10 to the minus 35 seconds, as I guess I've already mentioned.
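The quoted time of about 10 to the minus 35 seconds can be reproduced from the radiation-dominated Friedmann equation. This is a minimal sketch in natural units, assuming the standard black-body energy density rho = (pi^2/30) g* T^4 and an effective number of massless species g* = 100 (a hypothetical round value; the talk does not give one). It also exhibits the T proportional to t^(-1/2) law: doubling the temperature quarters the time.

```python
import math

HBAR_GEV_S = 6.582119569e-25  # reduced Planck constant in GeV * s
M_PLANCK_GEV = 1.221e19       # Planck mass in GeV


def time_at_temperature(kT_gev, g_star):
    """Age (s) of a radiation-dominated universe when its temperature is kT (GeV)."""
    # Friedmann equation with rho = (pi^2/30) g* T^4 gives
    # H = sqrt(8 pi^3 g* / 90) T^2 / M_Pl; in radiation domination t = 1 / (2H).
    hubble_gev = math.sqrt(8 * math.pi**3 * g_star / 90) * kT_gev**2 / M_PLANCK_GEV
    return HBAR_GEV_S / (2 * hubble_gev)  # hbar converts 1/GeV into seconds


t_gut = time_at_temperature(1e14, g_star=100)  # g* = 100 is an ASSUMED species count
print(f"t = {t_gut:.1e} s")
```

With these assumptions the printed time is about 2 times 10 to the minus 35 seconds; a different g* changes it only as g* to the minus one half.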

The mass density at that time was a colossal 10 to the 75 grams per centimeter cubed. That's the kind of number that nobody would have dared speak about when I was a graduate student. Let me remind you that the density of an atomic nucleus, which was about the densest thing anybody would dare talk about when I was a graduate student, is only about 10 to the 15 in these units. So we're up another 60 orders of magnitude. The size of the region that will evolve to become the observed universe was, at that time, about 10 centimeters. Now that, of course, should not be confused with the size of the entire universe. We have no idea what the size of the entire universe is. It can be arbitrarily larger than 10 centimeters.
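Both numbers in this paragraph follow from the same assumptions. The sketch below, in cgs units, assumes the radiation-era relation H = 1/(2t), which turns the Friedmann equation H^2 = 8 pi G rho / 3 into rho = 3/(32 pi G t^2), and the adiabatic rule that lengths scale like 1/T. The present size of the observed region, 10 to the 28 centimeters, is an assumed order-of-magnitude input, and the estimate ignores the extra heating of photons by later particle annihilations, which would make the early region somewhat smaller still.

```python
import math

G_CGS = 6.674e-8            # Newton's constant, cm^3 g^-1 s^-2
K_B_GEV_PER_K = 8.617e-14   # Boltzmann constant in GeV per kelvin


def radiation_era_density(t_s):
    """Mass density (g/cm^3) at time t (s): rho = 3 / (32 pi G t^2)."""
    return 3.0 / (32.0 * math.pi * G_CGS * t_s**2)


def size_then(size_now_cm, kT_now_gev, kT_then_gev):
    """Adiabatic expansion: the scale factor grows like 1/T, so lengths shrink by T_now/T_then."""
    return size_now_cm * kT_now_gev / kT_then_gev


rho = radiation_era_density(1e-35)
kT_now = K_B_GEV_PER_K * 2.7          # today's background temperature, about 2.7 K
size = size_then(1e28, kT_now, 1e14)  # 1e28 cm: ASSUMED present size of the observed region
print(f"rho ~ {rho:.1e} g/cm^3, size ~ {size:.0f} cm")
```

It gives roughly 4 times 10 to the 75 grams per centimeter cubed and a size of a couple of tens of centimeters, consistent with the order-of-magnitude figures in the talk.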

Now, even though I'm going to be proposing a modification of this model, I do want to point out that the model has some very important successes. And when we talk about modifying the standard model, it's, of course, also important to make sure that you modify it in a way which maintains the successes that the original model had. One important success is that the standard model explains Hubble's law. If you have a uniform system which uniformly expands, if you work out a little bit of arithmetic, you see that Hubble's law immediately falls out. You get a velocity which is proportional to the distance to any given object.
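The "little bit of arithmetic" behind Hubble's law can be sketched numerically: put galaxies at fixed comoving positions, multiply every position by the same scale factor a(t), and measure the velocity-to-distance ratio for every pair, with every galaxy taking a turn as the observer. The choice a(t) = sqrt(t) (the early-universe law quoted above) and the random positions are illustrative assumptions; any uniform scaling leads to the same conclusion.

```python
import math
import random


def scale_factor(t):
    """Assumed early-universe scale factor, a(t) proportional to sqrt(t)."""
    return math.sqrt(t)


def hubble_ratios(n_galaxies=20, t=1.0, dt=1e-6, seed=0):
    """Velocity/distance for every observer-galaxy pair under uniform expansion."""
    rng = random.Random(seed)
    # Comoving positions stay fixed; physical position = a(t) * comoving position.
    comoving = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in range(n_galaxies)]
    a0, a1 = scale_factor(t), scale_factor(t + dt)
    ratios = []
    for i, obs in enumerate(comoving):        # every galaxy takes a turn as observer
        for j, gal in enumerate(comoving):
            if i == j:
                continue
            sep0 = [a0 * (g - o) for g, o in zip(gal, obs)]  # separation at t
            sep1 = [a1 * (g - o) for g, o in zip(gal, obs)]  # separation at t + dt
            vel = [(s1 - s0) / dt for s0, s1 in zip(sep0, sep1)]  # finite-difference velocity
            ratios.append(math.hypot(*vel) / math.hypot(*sep0))
    return ratios


r = hubble_ratios()
print(f"v/d ranges from {min(r):.6f} to {max(r):.6f}")  # both close to 1/(2t) = 0.5
```

Every ratio comes out equal to (da/dt)/a = 1/(2t), whichever galaxy is the observer: velocity proportional to distance.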

The second important success, which has already been discussed in Jim Peebles' talk, is that this model explains the cosmic background radiation. That's because if the universe is expanding, it's also cooling. The early universe would, therefore, have been very hot, and there would be thermal radiation left over from this initial hot period. Finally, as Jim Peebles also discussed in much more detail, the model gives rather successful predictions for the abundances of the light elements. You have a detailed scenario that tells you how the universe cooled, which means that if you know particle reaction rates, you can actually compute how many of the different kinds of nuclei should have been produced. And there is one important unknown parameter in that calculation, and that is the ratio of baryons to photons.

But you have a number of things you can calculate. And the fact of the matter is that for a reasonable value of this unknown parameter, you can fit these various pieces of data quite well. So that is, in addition, a rather, I think, spectacular success of the standard model. However, I want to point out that all of the successes of the standard model only pertain to the behavior of the model at times after about one second after the Big Bang. We really have no direct evidence that the standard model is valid at earlier times. So the inflationary model will make use of that fact, and it will modify the standard scenario only at times which are, in fact, much earlier than one second.

Okay, let me speak next about the problems of standard cosmology that the inflationary model was intended to solve. I want to list four of them. The first is probably the best known, the horizon/homogeneity problem. It has to do with the fact that the cosmic background radiation has been very carefully observed, and it's known to have the same temperature in all different parts of the sky to an accuracy of better than one part in 10 to the 4. Now, normally, when you discover an object with a uniform temperature, you can tell yourself that's easy to explain. If you let a glass of water sit on the table long enough, it will come to a uniform temperature.

But in the context of this standard cosmology, that absolutely cannot happen for the early universe. There just is not enough time. One way of phrasing it is this: regardless of what physical processes were actually taking place, you can imagine you had a network of little green men scattered around the universe whose mission was to arrange for a uniform temperature. They have little ovens, so they can heat things up when they want to, and little air conditioners to cool things down when they want to. But they can only communicate with each other at a fixed speed. And what's easy to calculate is that in order for them to achieve this feat of arranging for the cosmic background radiation to have a uniform temperature, they would have to be able to communicate with each other at more than 90 times the speed of light. That is what's called the horizon problem: there just isn't enough time for the communication to take place.

Now, this is not a genuine, absolute flaw in the standard model. The standard model still works. You simply have to assume in your initial conditions that the temperature was uniform at the start, and then the universe will continue to evolve with a uniform temperature. What it is, though, is a serious lack of predictive power of the standard model. This very dramatic feature of the universe, this incredibly uniform temperature throughout, has to simply be postulated. It could in no way be predicted or calculated on the basis of standard cosmology. And that's the horizon/homogeneity problem.

The flatness problem has to do with the mass density of the universe. I want to speak about the quantity which everybody calls omega, which is the ratio of the actual mass density to the critical mass density. Now, we still don't know very accurately what omega is today. It's somewhere between 0.1 and 2. I think many observers would estimate much tighter constraints than that, but I won't argue with them. I'll just say that, okay, you agree with me it's somewhere between 0.1 and 2. I won't worry about where within that range it might be.

The point is that omega equals one is an unstable equilibrium point of the standard model evolution. That means if omega's exactly equal to one, it will remain exactly one forever. But if it's a little bit off from one, it will immediately start to move further away from one, in whichever direction it started. So it's like a pencil standing on end. So to be anywhere near omega equals one today, which is a very late time in the history of the universe, omega must have been very much closer to one at much earlier times. In particular, if you go back to one second, which is not really an extraordinary time in cosmology-- it's the beginning of the nucleosynthesis processes-- omega had to have been equal to one to an extraordinary accuracy of one part in 10 to the 15 in order for us to be where we are today.
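The required fine-tuning can be estimated with a few scaling laws. In the Friedmann equation the deviation |1/omega - 1| is proportional to 1/(rho a^2), so it grows like a during matter domination and like a^2 during radiation domination, with a proportional to 1/T. The sketch assumes a present-day deviation of order 1, a matter-radiation equality temperature of about 1 eV, kT of about 1 MeV at one second, and no cosmological constant; all of these are rough, hypothetical round inputs.

```python
K_B_EV_PER_K = 8.617e-5  # Boltzmann constant in eV per kelvin


def omega_deviation_at_1s(dev_today=1.0, kT_today_ev=K_B_EV_PER_K * 2.7,
                          kT_eq_ev=1.0, kT_1s_ev=1e6):
    """Scale |Omega - 1| from today back to t ~ 1 s (rough, ASSUMED inputs).

    |1/Omega - 1| is proportional to 1/(rho a^2), and a is proportional to 1/T:
      matter era    (rho ~ a^-3): deviation ~ a   ~ 1/T
      radiation era (rho ~ a^-4): deviation ~ a^2 ~ 1/T^2
    Near Omega = 1, |Omega - 1| and |1/Omega - 1| are essentially equal.
    """
    matter_factor = kT_today_ev / kT_eq_ev         # today -> matter-radiation equality
    radiation_factor = (kT_eq_ev / kT_1s_ev) ** 2  # equality -> t ~ 1 s
    return dev_today * matter_factor * radiation_factor


print(f"|Omega - 1| at t ~ 1 s: about {omega_deviation_at_1s():.0e}")
```

The result is a few parts in 10 to the 16, the same order as the one part in 10 to the 15 quoted in the talk; the answer scales linearly with the assumed present-day deviation.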

So what that means is that in standard cosmology, at whatever time you want to start the universe-- and if you want to start it earlier than one second, you have to postulate omega was even closer to 1 than that-- you have to postulate that omega at that time was extraordinarily close to one in order for the subsequent evolution to agree with what we observe. As far as I know, this was first pointed out as a problem in a paper by Dicke and Peebles, which I think came out in 1979. It's a fairly recent consideration that this should be regarded as a problem, although I should add that the basic facts here, I'm sure, were known since the time of Friedmann in the 1920s.

The third problem I want to discuss I'll call the density fluctuation problem. The point is that although you want to arrange for the universe to be homogeneous on very large scales, on smaller scales there are certainly inhomogeneities that we observe-- galaxies, stars, clusters, this hotel, and so on. And in order for that structure to form, there have to be seeds in the early universe. If you start completely uniform, the universe will simply evolve and remain completely uniform. In the standard cosmology, there's absolutely no trace of a clue as to what the seeds are that formed the galaxies. One simply has to put in a primordial spectrum of fluctuations in order to make the model work. So again, essentially all the important properties of the universe simply have to be put into this model as assumptions about the initial conditions.

Finally, I move on to what I'm calling problem four, the magnetic monopole problem. Of these four problems, this is the only one which is not a problem of cosmology by itself. This is a problem that only arises when you try to combine cosmology with these grand unified theories. The grand unified theories predict that there should be particles, which are magnetic monopoles, that have extraordinary masses, masses of about 10 to the 16 times the mass of a proton. And given the standard cosmology and particle physics, you can try to estimate how many magnetic monopoles would have been produced in the early universe. And the conclusion that you come to is that far too many magnetic monopoles would have been produced. So some modification has to be found somewhere to suppress this production of magnetic monopoles.

That ends my summary of standard cosmology. What I want to do next is to describe some properties of the vacuum, because it turns out that understanding the vacuum really gives you all the physics that you need to understand inflation. The vacuum used to be simple. Back in the old days, the vacuum just meant the absence of anything. And nothing could be simpler than that. Modern physics has changed all that. The vacuum is now about the most complicated structure that we know of, and I'll elaborate on that.

The dictionary definition is something like the absence of matter, or space empty of matter. But that never really was the physicist's definition. The physicist's definition is one which at least allows for complexity. It is simply this: given your theory of what describes the universe, what is the state of lowest energy of that theory? That is what we call the vacuum. It may be simple if you have a simple theory like Newtonian mechanics, where the state of lowest energy is just what you get by taking away all the particles, and then you have a very simple description of the vacuum. But in more complicated theories, the state of lowest energy has the possibility of becoming more complicated, and that is what happens.

The vacuum started to become complicated, at least so far as I know, with the advent of quantum electrodynamics. In quantum electrodynamics, there are two things that make the vacuum complicated. First of all, you have the electromagnetic field. And the electromagnetic field, in quantum electrodynamics, is constantly fluctuating. The reason is basically that you calculate this by decomposing the electromagnetic field into a series of standing waves. Each standing wave acts exactly like a harmonic oscillator. And as you know from quantum mechanics, the ground state of a harmonic oscillator is not at zero energy. It's one half h bar omega. So for each possible standing wave of the electromagnetic field, you get a contribution of one half h bar omega to the energy density of the vacuum.

How many standing waves are there? Well, if you have a cavity and just talk about what goes on in that cavity, there's a maximum wavelength, but there's no minimum wavelength. So you have an infinite number of possible standing waves, and an infinite number of contributions of one half h bar omega. The energy density contributed by the zero point fluctuations is infinite. So the vacuum is not only complicated, it already has divergence problems.
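The divergence can be seen by simply adding up one half h bar omega over the standing waves of a cubic cavity with a short-wavelength cutoff. This sketch uses a simplified mode count (every integer triplet with all components at least 1, two polarizations each) and units with h bar = c = 1; dividing the total by the cavity volume gives the energy density. Doubling the cutoff multiplies the sum by roughly 2 to the 4, so it grows without limit as the cutoff is removed.

```python
import math


def zero_point_energy(n_max, hbar=1.0, c=1.0, side=math.pi):
    """Total zero-point energy, sum of (1/2) hbar omega, over cavity modes up to a cutoff.

    Simplified mode count: wave vectors k = (pi / side) * (nx, ny, nz) with every
    integer n_i >= 1, two polarizations each, keeping only |n| <= n_max.
    """
    total = 0.0
    for nx in range(1, n_max + 1):
        for ny in range(1, n_max + 1):
            for nz in range(1, n_max + 1):
                n = math.sqrt(nx * nx + ny * ny + nz * nz)
                if n <= n_max:
                    omega = c * (math.pi / side) * n
                    total += 2 * 0.5 * hbar * omega  # two polarizations per standing wave
    return total


e20, e40 = zero_point_energy(20), zero_point_energy(40)
print(f"doubling the cutoff multiplies the energy by {e40 / e20:.1f}")  # close to 2**4 = 16
```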

This infinity of the energy density is eliminated in the theory simply by crossing it off your paper. You tell yourself that the zero point of energy is not really relevant to this theory, anyway. You can always redefine the zero point at this level of theoretical development. It only becomes a problem once you couple in gravity. So the energy density is simply redefined so that the vacuum has zero energy density.

However, that's not the only complication. In addition to the fluctuations of the electromagnetic field, there are also fluctuations of the electron field, which one can describe as the appearance and disappearance of electron/positron pairs. And since they appear and disappear rapidly, they're given the name virtual particles. And these give rise to very real and measurable effects, effects which go by the name of vacuum polarization. The vacuum can actually be polarized by an electric field because of the appearance of these electron/positron pairs. And that is a very measurable and very real effect. Vacuum polarization also gives rise to infinities when you calculate it.

It turns out, though, that all of the infinities that the theory produces can be absorbed into redefinitions of the fundamental constants. Of course, they're redefinitions by infinite factors. But once you do these redefinitions, the theory becomes finite. That process is called renormalization, and it's a process which is very, very important in our understanding of particle theory today.

Now, all this has a connection to cosmology through the cosmological constant. It was, as far as I know, Zel'dovich who first realized that Einstein's cosmological constant can be interpreted as nothing more nor less than an energy density attributed to the vacuum, the two being directly proportional to each other. Now, if you take the empirical limit on the cosmological constant and translate that into an energy density, you get a bound on the energy density of the vacuum of about 2 times 10 to the minus 8 ergs per centimeter cubed.
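The proportionality is usually written u_vac = Lambda c^4 / (8 pi G), with Lambda in inverse square centimeters and u_vac an energy density. Assuming that standard form (the formula shown on the slide is not in the transcript), the sketch converts the quoted bound of 2 times 10 to the minus 8 ergs per centimeter cubed back into a value of Lambda, in cgs units.

```python
import math

G_CGS = 6.674e-8   # Newton's constant, cm^3 g^-1 s^-2
C_CGS = 2.998e10   # speed of light, cm/s


def vacuum_energy_density(lam_per_cm2):
    """Energy density (erg/cm^3) equivalent to a cosmological constant Lambda (cm^-2)."""
    return lam_per_cm2 * C_CGS**4 / (8 * math.pi * G_CGS)


def lambda_from_energy_density(u_erg_per_cm3):
    """Inverse relation: Lambda = 8 pi G u / c^4."""
    return 8 * math.pi * G_CGS * u_erg_per_cm3 / C_CGS**4


lam = lambda_from_energy_density(2e-8)
print(f"Lambda ~ {lam:.1e} cm^-2")
```

It gives a Lambda of roughly 4 times 10 to the minus 56 inverse square centimeters.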

Now, actually, Zel'dovich, in his '68 paper, suggested that there may, in fact, be a non-zero contribution to the energy density of the vacuum coming from the QED vacuum. Making that work is actually very hard, at least from a modern point of view. But if there were such a contribution, you can try to estimate by dimensional analysis how large it would be. This is a very interesting calculation, which gives what was, originally at least, a very surprising answer. Now it's known to all particle theorists, at least.

Using just dimensional analysis: the theory really only has one characteristic energy scale, and that's the mass of an electron times c squared, which comes to about 8 times 10 to the minus 7 ergs. The theory also really only has one characteristic length scale, which is the reduced Compton wavelength of an electron, h bar over mc, which is 3.9 times 10 to the minus 11 centimeters. So if you try to make something with the units of an energy density, you really only have one choice. You take that energy divided by that length cubed, and you get a number of about 10 to the 25 ergs per centimeter cubed, a number which is absolutely huge compared to the bound that you have.

So if there was some context in which this calculation made sense, you would have gotten an answer that's obviously wrong by a factor of 10 to the 33. So clearly, what's going on is that something is preventing these kinds of mechanisms from contributing to the vacuum energy, to the vacuum energy that we actually measure. Nobody really knows what that mechanism is, and that's really the important point I want to make here.
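The dimensional estimate is easy to check directly with the numbers quoted above: the electron rest energy divided by the cube of its reduced Compton wavelength, compared with the bound of 2 times 10 to the minus 8 ergs per centimeter cubed.

```python
import math

m_e_c2_erg = 8.19e-7   # electron rest energy (about 0.511 MeV), in erg
compton_cm = 3.86e-11  # reduced Compton wavelength hbar/(m_e c), in cm
bound = 2e-8           # vacuum energy density bound quoted in the talk, erg/cm^3

# Dimensional analysis: the only energy density QED can build is energy / length^3.
u_qed = m_e_c2_erg / compton_cm**3
excess = math.log10(u_qed / bound)
print(f"u ~ {u_qed:.1e} erg/cm^3, about 10^{excess:.0f} times the bound")
```

It prints an energy density of about 1.4 times 10 to the 25 ergs per centimeter cubed, an excess of roughly 10 to the 33.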

In the context of QED, most modern particle theorists would have expected a vacuum energy density of that order. The actual number is much, much smaller than that, and we just do not understand why. The situation gets even more complicated when we go beyond QED to more modern additions to our theoretical arsenal. The next important addition, and it really is a qualitative addition, is the development of the Glashow-Weinberg-Salam model of the weak and electromagnetic interactions. This is what is called a spontaneously broken gauge theory. It turns out that the only way that we were able to think of-- or not we, they: Steve and Shelly and Abdus.

The only way they were able to think of to build a finite theory of the weak interactions, a renormalizable theory of the weak interactions, was to combine it with the electromagnetic interactions and to incorporate in the model, at the fundamental level, a very deep symmetry which would cancel some of the infinities that would otherwise crop up. This symmetry, though, is not a symmetry which we observe in nature. If the symmetry were manifest, it would say that the masses of the W and Z particles would have to be the same as the mass of a photon, that is, zero. They're not. The W weighs 80 GeV. The Z particle weighs 90 GeV. That's what makes the weak interactions, in fact, weak.

Furthermore, if this symmetry were exact in nature, it would say that an electron would be indistinguishable from a neutrino and so, like the neutrino, would be massless. We know an electron is not massless. So the symmetry, which is the fundamental symmetry of the Lagrangian of the theory, the fundamental symmetry of the Hamiltonian of the theory, must not be a symmetry of nature. That's accomplished by a technique called spontaneous symmetry breaking, which is done by introducing a new kind of scalar field, something called a Higgs field. In this particular theory, the Higgs field actually consists of a complex doublet: two fields, phi one and phi two, each of which is a complex number.

But these are scalar fields, and there's a good reason why you have to use scalar fields to break these symmetries: what we're going to arrange is for these fields to have a non-zero value in the vacuum. If something like a vector field had a non-zero value, that would pick out a direction in space and break rotational invariance. Since rotational invariance, we know, is a very good symmetry of nature, if we want to break symmetries, we have to use scalar fields. The scalar field that does that is called the Higgs field.

And it's done by arranging for the energy density in the theory, as a function of the value of the field, to be a curve that looks something like this. Now, the energy density only depends on the sum of the absolute squares of these two fields. That's imposed by the symmetry. The symmetry says it can't depend just on phi one and not phi two; it has to depend on both of them the same way. It has to depend, in fact, on precisely this rotationally invariant combination of phi one and phi two.

But what you do is you arrange-- and you have some freedom about this, since the theory allows you to adjust parameters that control this potential energy function-- you adjust the parameters so that the minimum occurs not at zero, but at a non-zero value. Now, this immediately means that the state of minimum energy, the vacuum, is no longer unique. You can minimize this curve by letting the real part of phi one have a value of 250 GeV and all the others zero. Or you could minimize this curve by letting the imaginary part of phi two have a value of 250 GeV and everything else zero. Or an infinite number of linear combinations in between.
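A minimal sketch of a potential with this property, assuming the common textbook form V = lambda (|phi1|^2 + |phi2|^2 - v^2)^2 with v = 250 GeV as in the talk and a hypothetical coupling lambda = 0.1 (conventions for normalizing the Higgs field vary). Because V depends only on the rotationally invariant combination, every configuration with |phi1|^2 + |phi2|^2 = v^2 is a minimum, while the symmetric point phi = 0 sits on top of the hill.

```python
import cmath
import math

V_EV = 250.0   # field magnitude at the minimum, in GeV (value quoted in the talk)
LAMBDA = 0.1   # HYPOTHETICAL self-coupling; only the shape of the curve matters here


def potential(phi1, phi2):
    """Symmetric potential: depends only on |phi1|^2 + |phi2|^2, minimized when that equals V_EV^2."""
    r2 = abs(phi1) ** 2 + abs(phi2) ** 2
    return LAMBDA * (r2 - V_EV**2) ** 2


# A few of the infinitely many degenerate vacua
vacua = [
    (V_EV + 0j, 0j),                     # real part of phi1 nonzero, everything else zero
    (0j, 1j * V_EV),                     # imaginary part of phi2 nonzero
    (V_EV / math.sqrt(2) * cmath.exp(0.3j), V_EV / math.sqrt(2)),  # a mixture
]
for phi1, phi2 in vacua:
    print(potential(phi1, phi2))   # essentially 0 for each: all are states of minimum energy
print(potential(0j, 0j))           # the symmetric point phi = 0 is higher, on top of the hill
```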

So there are many vacua, and what happens in this theory is that very early in the history of the universe, at about 10 to the minus 11 seconds, the universe settles into one of these vacua. And the choice that the universe makes at that time is the choice which breaks the symmetry. Once, say, the real part of phi one acquires a non-zero value while the others are all zero, that breaks the symmetry: you no longer have a symmetry under rotations between phi one and phi two. That's exactly how spontaneous symmetry breaking works, and that's the entire purpose of having this field in the theory in the first place.

Now, you can estimate how much energy is involved here. I've drawn this curve the way it's usually drawn, contrived so that the minimum lies at zero energy density. But the theory does not impose that; you do it by adding an arbitrary constant to the energy. Once again, the real zero of energy is not really defined. It doesn't become defined until you actually have a theory that couples to gravity. But you can estimate the characteristic energy scale that's involved here. How high, for example, do you expect the energy density to be at the top of the hill? And therefore, how precisely did you have to fine tune things for it to be exactly zero at the minimum?

The characteristic scale is found by taking the same formula we used for quantum electrodynamics, where we had the mass of an electron, and replacing it with the mass of the W particle, the characteristic particle of the Weinberg-Salam model. That has an mc squared of 80 GeV. And when you put it in, you get a number that's about 10 to the 45 ergs per centimeter cubed. So now we're too high compared to the observational limits on the cosmological constant by a colossal factor of 10 to the 53. We're not finished yet.

The next important development is the one that really underlies the inflationary scenario: grand unified theories. In grand unified theories, you have a repeat of the same story, but you have to add a much higher mass Higgs field, a Higgs field with a mass on the order of 10 to the 14 GeV. And when you plug that in to find the characteristic energy scale that you'd expect this theory to contribute to the vacuum energy, you get a number which is too high by 108 orders of magnitude. We're still not finished.

I should mention here that we're finished as far as what we need to know for inflation, so everything that comes from here on is sort of trimming. And I should also mention I don't really understand what comes from here on, either. But I'll give you a brief summary. The next most sophisticated level of theory is supergravity. The idea of supergravity is that the fundamental scale is the scale of gravity itself, the scale you make out of Newton's capital G, known as the Planck mass, which is 10 to the 19 GeV. And when you plug that into our famous formula here to convert it into a characteristic energy density, you get 10 to the 114 ergs per centimeter cubed, a number that exceeds the experimental bound by a full 122 orders of magnitude.

Now we're finished, as far as I know. Basically, we're 122 orders of magnitude off. So what's going on is that there's some mechanism that we don't understand which is suppressing the cosmological constant by about 122 orders of magnitude below what we would naively expect it to be.
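Every estimate in this part of the talk has the same form, so it can be written once as u ~ (mc^2)^4 / (h bar c)^3, which is just the rest energy divided by the cube of the reduced Compton wavelength. The sketch below tabulates it for the electron, W and Planck scales quoted in the talk and compares each with the 2 times 10 to the minus 8 ergs per centimeter cubed bound. Note that u scales as the fourth power of the mass, so each factor of ten in an assumed mass scale moves the estimate by four orders of magnitude; other scales can be added to the dictionary.

```python
import math

HBAR_C_GEV_CM = 1.973e-14  # hbar * c in GeV * cm
ERG_PER_GEV = 1.602e-3     # 1 GeV in erg
BOUND_ERG_CM3 = 2e-8       # vacuum energy density bound quoted in the talk


def natural_vacuum_energy(mass_gev):
    """Dimensional estimate u ~ (m c^2)^4 / (hbar c)^3, returned in erg/cm^3."""
    return mass_gev**4 / HBAR_C_GEV_CM**3 * ERG_PER_GEV


scales_gev = {
    "electron (QED)": 0.511e-3,
    "W boson (electroweak)": 80.0,
    "Planck mass (supergravity)": 1.22e19,
}
for name, mass in scales_gev.items():
    u = natural_vacuum_energy(mass)
    print(f"{name:28s} u ~ {u:.1e} erg/cm^3, {u / BOUND_ERG_CM3:.1e} times the bound")
```

The printed excesses are of order 10 to the 33, 10 to the 53 and 10 to the 122, matching the ladder described in the talk.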

Now I want to comment on what Jim Peebles mentioned, that particle theorists are usually skeptical about any notions that there might be a meaningful cosmological constant that's relevant to cosmology. The argument is, I think, not a compelling argument, but there is an argument. And basically, the way I would phrase it, it goes like this.

If this unknown mechanism exists and suppresses the vacuum energy by 122 orders of magnitude, it seems highly unlikely that it stops exactly there. You would think it would go for at least another two orders of magnitude, or maybe another 10. And any of those would mean that the cosmological constant would be irrelevant. The only way to get a relevant cosmological constant would be for the suppression to be exactly by this 122 orders of magnitude, which just seems unlikely.

Now, as for how to rate this argument, I would say that it's roughly the theoretical equivalent of a three-sigma experiment. As we know with three-sigma experiments, you never really know if you should believe them or not. The same goes, I think, for this theoretical argument.

Now, I want to come to another concept called the false vacuum, which is really what's going to lead us into the inflationary scenario itself. Before, I drew a curve for the energy density of a scalar field, the Higgs field, which looked like this. Let's again consider that we're dealing with a particle theory which has in it a scalar field that behaves this way, and let's ask ourselves, what are the possibilities? When the scalar field sits at the bottom, that's the state of lowest possible energy. That's what we call, by definition, the vacuum. And the word "true" has been introduced here just to be absolutely clear that that's the vacuum.

However, you can ask yourself, what happens if the scalar field is perched right here, right on the top of the hill? Well, if this potential is quite flat on the top (which it can be, although it doesn't have to be), then the state where the scalar field sits on top can be metastable. That is, it can live for a certain length of time before the scalar field eventually falls off and rolls down this hill in the energy density diagram. That state, where the scalar field is in the metastable state at the top of the hill, is what we call the false vacuum. It has an energy density which is fixed by this curve, which is in turn fixed by the parameters of the underlying particle theory. And I'll call the energy density of that state rho sub f, f for false vacuum.

Let me go on to describe some of the properties of this false vacuum. First, as I mentioned, the energy density is fixed at rho sub f. No matter what you do to it, you can't change its energy density, because its energy density just depends on the fact that the scalar field is sitting on top of that hill. If you move the scalar field, you can change the energy density. But what we're going to be talking about is changes which happen too fast for the scalar field to move. So we'll consider the scalar field to be temporarily frozen on the top, and given that, the energy density is fixed.

The next thing to notice is that this state is Lorentz invariant. That is, if you have a scalar field which has the same value everywhere in some region, and you were to move through that region and measure the scalar field, there's no way you could detect your motion relative to that scalar field. You would still find that every place where you measure the scalar field, it had that value, in this case zero. So it's Lorentz invariant: motion through the state cannot be detected. And that's really the excuse for calling it a vacuum in the first place. False vacua and true vacua are the only states of nature which have that property, that you cannot detect motion through them.

Finally, and this is what will really be important for inflation, the pressure of this false vacuum state is negative. And in fact, it's equal to minus rho sub f.
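One standard way to see why, sketched here rather than quoted from this part of the talk, is to imagine false vacuum filling a cylinder closed by a piston, with true vacuum (zero energy density) outside. If the piston is pulled out so that the volume grows by dV, the energy density inside stays fixed at rho sub f, so the energy inside grows; energy conservation requires that this increase equal the work minus p dV done by the false vacuum on the piston. Here rho sub f is an energy density, as defined above.

```latex
\begin{aligned}
dU &= \rho_f \, dV  && \text{(energy density fixed, volume grows by } dV\text{)} \\
dU &= -p \, dV      && \text{(energy conservation: work done on the piston)} \\
\Rightarrow \quad p &= -\rho_f
\end{aligned}
```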