David Baltimore, “Building a Community on Trust” - Ford/MIT Nobel Laureate Lecture Series

[MUSIC PLAYING]

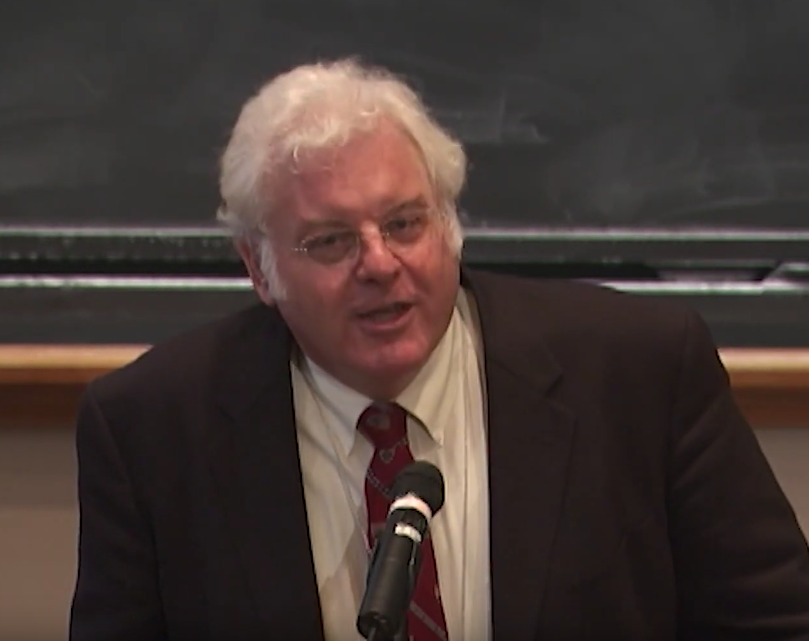

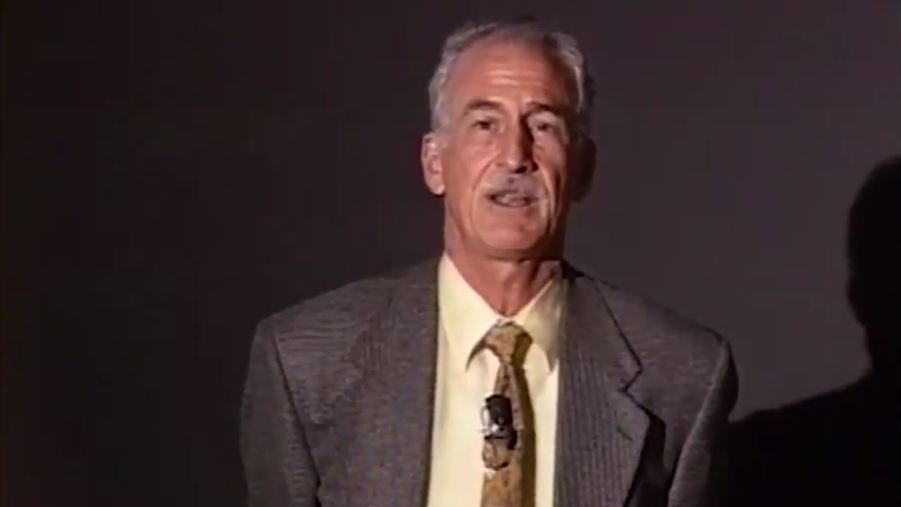

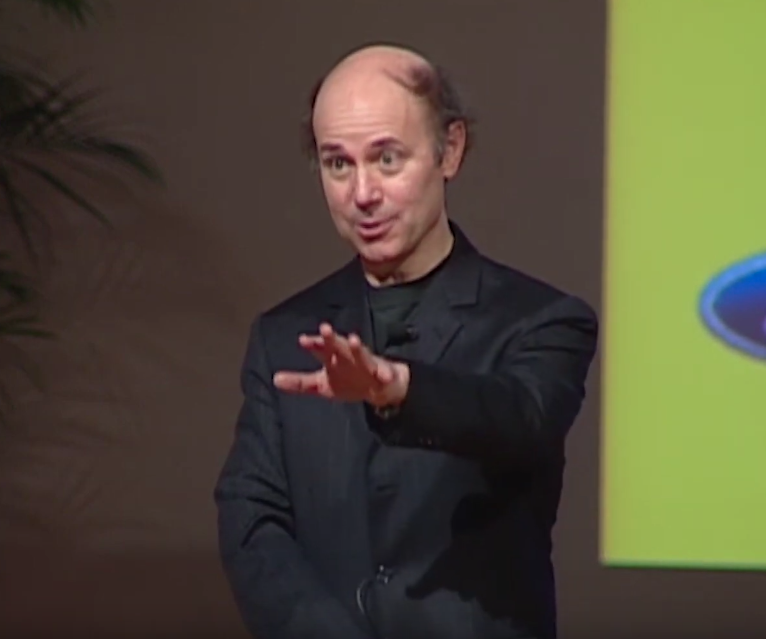

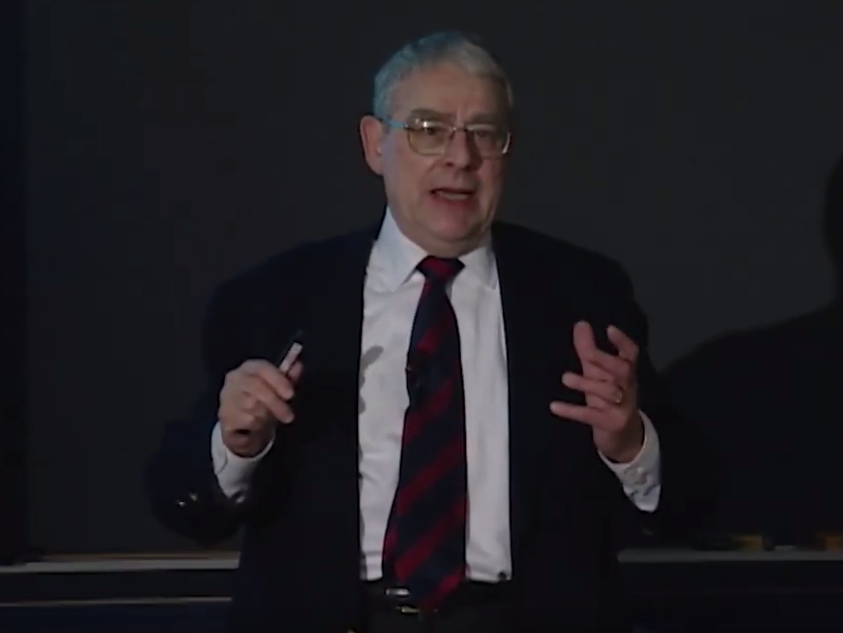

MODERATOR: Good evening. I would like to welcome you to the fourth in the series of the Ford/MIT Nobel Laureate lectures, and we would like to thank Ford Motor Company for their sponsorship of this series. Tonight's speaker is Professor David Baltimore, president of the California Institute of Technology.

Professor Baltimore is not a stranger to MIT. He was a professor of biology in the Cancer Center at MIT for many years, and was the founding director of the Whitehead Institute for Biomedical Research at MIT. In that capacity, and in other capacities, including as president of Caltech, he has made great contributions both to biological research and to national science policy. In fact, he told me he still has a lab at Caltech.

Dr. Baltimore helped to pioneer the molecular study of animal viruses, and his research has had profound implications for understanding cancer and AIDS. He was awarded the Nobel Prize in 1975 for his work in virology, specifically for discoveries concerning the interaction between tumor viruses and the genetic material of the cell.

Dr. Baltimore has been a major figure in the US as head of the NIH AIDS Vaccine Research Committee. He was awarded the 1999 National Medal of Science in recognition of his research achievements, his excellence in building scientific institutions, and his ability to foster communication between scientists and the public.

I'd also like to remind you that there'll be a reception after the lecture in the lobby. His talk tonight is entitled "Building a Community on Trust." David.

[APPLAUSE]

BALTIMORE: It's a great pleasure to have the opportunity to be back at MIT, to be back in Kresge Auditorium-- one of the great venues in the world-- and to see many old friends. I'm particularly happy that Dr. Zella Luria came here tonight, and I thank her. Her husband, Salvador Luria, as many of you will know, played a central role in my life, played a central role in the life of MIT, and was himself a Nobel Laureate.

When I was asked to do this, I faced a difficult choice because, although I have ascended-- some might say descended-- to the role of president of a university, I still find it easiest to discuss molecular biology. It's second easiest to discuss Caltech, because those are things that I know very well. But neither of them seemed right for this occasion.

Chuck Vest and Bob Silbey had certainly not invited me because they wanted to learn about transcription factors or about signal transduction in the immune system. I think they were looking for something of wider interest. And I don't think they wanted my fund-raising pitch for Caltech.

[LAUGHTER]

So instead of talking about something that I personally know well, I thought I would take on a topic that has been a concern of mine for a very long time, which is the critical role of trust in human relations. I hasten to assure you that I know this is turf on which I am crazy to tread. Trust is at the heart of philosophical theories about human motivation, about which my own knowledge is minimal. So I approach this as an amateur.

Trust is a central part of our lives, but an ephemeral concept. It is like the golden rule-- you can trust me if I reciprocate, and then we have an effective relationship and community. But trust has been falling out of fashion. With the methods of surveillance we have today, and with the power of computers to detect fraud, it might seem that we can enforce moral behavior. We can put our trust in our methods of control, not in people.

But I think September 11th and the Enron scandals have raised serious doubts about our ability to use technology to assure transparency and moral behavior. As far as I can see, trust is more important than ever, because technology may help in monitoring behavior, but it also provides the tools for obfuscation-- that's the hardest word in the English language to say-- chicanery, and downright fraud.

Two of the formative events in my life took place at MIT and both centrally involved trust. The first was the formation of the Whitehead Institute, which took place 20 years ago. And for those of you who do not remember, let me remind you that when Jack Whitehead offered to fund this Institute with $135 million of his own money, the response was not joy but suspicion.

That suspicion was fueled from various fronts, including the Boston Globe and certain members of the MIT faculty. I had been tapped by Jack to try to build this Institute, but he wasn't particularly committed to MIT as its host. It was I who desperately wanted to be here, and so there ensued a year of negotiation in which I tried-- ultimately successfully-- to convince the MIT community that Jack Whitehead was not trying to hijack its essence to his own ends, but rather was trying to build an institution that would add to the intellectual power of MIT.

We crafted an affiliation agreement that governs the relationship between these two institutions to this day, but we all knew that no piece of paper could define all of the interactions. There had to be trust. The year was actually spent developing that trust.

I remember the final faculty meeting where the relationship was cemented, and the argument that carried the day was that MIT was built by taking chances-- meaning that we had developed only the minimum level of trust necessary. And it took years more before it was evident that the Whitehead Institute wanted nothing more than to be a productive member of this community.

The other formative event was the affair of Thereza Imanishi-Kari. This evolved over 10 years and has been chronicled in books, and so I will not attempt to recap it here. However, at its heart was the trust necessary to carry out collaborative scientific work. As I will say later, I think this is a fundamental aspect of the scientific enterprise, especially today, as science is becoming more interdisciplinary.

That she was ultimately vindicated in her assertion of the rectitude of her behavior showed that my trust in her was well-placed. However, it underlined a critical aspect of any collaborative activity, which is that both sides have to satisfy themselves that their trust is justified.

It's a tricky business, because it involves recognizing that a bit of suspicion is appropriate, even while the relationship must be a trusting one. A friend of mine who writes about how organizations function puts trust at the center of his analysis, but he says that trust is something you have to give upon entering a relationship with another person.

In science, it is true that we assume that a colleague is trustworthy, and only in extremis do we doubt his or her honesty. When a colleague whom we may never have met comes to give a seminar, we don't ask that the colleague prove his or her veracity; we assume it. But in a collaboration, where both parties will ultimately have to vouch for the integrity of the results, testing the trustworthiness of a collaborator-- generally in subtle ways-- seems a good idea.

These days, I continue to direct a laboratory, as was just mentioned. But I have limited time, as you might imagine, to spend with my trainees. Therefore, I just have to have a deep trust in them. For instance, we just published a paper describing a new method of making transgenic mice, which is an unusual area of investigation for me to be involved in. On the other hand, I had no reason to doubt the findings. It's, after all, hard to fabricate a green mouse or rat, which is what we made. But I was glad that there were a few people working closely together on this project.

And actually, it is really rare to come across fraud in science. Many postdocs and graduate students with whom I've worked have been searingly honest. Checking, on the other hand, is not a poor idea. In world affairs, trust is not in vogue today. Its antithesis is war, and we are living with war. Rather than the open and trusting society we had not long ago, we now have airport guards screening our shoes, border guards screening us at every portal of entry to the country, and an Olympics of FBI agents.

We are grateful for the protection, because we have been shown that the world harbors enemies who will use trust as a shield to gain entry to our country, to our facilities, and to our transportation systems. The wonderful thing about the academic world is that trust remains a foundation of all of our activities. It goes beyond the trust of colleagues that is the foundation of any intellectual community. An important part of the academic process involves the trust that students place in their teachers.

I'm sure we all remember the terrible case of Joseph Ellis-- the Mount Holyoke historian who invented his tour of duty in Vietnam, presumably to present himself in a more exciting light to his students. When it became known that he had never had the experiences he recounted, the whole academic community was horrified.

He had not lied in his teaching or his scholarship, but even in just lying about himself he had broken the trust that students place in their teachers. Honesty in the relationship between students and teachers is rarely questioned; trust is freely given, and the rarity of cases like that of Ellis justifies that trust.

Another component of the trust of academia is the trust that parents give that their offspring will be treated with care and respect when they send them into the academic world. Caltech and MIT get a very similar sort of student, and they're often headstrong and difficult to manage. But they are our responsibility, and living up to the trust placed in us is a challenge we cannot shirk.

Trust plays a very special role at Caltech, because both undergraduate and graduate student life is built around an honor system. The honor code of Caltech states, quote, "No member of the Caltech community shall take unfair advantage of any other member of the Caltech community." It's an awkward phrase, but it works. As our catalog says, it is more than merely a code applying to conduct in examinations. It extends to all phases of campus life. It is the code of behavior governing scholastic and extracurricular activities, relations among students, and relations between students and faculty. It's adjudicated by a Board of Control made up of students.

Is it perfect? No. But we in the Caltech community live the honor code by giving all of our examinations as take-home exams, governed by whatever particular set of rules the examiner has laid down-- open book versus closed book, time for completion of the exam, et cetera.

Now, I suppose there is a little cheating, but basically it works. And alumni say that the honor code is the most important lesson of a Caltech education, because it sets a standard for their behavior for the rest of their lives. They have seen a community based on trust and on personal integrity, and they know the value of trust in creating a community of interests.

Trust does break down in the academic world when there's misrepresentation, plagiarism, or fraud. And many of us can remember that in the early 1990s, this was a big issue, because politicians were arguing that fraud was rampant in science, and the press picked up the issue. That climate was at the heart of the personal events I lived through.

The suspicion of the rectitude of scientists has quieted down, at least partly because the community put in place procedures for dealing with allegations of fraud. My belief is that it was never a big problem, that probably the rate of incidents has neither increased nor decreased, but that the visibility of the problem has dropped away.

It is interesting that today, not science, but history is the field where both plagiarism and personal misrepresentation have been major issues. Personal integrity is crucial in the field of history, because the historian is always making choices about what facts to emphasize, and is often interpreting the motivations of historic figures.

It's important to remember that science, too, has a subjective component: scientists select which data to present. Science may be more objective than history, but all intellectual endeavor depends on personal integrity. Trust plays different roles in different types of science. A single investigator working on a problem can do personal science requiring trust in no other individual.

However, so much science today is group science, where many people contribute to a single result, and group science depends on trust. This dependence becomes magnified as the number of interacting people increases. In projects that qualify as big science, hundreds of scientists may collaborate. Actually, the word "science" subsumes many different kinds of investigations. A simple categorization might distinguish three types of scientific investigation-- observational research, hypothesis-driven research, and technology development. Let's look at them individually.

Observational science is the modern incarnation of the chief mode of studying the world in early times. Primitive people looked around, saw the world given them, and began to catalog it. They named the plants and animals. They named the constellations. They made hypotheses about how the world came about, which then became enshrined in myth or religion. But rather than testing such ideas, they accepted them and allowed them to shape their worldview.

Today, instead of these kinds of observations, we observe molecular structures, we observe gene structures, we observe protein-protein interactions. We still do a lot of science with the goal of describing the world, but at finer and finer scales of resolution. Our accelerators and our observatories are often designed to answer particular questions, but the rationale for their construction is also to extend the catalog of observations to new energies of interaction, for instance, or to new depths of the universe.

Observational science today is done on a very large scale. We biologists have long looked into microscopes and gasped at the intimacy of nature, but the scale of today's observational science represents a qualitatively new mode of investigation. The most massive enterprise, of course, is the Genome Project, which is giving us catalogs of information about the genetic inheritance of many organisms of this earth. It involves the work of hundreds of scientists, all of whom must trust each other.

At Caltech, we are involved in one of the great projects of 21st century observation. We're part of a consortium making a virtual observatory that will link the observations of the sky, made at all different wavelengths, by different instruments, in one terrifyingly huge, but widely distributed database.

When it is complete, you will be able to pick a spot in the sky and get all of the information available about that spot, in an orchestrated search of many individual databases held in institutions around the world. It will include data at visible, infrared, ultraviolet, radio, and X-ray frequencies. From recent work at Caltech and elsewhere, we may even include the microwave background variation, giving evidence of what that region of sky looked like in the years just following the Big Bang.

I should add that Caltech and MIT are jointly involved in creating one of the most imaginative new observatories-- LIGO, the Laser Interferometer Gravitational-Wave Observatory. This will look for a new form of radiation-- gravity waves, which have yet to be seen but were firmly predicted by Einstein as a consequence of general relativity. Firmly means everybody believes they will be seen.

Information from LIGO will ultimately become part of the virtual observatory, but for the moment we're just hoping to see a signal of some sort when it starts collecting data next year. There are many theories predicting results, but since no one's ever seen the evidence of a gravity wave, this is the ultimate form of observational science, where just making an observation of any sort will be considered a huge victory.

The trouble with big science is also its great strength-- it involves many people working together towards a particular end in a collaborative enterprise in which the contribution of the individual is often obscured. Furthermore, it often removes from consideration one of the chief motivations for becoming involved in science-- the ability for an individual to choose the questions he or she wishes to work on.

Actually, when students come to me and ask what the difference is between university-based science and corporate science, I think that's the main difference to point to. In academia, you're your own boss and can do anything you wish, with the only limitation being finding the money to do it. In companies, you're usually constrained to work on just those problems that contribute to corporate goals.

Early on in the development of biotechnology, company scientists had a lot of freedom, but that freedom has been severely restricted as the industry has matured. So big academic science and corporate science have homologies. And one thing is certain: if you want to develop your own individuality in science, you're better off working in a small-science framework. Big science projects are not good venues for training the next generation of scientists.

I suggested before that there are three modes of science. The second-- hypothesis-driven science-- is often done in a small-scale mode by an investigator working closely with a small group. But high-energy physics, the quintessential big science, is generally hypothesis-driven. In biology, the hypotheses may come from prior observational science, from an earlier hypothesis-driven experiment, or even occasionally from theory. In high-energy physics, theory is often the driver.

Interestingly, astronomers use enormous observatories that are run by dedicated staffs, and that are very expensive to maintain. But whether they're developing catalogs of celestial elements, or are testing particular ideas, they work in a classic small science mode, with a single investigator making observations. Today, that investigator is no longer in a freezing cold chamber open to the sky, but in a cozy computer room, often at a significant remove from the chilled mirror collecting the light.

The third mode of science I differentiated before is one that is rarely emphasized. Technology development is often considered to be engineering and separated from science. But when I came to Caltech, one of the discoveries I made about my job was that I was responsible for operating the Jet Propulsion Laboratory.

There, 5,000 people labor to produce spacecraft that either orbit the Earth or that go out into the solar system. These spacecraft are designed to do science. The Hubble telescope is the most notable of such spacecraft, but there are lots of others working at other wavelengths and looking at other phenomena.

There are spacecraft observing Mars. There are ones circling the Earth looking for meteorological events-- for instance, El Niño. And there's even one up there collecting particles of the solar wind to return them to Earth, so we can understand what the sun is made of. These spacecraft are all doing science, so their design and manufacture are a central part of the scientific enterprise.

In the 1980s, Lee Hood, then a professor at Caltech, had the idea that technology could advance biology, much as technology was at the center of space science. So he developed a group to build hardware, and their most notable achievement was the automated DNA sequencer. Again, science was the goal-- the ability to sequence large amounts of DNA-- but technology development was the activity that they pursued. And the Human Genome Project, which depended entirely on having automated sequencing, was the proof of how right Hood was to concentrate on this then very unfashionable element of biological science.

For spacecraft, technology development involves very high-end engineering, because of the unique constraints of working in space. The most obvious of these constraints is the cost of a single satellite, which ranges from a few hundred million dollars to a billion or more dollars. With that kind of investment in just one instrument, it has to be built to very high tolerances to minimize the possibility of failure.

Because of the enormous propulsive forces that are needed to leave the Earth's atmosphere, or even to get into orbit around the Earth, another constraint is weight, with a huge premium put on miniaturization. Finally, power availability on the spacecraft is a key constraint, making low-power instruments a necessity.

Thus, literally thousands of people's work may be embodied in a single satellite. Do they all trust each other? Actually, while they certainly are interdependent in their activities, they have developed engineering systems that check on the functioning of each component. They put each satellite through a battery of tests, often subjecting it to conditions thought to be much harsher than those it will encounter when it flies in space. This is not because of a lack of trust, but because at that level of complexity trust is simply not sufficient. You have to be sure.

One reason spacecraft are so phenomenally expensive is the need to monitor each step and to test continually. This was proven in the late 1990s. You may remember that the then NASA Administrator, Dan Goldin, introduced the mantra of faster, better, cheaper. He wanted more satellites at a lower cost per mission.

Well, the consequence was that two probes sent to Mars failed, each because of a very minor error. In one, a conversion from English to metric units was missed. In the other, a software loop was missed. The consequences for the Jet Propulsion Laboratory were catastrophic, yet each was a tiny error-- more than 99% of the technology worked perfectly.

The lesson we learned was that you could not skimp on testing, because in both cases the kind of extensive testing that had previously been done was skipped in order to satisfy a straitened budget. It was an expensive lesson, but one that has been learned. So in some kinds of highly interdisciplinary science, trust is simply not enough-- not because of conscious error, but because of the catastrophic effects of even inadvertent error.

But most interdisciplinary science is not so demanding of perfection. It involves a few people bringing complementary skills to a project. But because each has a separate skill, they cannot easily check on each other, and one generally has to accept the work of another as being reliable. Thus, the push toward interdisciplinary science, which is evident today throughout the academic world, particularly in studying questions posed in biology, requires a high degree of trust.

Let me spend a moment on the importance of interdisciplinary work in biology. Particularly generative of interdisciplinary approaches are the massive observational experiments that are underway. The first of these was the sequencing of genomes, which required the skills of mathematicians, computer scientists, engineers, geneticists, and scientific entrepreneurs to generate and handle the huge volume of data. But now, with the genome as a resource, these same complementary strengths are being applied to screening operations designed to ferret out regularities that will be suggestive of underlying biology.

And there's much more to interdisciplinary activities in biology. For instance, there's extensive work on new imaging systems that bring physicists and chemists together with biologists. There are efforts to use the brain to control prostheses that meld the skills of roboticists with neurophysiologists. There are efforts at miniaturization of screening methods that bring applied physicists and engineers together with biochemists.

One of the key reasons that trust is more critical than ever in science is that we have powerful new tools that can be used for data manipulation. Photoshop is a paradigm here-- a program that can create an artificial reality. It is interesting how easily we accept the manipulation of reality today when, for instance, we go to the movies. In a sense it is the ultimate realization that photography is an art, not a chronicle of reality.

When Man Ray went into the darkroom and created his rayographs in the 1920s, the art world was shocked that photography could be a medium in which one painted abstractions directly on film. Today, we accept with equanimity computer-generated images as part of the artistic process, whether in still images or in movies.

Basically, we suspend trust when we enter the realm of art, and consciously put ourselves in the hands of the artist. This contrasts completely with the scientific world, where we trust implicitly that reality has been preserved and not created.

The problem is that the tools are so powerful and so pervasive that much data is selected, digitally analyzed, and possibly enhanced or modified for effectiveness of presentation. This underscores the need for trust, and, of course, puts an onus on the investigator to be transparent about the relation of the presented data to the original.

In the larger world, trust is a key to commerce. Recently, the Enron scandal has emphasized how completely investment was a matter of trust. Enron was able to hide its financial manipulations from scrutiny, but people invested anyway, taking at face value whatever information the company presented. Trust is particularly out of vogue today in the investment world, because so many people got burned by Enron and by other companies that obscured their reality.

I've been involved for years in biotechnology investment, and I know firsthand how difficult it is to get reliable information about any company. One tries to minimize the need for trust, but without becoming an insider and, thus, greatly limiting your freedom as an investor, you cannot get much information about the inner workings of a company. Because trust is an inevitable part of any investment decision, the personal integrity of the management of a company becomes a key element in evaluation.

For instance, one of the recent darlings of biotechnology has been a company called ImClone, which has developed a very promising anti-cancer drug. Of course, validation of the effectiveness of a drug like this must be done by the FDA-- the Food and Drug Administration-- if the drug is to get on the market. Therefore, it's critically important to an investor to have an honest picture of the interactions between the company and the FDA.

However, the investment world knew that ImClone had key personnel in its management with less than pristine backgrounds. The difficulty is that if one simply said, we won't invest in a company unless management can be trusted implicitly, we would have missed one of the best run-ups in the biotechnology market in history. So the investment people I work with took the chance. The stock did appreciate. But then it emerged that the management had apparently not been honest about their communication with the FDA, and we, and lots of others, got burned.

Life provides opportunities for big changes. Life sometimes precipitates us into situations for which our backgrounds haven't prepared us. The biggest for me was going from managing a laboratory at MIT to managing first the Whitehead Institute and then two universities-- Rockefeller University and now Caltech.

The movement from the laboratory to the director's or president's office is a huge one, because you go from directing a unit where you have a long term familiarity with the working parts, to one where there are many elements that are at best vaguely comprehensible-- like the HVAC system, or the payroll system, or athletics, or the mathematicians.

[LAUGHTER]

The latter are particularly incomprehensible. So I'm now in a position of running an almost $2 billion operation when you include JPL. And what you discover quickly when you take on such an enterprise is that you are deeply dependent on others to make it go. Each vice president, each head of a department or a division, or even each head of a laboratory, has to be someone in whom you can place your trust. Not just that they will be ethical, but that they will be diligent and effective in their jobs.

Another part of my life is that about two years ago I became a director of Amgen, the world's largest biotechnology company. Shortly after I joined the board, we got a new CEO. And so I've had a chance to watch how he has chosen to manage this $4 billion enterprise.

One piece of it was remarkably simple-- he puts an extraordinary amount of his energy into the people of Amgen. He evaluates them, he promotes them, he interacts with them, he organizes them, and occasionally he even has to terminate them. But he's constantly gauging whether or not they continue to deserve his trust. He was trained at General Electric, where, if I understand correctly, this style of management was honed. He's taught me a lot about how to manage a large institution-- it's the people, stupid.

In thinking about the role of trust in our lives, I must point to our personal lives. Even in our most intimate relationships, with our children and our partners, trust is crucial, and a breakdown of trust paves the way to disaster. The development of the moral sense of children is an illuminating topic, because a parent is always wondering when children have reached the age or the stage when they can be trusted.

My 27-year-old daughter tells me now how she would sneak out at night when she was an early teenager growing up in Cambridge. I didn't trust her to be out, but she believed she deserved the trust and took matters into her own hands. In retrospect, I guess she was right. At least she lived through the experiences to become an adult.

Let me digress for a moment and consider a more general issue-- trust is an interpersonal interaction, one of a myriad of interactions that make up a society. Some of these are rational; many are unexamined. However, it is evident that we're entering a period of scientific investigation of interpersonal interactions, and that this is going to have profound implications, particularly for the social sciences.

Now, like most hard scientists-- some people don't think biology is a hard science, but I do-- I grew up with the belief that the social sciences had appropriated the title of science, but were unable to provide the output of understanding that, at least, I expected of a science. In spite of the often rigorous use of scientific methodology, especially in psychology, the insights were at best good stories. Even economics, for all of its use of mathematics, was unable to explain how human beings make economic choices.

My mother, actually, was one of the last of the Gestalt psychologists. They were a remarkable group of thinkers who tried very hard to develop a mechanistic basis for perception. But they had to fail at this, because they simply did not know enough about the brain to understand how the brain perceives the outside world.

The big change today is that we're learning about where in the brain decisions are made, and we can expect, over the next decade, to learn how decisions are made. In spite of its limited resolution, with magnetic resonance imaging we're able to watch the brains of people-- and also the brains of monkeys-- as they make decisions. This is the beginning of a social science that can explain behavior.

At Caltech, our social scientists are working now closely with systems neuroscientists to begin to generate a science of social interactions. Economists, political scientists, and psychologists are designing experimental systems where humans make choices, and are beginning to see how the brain handles this kind of problem.

Even our professor of finance is now totally consumed by the idea that he can test theories of financial behavior. It all evolved from a school of research called experimental economics, in which economists pose problems to groups of people-- often in auction or market emulations-- and then see how people behave and how they interact.

It's a classic mode of testing a theory experimentally, and it often shows that the theory needs adjustment. Moving these experiments into the MRI machine is a way of moving into the black box where the decisions actually occur. What it is sure to show is how emotion interacts with rationality.

This type of thinking is becoming pervasive in the arts and humanities as well as in the social sciences. There are now people trying to understand how artistic appreciation is registered in the brain. Actually, they're making more progress than the social scientists, because their problem is easier: it involves only subjective experience and not interpersonal interaction. Meanwhile, at Caltech, our philosophers are becoming interested in how the brain works, while our neuroscientists are becoming interested in consciousness-- a confluence of investigation that I think has revolutionary possibilities.

I thought I would tell you a little bit about one of the most interesting people working at this intersection of experiment and social science at Caltech-- Jean Ensminger, a cultural anthropologist we hired recently. She came only last year, after we had spent years searching for an anthropologist who made sense at Caltech, where we have a very experimentally oriented social science group.

And actually, if any of you read The Wall Street Journal, you might have read about her work a month or so ago, when they featured it. What she and a small band of others have been doing is running experiments to understand the choice behavior of people in different types of societies.

Jean had worked in a village in rural Kenya, so she started there. The experiment she did had to be very simple, because this is a pre-literate population, so it was about as simple as you can get. Give a person some money, about a day's wage-- and they actually use real money-- and ask them to share it with another person. Both of them know the amount of money involved. If the second person does not accept the gift, then neither gets anything. But if the gift is accepted, both keep their shares.

So say Player 1 gets $10 and offers $1. Player 2 may feel that Player 1 is being stingy and, out of a sense of fairness, refuse the offer, effectively punishing Player 1 at the cost of giving up the $1. But if Player 1 offers $5, then Player 2 is likely to accept, and both will be $5 richer.
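The payoff rules of this sharing game (known in experimental economics as the ultimatum game) fit in a few lines. This is only an illustrative sketch: the function names and the responder's 20% fairness threshold are hypothetical assumptions, not parameters taken from Ensminger's experiments.

```python
def ultimatum_payoffs(pot: float, offer: float, accepted: bool) -> tuple[float, float]:
    """Return (Player 1, Player 2) payoffs for one round of the sharing game."""
    if not 0 <= offer <= pot:
        raise ValueError("offer must be between 0 and the pot")
    if accepted:
        # Player 1 keeps whatever was not offered; Player 2 keeps the offer.
        return pot - offer, offer
    # A rejection means neither player gets anything.
    return 0.0, 0.0


def responder_accepts(pot: float, offer: float, min_share: float = 0.2) -> bool:
    """Hypothetical fairness rule: accept only offers of at least min_share of the pot."""
    if pot <= 0:
        raise ValueError("pot must be positive")
    return offer / pot >= min_share


# The two cases from the talk, with a $10 pot:
for offer in (1, 5):
    accepted = responder_accepts(10, offer)
    print(offer, accepted, ultimatum_payoffs(10, offer, accepted))
```

Under the assumed threshold, the $1 offer is refused and both players get nothing, while the $5 offer is accepted and each player ends up $5 richer.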

Now, do this experiment in a subsistence community in Kenya, in a small town in Missouri, in a hunter-gatherer community in Tanzania, in fact, in some 15 different settings. What you come up with tells a lot about how societies work and how individuals approach each other.

In the least developed communities, Player 1 offers the smallest percentage of the money. In an American setting, the offer is close to a 50/50 split. One conclusion is that being in a market society leads to fairness, if not altruism. What would Karl Marx think of that outcome?

Sam Bowles, who's involved in such work, says, quote, "Many people thought markets would make people selfish and amoral. That view is at least too simple, if not just plain wrong." Jean Ensminger says, "The most altruistic and trusting societies are those that are the most market-oriented." And another commentator said that assumptions about trust are built into a market economy.

Contrast these conclusions with the conclusions that the Enron scandal suggests. Enron used the trust that we have in markets to convince investors that they should put their money in Enron stock. To have doubted their veracity took a deeply suspicious analyst. But trusting is the necessary response of people in a market economy, because without trust the market could not exist. The alternative to trust would be perfect knowledge, and there's no way to get that. In fact, as I noted earlier, if you do have a deep knowledge of a company, then you become an insider.

This experiment that I described, and other experiments like it, are putting some meat on the bones of concepts like trust. They define such vague concepts in an experimental setting, making them operational terms like the many others used in science. For instance, other investigators have been looking at what people do when they act cooperatively.

This has generated the unlikely concept of altruistic punishment. Members of a group will punish those in the group for not acting reasonably, even if the punisher gains nothing by the punishment. I've been skeptical of what has been a controlling notion in biology-- that is, the notion of the selfish gene. It's a notion which derives from the belief that a person will act toward another person in altruistic ways if that other person is a blood relative.

And the idea is, that we act to favor our own genes because the genes are driving us to that behavior. I see these experimental economics results raising a very different paradigm, and one that strikes me as more meaningful. They're perhaps not contradictory, but I wonder whether the selfish gene will ultimately succumb to notions of trust and cooperation among relatives.

This talk has been an unusual one for me to give. It came about because I found myself so often facing the issue of trust in my dealings with the world. I hope that I have made all of you just a little more aware of this central element of human relations. At the same time, I hope I've raised the question of whether such a fundamental concept of trust may not soon be a subject of scientific study.

As a university president, I find myself thinking about the directions of scientific investigation, and I think I see that an important direction is the serious encroachment of scientific thinking into provinces where it previously could not be applied. I find this exciting, especially if it leads to more effective human interrelations. And I thank you all very much for your attention.

[APPLAUSE]

MODERATOR: Thank you, Professor Baltimore. And he's agreed to take questions from the audience. I think there are some microphones around, and we can have people come up.

AUDIENCE: Thank you, Professor Baltimore. I would like to get your opinion regarding altruism amongst one's relatives. I'm a recent postdoc, and I feel that perhaps the greatest erosional force that I worry about contributing to the demise of trust within the sciences, derives not from the fear of incorrect or even fictitious data, but rather relates to communicating valid findings, and is derivative of the incredible pressure put upon researchers by competition for recognition, publication priority, and funding.

In short, it seems that nothing is to be trusted to one's colleagues, nothing is to be shared, until it is published or at the very least in press in a journal with a very rapid turnaround time. Do you have any comments about what we, as a scientific community, can do to stem this tide?

BALTIMORE: That's a very difficult question. I recognize that it is. And it is difficult because of the very strong competition that exists among scientific groups today, something which is easy to rue, but very hard to do anything about.

When I grew up in science, over the last 40 years, it was the ethic of the times to talk about anything you were doing-- published or not. And I remember being very annoyed with a few scientists who never seemed to talk about anything but published data or data very soon to be published. Because, why go to a talk if you can read it? You go to a talk to try to get either a different kind of synthesis or to get more contemporary, and maybe less certain, information.

And I agree, it's harder to get that today. It's harder to find people who are willing to talk about what they're doing, rather than what they've already done. To talk about things which are a little less certain, rather than the things that they've already put their stamp on and put into print. And it is partly-- maybe even largely-- a matter of not wanting the quote "competition" to know what is going on.

It's a sad commentary on science, when it reaches that point. Because we presumably grew up with the notion that science is a collaborative activity, and that competition-- although necessary and humanly understandable-- shouldn't be the driving force. But I'm afraid, with the number of scientists involved, for instance, in the biological sciences-- which I know best-- it's very hard to maintain the kind of civility that you'd like to maintain.

So I think, with a community of scientists this size, we lose something in effectiveness through incivility, and I don't know how to change it except to behave differently, and to try to prove to people that you can be effective and still be open. And I believe that's correct. But I can tell you that today, when I go to the people in my lab and say, I'd like to talk about the work you're doing at a meeting, they say, well, let's wait until it's published, or let's wait until it's written up. And I understand why they're saying it, but it certainly doesn't make me happy.

So I haven't helped you, but I've agreed with you. And maybe to too great a length. Yes?

AUDIENCE: In the early 80s, there was fairly substantial faculty controversy over the founding of the Whitehead-- a connected, but autonomous institution. Could you comment on the Whitehead as a case study of the role of trust in building such an unusual, and yet, over time, tremendously successful organization?

BALTIMORE: Yes, I can. I think the reason that the Whitehead Institute-- and I was talking with some people about it earlier today-- worked so well, was that my interest was in building an institution that would last. And an institution that lasts can't be focused on an individual. It has to be focused on a process which guarantees its continued excellence.

And I think it was putting that process in place, through the affiliation agreement that we had with MIT as well as through the way we worked it out in practice-- because you can never imagine everything that's going to happen-- that made it work.

And so I'm a great believer that you have to put in place processes which are the underlying strength of institutions. And what Whitehead shows is that those processes can be evolved very rapidly. They don't have to be inherited from your forefathers. You don't have to be Harvard University to have processes in place which can guarantee the strength of an institution.

AUDIENCE: I don't know exactly when, but at some time in the future it should be possible for perhaps a single graduate student or a postdoc to create, in a university laboratory, some kind of pathogen that, if let loose, might cause very grave harm, kill very many people, or worse. Right now, we can sort of trust that maybe that's not happening. But I'm wondering, what can we do in the future?

BALTIMORE: That's a good question, because I think you've put it in the right context. Today, we are not yet to the point where we have to worry about it terribly. What we're in fact discovering is that pathogenesis is a very complicated concept, and involves the interaction of lots of different components. And so it's not easy to modify it and make things worse.

But we'll understand it well enough, and we'll figure out how to do it. And in fact, in some very simplistic ways, the Russians may have actually been trying to do that in the 80s. But I think what's going on now, which is to try to register the laboratories that are involved in work with pathogens, and to keep some kind of surveillance of what's going on, may be a necessary thing. Doesn't make me happy. But on the other hand, I think we really do have to watch out.

Your suggestion was that a single individual working on their own in a laboratory might be sufficient to do this, and the implication is that nobody around would know what they're doing. So the second thing is openness about what people are doing. The most dangerous thing is secrecy. Now, I really believe that we're better off in dealing with pathogens and whatever to have openness about what's being done rather than secrecy.

Previously, we had secrecy. Biological warfare was done behind barriers of secrecy. Nobody knew what was going on. Turns out, not a lot was going on, but no one knew what it was. Today, we have openness about biological warfare research in principle. But our government today is moving to increased secrecy. You can see it in the headlines in the papers every day. And that could put us right back where we were, but with much more powerful tools-- tools of recombinant DNA research-- that will allow, in principle, the production of more pathogenic organisms.

I will remind you that the anthrax that we saw being distributed through the mails probably came from a laboratory. We don't really know where it came from, but probably came from fairly expert manufacture. Yes?

AUDIENCE: New York Times, front page today, top right corner, headline-- the Pentagon wants to start an office, is admitting to starting an office, to focus or fabricate information to motivate support, inside and outside the US, for their war on terrorism, as they call it. Is this a loss of naivety on the side of the public? Because, obviously, if I were the Pentagon, I would try to do that.

[LAUGHTER]

Why are they stating that openly to everyone? Do they want to make it legitimate? Do they want to create a climate where things like that are more tolerated? At the same time, they want more observation, more technology to observe what people are doing. What is going on? I mean--

BALTIMORE: Right. I'm shocked! The Pentagon is putting out disinformation!

[LAUGHTER]

I agree with you completely in the implication of your question. That is, who should be surprised that they're doing it? No one. It's been done for years. Every organization puts out disinformation. Enron, to the fore. And why was there an article? I think because a couple of hotshot reporters suddenly had evidence that it was happening, and wrote an article about it. I was not at all surprised to discover that was going on. But it doesn't make me happy to know that the Pentagon is trying to manipulate the press-- particularly the foreign press. But, no, I'm not surprised.

AUDIENCE: Trust being, sometimes, well, more often than not, a delicate issue of balance, people or institutions find themselves in positions where they have to seek trust from different sides. For instance, a university strives for trust from the parents who are sending their kids there, as well as from the kids. Sometimes the things you feel you should do to gain the trust of these sides may contradict each other, and sometimes they may not.

So you have to strike a balance to gain trust from both sides. Can you comment, or give an example of how Caltech is dealing with that issue and keeping the balance? I mean, in the sense of reassuring the parents that the kids are taken care of and their welfare is assured, and assuring the kids that they have room to grow, that they have room to unfold themselves?

BALTIMORE: You've hit on one of the stickiest problems that we have at Caltech, probably that all institutions have, and that we're just now trying to figure out how to deal with. I'll put it a different way-- how do you allow students to have autonomy in the pursuit of their education, and at the same time guarantee both their safety and their growth as individuals?

And it's a delicate balance. We, in the United States, for instance, generally have students in most universities in some kind of a dormitory housing situation, particularly as freshmen. And I know that MIT is going to house all of its freshmen in dormitories. Abroad, people go to college and they live in apartments. And they don't live in controlled circumstances. They make all their own decisions.

So there are various sorts of models of how you take the responsibility that's being handed to you by people sending their children to college. And all I can say is that I'm trying to wrestle with that balance today, myself, and I don't know where it sits. But I do know it's a changing societal framework that we're living with. It has changed enormously from the time when I was in college, when all of our activities were second-guessed.

But I don't think we've found the right mix, particularly with the potentially self-destructive things that are available in our society today-- drugs and alcohol being the two major ones. It's very difficult to know where to strike that balance. So another question I haven't answered.

MODERATOR: One more question, I think, and then we'll go.

AUDIENCE: You use the word "trust" to cover everything from someone possibly being dishonest to not checking calculations enough. That goes beyond what I would use it for. I would say belief might come into that. Or you could use trust, but the meaning of it spreads out in different directions.

But what really made me wonder was you said that decisions would, in the next 10 years, be greatly better understood by study of the brain. If you are trying to appoint someone to a job, say, where you have all different kinds of trust in them-- from moral, to believing they have talent, to believing they have certain qualities of character that will do the job well. It's such a tremendously complex thing.

How can you read things from the state of the brain that are really going to get you to the heart of that? Particularly considering that if I am looking into your brain, and deciding that you've made a decision because you have certain attitudes about things outside yourself-- the state of the world, the state of another person-- I have to judge whether you're right as well. So it seems like there's a gap there in what can be understood by studying the brain.

BALTIMORE: I think we're mixing up two things. I was suggesting that by studying the brain, we could begin to understand the concepts that are involved in interpersonal relations, and how those are translated into actual brain activity, which is what we can measure today. We'll measure more than that in the future.

I wasn't suggesting that we use that as a diagnostic tool to figure out whether an individual is trustworthy or not. I suppose it might come to that, but I really hadn't even thought of that. And I was certainly not suggesting that that's the direction we're going in. I was talking much more about how you scientifically investigate the elusive concepts of social science.

MODERATOR: I think we should thank Professor Baltimore once more, and there's a reception out in the lobby.

[APPLAUSE]