Barbara Liskov, “Practical Byzantine Fault Tolerance” - MIT EECS Colloquium (12/3/2001)

[MUSIC PLAYING]

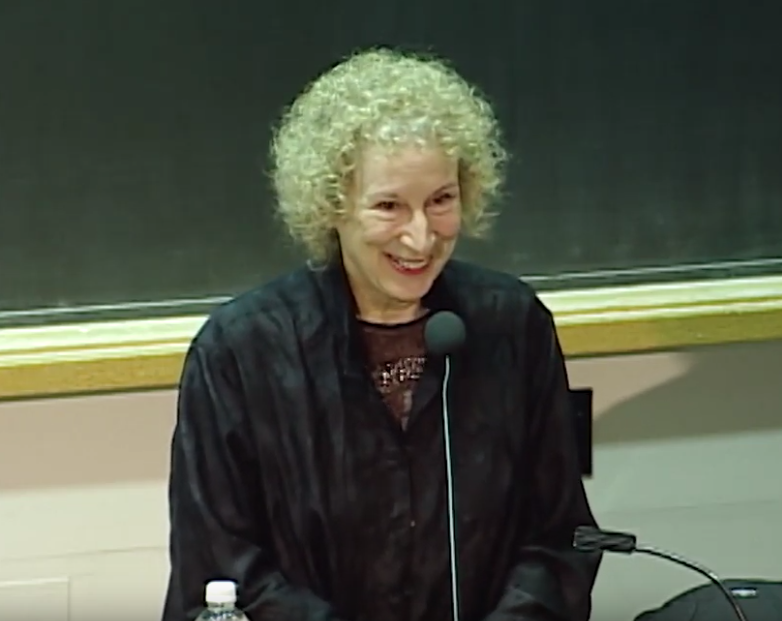

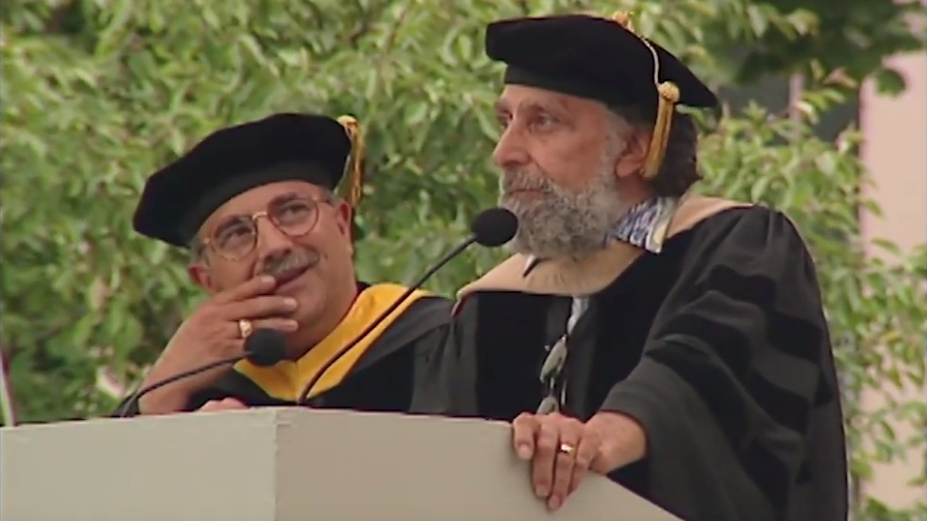

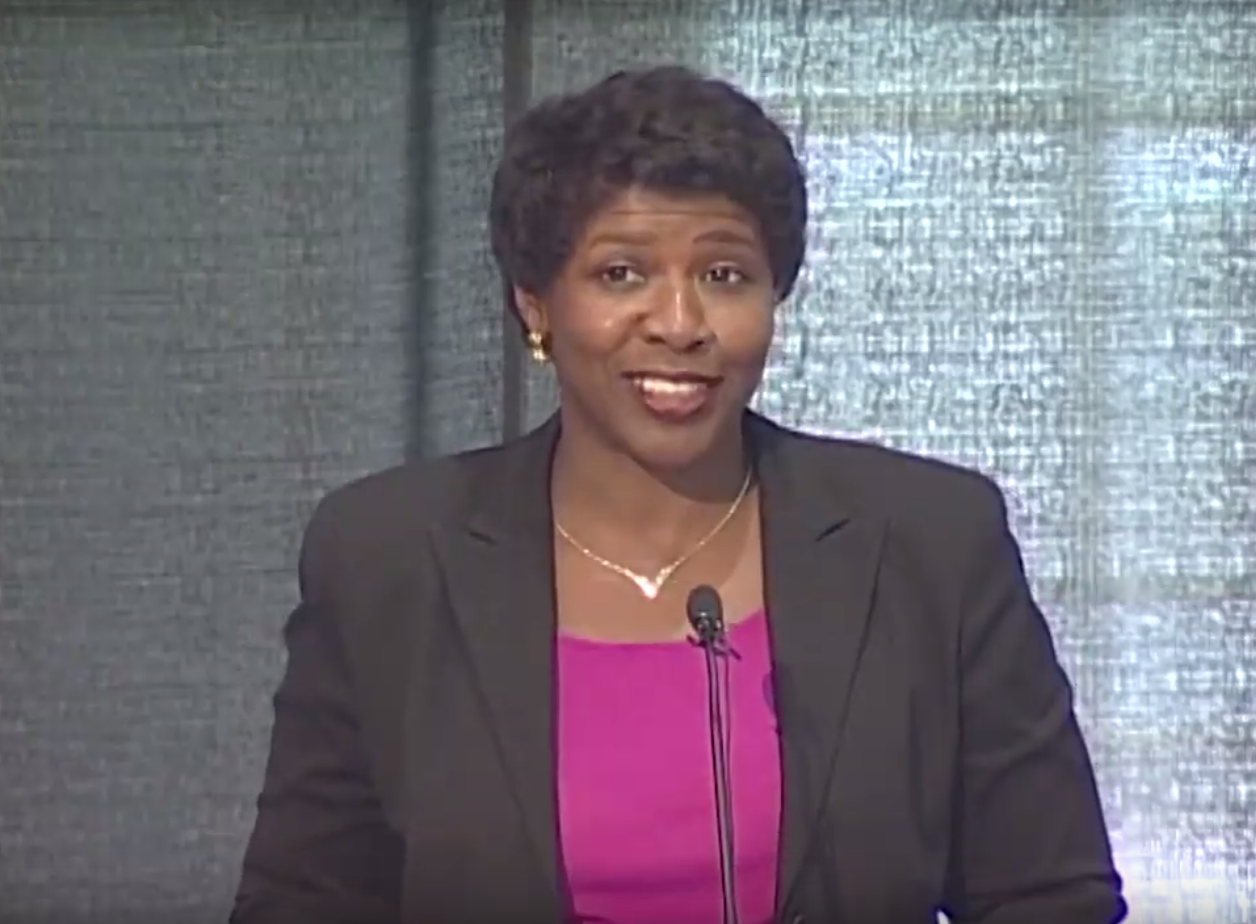

GUTTAG: I'm John Guttag. And today, it's my pleasure to introduce the speaker, Professor Barbara Liskov, who did not give me her bio. So I'm going to have to fake it. Barbara has been on the faculty for, at least, longer than I have. And I've been here about 25 years or so I've been on the faculty-- 26 maybe. She started when she was 15. And--

[LAUGHTER]

--is a well-respected expert on programming languages, distributed systems, fault tolerance systems, and a host of other topics. And I think with that, I'll just turn it over to you--

LISKOV: Thank you.

GUTTAG: --and turn off my microphone.

LISKOV: Okay. So I'm going to talk today about providing secure services on the internet. And as I'm sure you're all aware, we are becoming more and more dependent on information that's stored online and accessed over the internet. Truly critical information is kept online. And it's very important that this information be stored securely.

And I'm particularly interested in two properties of security. So this is not exactly the standard definition of security. I'd like the information that you store to be accessible to you, whatever you want it. And furthermore, I want it to be uncorrupted so that you can actually use it.

And I'd like these properties to be preserved for you in spite of all the kinds of problems that can happen. And in particular, I'm interested in hardware failures, software errors, and malicious attacks. And as you know, malicious attacks particularly are becoming more and more something that happens on the internet. Okay.

So here's some examples of the kind of online information that I'm concerned about. You might have a log of your credit card entries being kept by your credit card company. And it's very important that that information not be corrupted so that, for example, the charges that you think you have incurred don't change mysteriously.

Another example is a key distribution service that's used that allows you to look up somebody's name and get back a public key that you can then use to access some information. Yet another example is DNS, the domain name server, which is the source of all addressing on the internet. And if the DNA-- DNS name servers are corrupted, you won't be able to find anybody that you would like to talk to on the internet.

And then, another example is simply a company's website. For example, recently I read in the news that the Staples website had been hacked into. And everybody that went to look at the Staples website was immediately forwarded to the website of a competitor. So you may not think that the company website is such an important thing. But even there, corruption of data through a malicious attack can be a big problem.

And as a matter of fact, these problems are becoming increasingly evident. And what you see in the news is just a small sort of tip of the iceberg of what's really going on, because when critical data is kept online, the owners of this data are very interested in not having you know that there's been an attack that's caused that data to become corrupted.

Now, my concern in this talk is going to be what's called data integrity. In other words, I want to make sure your data is available. And it's uncorrupted. There are a whole bunch of other security issues that are obviously also very important. For example, privacy-- you would like to be sure that your credit card logs can't be looked at by people that aren't supposed to see them, and access control, the ability to control who has the right to look at certain information. I'm not going to talk about those.

So as I mentioned before, there are sort of three main reasons why integrity might be compromised. First of all, hardware might fail. But in addition, there can be bugs in your software. And finally, we can have malicious attacks. And the way that you survive these kinds of problems is by replication.

So replication-- the basic idea is that if one of the copies of the information is hacked into or destroyed, you can still provide access to it, because there are all those other copies. And of course, for this to work, this means that the replicas that make up the replicated system are going to have to carry out some kind of protocol that guarantees that they are in sync with one another so that the information you get from the backup copy if the primary fails is up to date with respect to what the primary has stored.

Now, traditional fault-tolerance, which was a topic of great research interest in the '80s, was concerned with a particular sort of replication algorithm that was designed to define-- to survive what are called failstop failures. So failstop failures are a very simple kind of failure compared to what we'll be talking about in this talk, because the idea here is that when a failure happens, the way it manifests itself is that things simply stop.

So for example, a computer-- something goes wrong with the hardware. And the computer simply stops functioning. Or well, so that's an example of a failstop failure. And these failures are relatively easy to cope with, because you essentially have to worry only about the question of, what do I do if I can't hear from somebody? And you don't have to worry about the problem of, I'm hearing from this entity. But it's not telling me the right stuff.

So in the '80s, people concentrated on failstop failures. And there were actually two reasons for this. One is at that time, hardware wasn't all that reliable. And so this was considered to be sort of the major thing you had to be concerned about. And the other reason was that this seemed hard enough. We didn't understand how to build replication systems that could survive even these simple failures. And so tackling the much more difficult failures that I'm going to talk about today was sort of way beyond what we could think about doing.

To survive simple failstop failures, you require 2f+1 replicas. And I'm going to show you why that's true. For example, to survive one failure, you're going to need three replicas. And then, when you run your operations, each operation is going to have to sort of check in with at least two of those replicas. And we're going to have to ensure that these operations are totally ordered so that if you, say, modify the replicated service twice, the second replication-- the second modification doesn't sort of forget what happened in the first one. And that's going to require a two-phase protocol.

So in the case of failstop failures, you can get by with 2f+1 1 replicas. And you can carry out a two-phase protocol. And I have a little example here to show you why-- sort of what's going on and why you need 2f+1 replicas. So for example, this green circle might represent the operation that executes right now. And it happens to manage to be carried out at these two blue replicas.

And then, at some time in the future, another operation is carried out. And for some reason, it isn't able to communicate with this replica. But instead-- I guess I should be using-- I see. Yes, that wasn't really very effective, was it?

[LAUGHTER]

All right. Now, if I just knew how to use this--

MAN 1: Just try pointing to the red part of the screen.

LISKOV: Okay.

MAN 2: Yeah, you're pointing at yourself.

LISKOV: Oh, okay. So in this green circle is where the first operation was executed. And for some reason, it only executed those two blue replicas. And then, at a later time, the red operation was executed. And for some reason, it wasn't able to communicate with this replica but was able to be carried out with these two replicas.

The point is that because we had 2f+1 replicas and-- or rather three replicas in this example-- and because each operation happened at two of them, we can be certain that the two operations overlap in one place. And that's going to be sufficient for us to make sure that the later operation reflects the effects of the earlier operation, provided we carry out the proper protocol.

So for example, supposing this was a key distribution center and system, and what was happening was for some reason, two entries were being made for the very same name and a very close period of time. So the green operation inserted some public key for that name. And then, a few seconds later, the red one inserted a different later public key for the very same name. Or rather, let me rephrase that.

[LAUGHS]

Supposing that the first one did it for Name 1 and the second one did it for Name 2, and what's going on in these operations is you're rewriting the database to reflect the most current set of entries, you want to be sure that that second operation writes back the right result for the first operation, as well as the right result for itself. So that's an example of why we need a two-phase protocol. We have to have one phase that finds out what happened before and a second phase that reflects that, as well as the changes that are being made.

I'm not going to go into the various algorithms that people invented to carry out this kind of simple protocol. I just wanted to point out primarily that you need 2f+1 replicas and a two-phase protocol. Yes.

AUDIENCE: Why is it that f+2 won't work?

LISKOV: That f+2 won't work--

[LAUGHS]

--so if we had f+2, then in the case of two failures, we'd have four replicas. Now, so then, you have to ask the question, well, how many replicas are involved in each carrying out of the operation, because the requirement is going to be that you have these overlapping sets in each subsequent operation?

So with four replicas, you would only be able to survive one failure, because you'd have to carry out the operations at-- carrying out at two replicas wouldn't be sufficient to get that overlapping property. So--

AUDIENCE: So you know which one's failed, though, don't you?

LISKOV: No, you don't necessarily know that.

AUDIENCE: Oh, okay.

LISKOV: Okay. What you know is that these are the ones I was able to talk to right now. And you would like to be sure that at least one of them knows about the most recent things that have happened in the past.

AUDIENCE: I thought because it was failstop you know which one's failed.

LISKOV: No, because in the case of a system with-- in the internet, you can't really tell a difference between a failure and a lack of a response.

AUDIENCE: Okay.

LISKOV: Okay. Okay. So all right. So that's how things were in the '80s. And the problem is that those failstop failures are only the sort of the tip of the iceberg. And what we'd really like is replication algorithms to survive much more serious failures. Those failstop failures really only correspond to hardware failures, where the system effectively crashes.

And it doesn't match what happens when you have a software error, which can sometimes cause your system to fail and yet continue to run. And it also doesn't cover what happens when there's a malicious attack, like that example of Staples that I mentioned a few minutes ago.

So those kinds of failures can cause your system to stop acting properly and yet continue to carry out a protocol that can fool the other replicas into thinking that that node is perfectly okay. And what happens when you start to think about failures like this is it becomes very Byzantine.

You sort of have to think that everybody's scheming to make things misbehave in the sort of worst possible way. Whatever answer you get from somebody is a lie. And therefore, you have to have replica-- you have to have replication techniques that are capable of surviving in spite of all those lies, provided there haven't been too many of them.

Okay. And these are, in fact, the majority of faults today. They may not manifest themselves as Byzantine failures. But there's no doubt that failures due to software errors and malicious attacks are the primary reason that we have failures today. Okay. So what I'm going to do today is I'm going to talk about how you can survive Byzantine failures. I'm going to describe a new replication algorithm to you that survives Byzantine failures and has very good survival properties. And furthermore, it's practical, performs well. And I'll show you a little bit of performance evaluation results to show you what's going on.

All right. So the-- in contrast to the simple failstop failures that I talked about a minute ago, when you have Byzantine failures, you require more replicas and more phases in your replication protocol. So you require 3f+1 replicas to survive f failures. So for example, to survive one failure, you now need four replicas, not three. And furthermore, your replication protocol has to have three phases in it, not two phases. Okay. And when we started this work, it was already known that you needed 3f+1 replicas to survive that failures. That had been proved earlier.

Our algorithm is more efficient than algorithms that people have invented before. So-- and I don't know about a minimality proof that would show you require three phases, though I certainly haven't been able to think of a way of doing it with fewer. Okay. Now, the algorithm that I'm going to describe for you provides what's called state machine replication. And this is what you really want. The idea is that you have a service. An arbitrary service typically provides access to its services by means of operations that clients can call.

So for example, in a file system, you can ask to read and write entire files. You can also read and write directories, rename, and so forth. And in a key distribution center, you could store a new pair. You could read a pair and so on. So anything that you think about can be phrased in this form. And really what I'm talking about here is data abstraction. So we're talking about arbitrary services that provide operations that allow users to make use of their services at the level of abstraction that makes sense for that application.

And one of the nice things about state machine replication, in addition to the fact that it sort of matches the way that people really do their business, is that it will allow us to provide service in spite of Byzantine-faulty clients. Now, a client-- this is the node that's trying to use the service-- if the client is storing, for example, bad data in a file, we can't do anything about that. Access controls the mechanism that you would use to try and at least guarantee that whoever is writing that file has the right to write it.

But what we can do with state machine replication is we can make sure that the client doesn't sort of get stopped halfway through and leave the system in such a bad state with its invariance broken and so forth that you're sort of not able to continue your business. So it's really very nice to be able to provide state machine replication rather than the simpler thing that many people have worked on, which is simply the ability to read and write single words.

With reading and writing single words, you have to implement, in some sense, the higher level semantics at the client machine itself. And then, you interact with the service by doing reads and writes in sequence. Okay. So here's basically-- I'm just going to try and show you here what it is we have to do in this algorithm in order to make it work.

So in state machine replication, what you do is you assume that the operations are deterministic. In fact, you require them to be deterministic. And furthermore, you make sure that the replicas all start up at the beginning of time in the same state. And then, assuming that the replicas execute the same requests in the same order, OK, you will know that correct replicas will produce identical results.

So the key thing here is this thing here, because what this is telling me is what my protocol has to accomplish. I have to be sure in my protocol to guarantee that all the non-faulty replicas execute the same operations in the same order.

AUDIENCE: Is it obvious what deterministic should mean in this context? It's not obvious to me.

LISKOV: It's the usual thing. In other words, if you call that operation with the same arguments, I'm sure there's a trick here somewhere, okay, that it should produce the same results. So for example, file systems are sort of deterministic, except that whenever you read a file, you get this time last modified or time last read that gets sent.

AUDIENCE: Right.

LISKOV: That's a non-deterministic effect. And so to do a simple file system, we're going to have to play some sort of trick that makes sure that we-- that all the replicas will set the same time last read. Okay. So there's nothing unusual going on here. Determinism is the same old thing we're accustomed to. To rephrase that, if you're in the same state and you operate the same X operation, you should come out with the same result.

AUDIENCE: At some level of abstraction.

LISKOV: At some level of abstraction-- definitely at a level of abstraction. Okay. So the key is going to be that thing I highlighted. That's what the protocol has to accomplish. And it's not easy. Okay. So what are the clients do? I'm going to start talking about them.

First of all, they carry out a little protocol of their own. They send their request to all the replicas. And then, they wait for f+1 identical replies. And the reason that works is because we're talking about a system that can survive f failures. Okay. So you have to assume that there are most f faulty replicas in the system at this moment.

So f of these replies might be total y's. But one of them is coming from a non-faulty replica. And therefore, we know that we have one replica that said, this is the answer. And we can rely on that. Yeah.

AUDIENCE: If one of them is fault and one of them is good at this stage of the protocol, how do you know which--

LISKOV: I haven't showed you the protocol. Okay. All I'm showing you is what the client does. So yes, we have to ensure that the answer given us by that one non-faulty replica is a true answer. So we're going to need a protocol that will ensure that that's correct.

Now, remember it's identical answers. So we're going to-- we can't rely on any f of these guys. But we can rely on the group of them, because one of them is not telling us a lie. Okay. But I haven't showed you the protocol yet. I just showed you what the client does. Okay.

Okay. So what do the replicas do? Well, they have to carry out a protocol that ensures that those replies from the non-faulty replicas are actually correct. And that requires that enough replicas process each request, right, to ensure that, you know, the non-faulty replicas are processing the same request in the same order.

And in particular, to sort of answer your question, a non-faulty replica will never send an answer to a request that it hasn't achieved by carrying out this protocol. Okay. So it doesn't really matter what those liars said, because we know we can rely-- if we have f+1 answers, at least one must be honest. And the protocol was enough to ensure that that was the right answer.

Okay. So now, I'm going to go into the heart of the protocol and tell you in a sort of a hand wavy sense, you know, how we manage to do this. So the first thing I want to point out is that we are using a particular kind of protocol kind of primary backup scheme.

And what that means is that at any moment in time, the group of replicas as a whole is in what's called a view. A view in this system is a very simple thing. It just means that in this view, one of the replicas acts as the primary. And the remainder are backups.

And actually, we achieve this in a very simple way. A view has a view number associated with it. And the primary is simply the node whose replica ID modulo the view number is 1. You know, so we choose it in a very simple minded way. Okay. So the main point is that the view designates who the primary is.

And the primary is the one that's going to determine the ordering of the requests. The advantage of a primary scheme is that one guy gets to choose the order. Otherwise, we'd have to have some sort of agreement protocol in which we'd all be choosing the orders and somehow voting on it. And that's more expensive.

So the primary chooses the order. But the problem is in a system like this, the primary might be a liar. And so the order that it's choosing may not be the proper order. And therefore, the backups have to ensure that the primary behaves correctly. So the backups are checking up on what the primary is doing. If they don't like the order that the primary is suggesting, they won't go along with it. And then, that will trigger what's called a view change, which will go to a higher view number. And a different node will become the primary in that new view.

So the way we survive failures in this system is by doing these view changes, which change who the primary is. And the system will work as long as no more than f replicas are faulty. Okay. So now, the system is based on two notions of quorums and certificates.

A quorum-- I actually showed you a quorum earlier when I talked about the simpler system. Yes, Ron.

AUDIENCE: I want to make sure I understood the model. Are you talking also about client failures or malicious clients or just malicious servers?

LISKOV: I'm really only talking about malicious servers.

AUDIENCE: The client tries to mess up the database.

LISKOV: The client can mess up the database in some ways and not in others. Remember, it could write bad data into the file. And we'd have to solve that problem through access control. But the client isn't able to cause file reads to misbehave in some fundamental sense, because it doesn't have the ability to execute a file read. It just is able to ask for a file read to happen or a file write.

AUDIENCE: Do you think the writes can cause inconsistencies in ways that--

LISKOV: He can't cause inconsistencies. He could write bad data to a file. And this would show up consistently at all the replicas. He can't cause a file to become inconsistent with the replicas. And that's precisely what you're getting out of the fact that this is a state machine replication system. Okay. So we can sort of survive faulty clients, but not really, because of course, it's very bad if you write bad data to a file. But that's no different from an unreplicated system in which somebody writes bad data to a file.

Okay. So in my earlier discussion of the non-Byzantine failure case, remember we had 2f+1 replicas. And every operation had to be carried out at two of them, or rather at f+1 of them. And that's what's called a quorum. So a quorum in these systems is a set of replicas that's big enough that it overlaps with the next set of replicas that carries out the next operation.

Now, in this system, quorums have to have at least 2f+1 replicas. And to understand why that's true, we need to look at what happens when you execute an operation. And so again, what I'm showing you here is a system with one-- that will survive one failure. So it's got four replicas in it. And we have our little green operation there that executed at these three replicas. Okay. And so that was a quorum. And it succeeded in executing.

And then, we executed our second-- doesn't really matter which is which-- operation later, using a different set of three replicas. Okay. Now, since we have 2f+1 replicas in a quorum, this means two quorums will intersect in f+1 replicas. Okay. And that's very important, because at any moment in time, one replica could be faulty. Okay. So that guarantees the quorums always intersect in at least one non-faulty replica. And that's what it is that guarantees that whatever happened in the earlier operation can be reflected in the later operation, because that truth will be carried through the one non-faulty replica.

Okay. Another way of looking at this picture is think about what happened when we executed this operation here. It looked like three replicas carried it out. But this gray guy might very well have been a liar. Okay. And the blue guy that we didn't hear from might simply have been a slow, you know, but non-faulty replica. Okay. So the fact that we got a quorum doesn't mean that everybody in the quorum is telling us the truth. And so we have to have big enough quorums to take care of that problem.

AUDIENCE: Is the operation initially to talk to everybody, or do they talk to a quorum like [INAUDIBLE]?

LISKOV: They actually talk to everybody. And I'm going to-- in the next slide, I'm going to walk through how we sort of carry out this protocol. Okay. So that's what a quorum is. And they're big enough so that quorums always intersect in at least one non-faulty replica.

Okay. The other thing that we use in the algorithm is what's called a quorum certificate. And that's just a set of messages that a replica accumulates from a quorum-- identical messages from a quorum. So all messages in the quorum will agree on the same statement. And I'm going to show you what these statements are like when I go to the next slide.

Okay. So here's how we order requests. Here, we have a request coming in from a client. And the client sends that request to the primary. Now, in reality, the client might send it to some of the other replicas also. But it goes to the primary. And the primary is responsible for choosing the sequence number.

So the sequence number for the requests is going to be the order in which they're executed. So the primary receives this request. It says, this is going to be request 123. Okay. And it broadcasts that information to all the other replicas. So that's what's happening in this part of the picture.

Now, this little thing down here indicates that the whole thing is encrypted. Okay. And in fact, we use cryptography on every single message that's exchanged in this system. One thing that happens is even this request, m, that's coming from the client is encrypted with a secret key that is known to the replicas for that client.

And here, we're using the secret key that the primary uses to communicate with the other members of the group. Okay. In fact, we use what's called an authenticator there, because there's a different secret key for every member of the group that the client-- the primary is talking to. And we're going to broadcast this message.

And we're using secret key cryptography here, because it's much cheaper than public key cryptography. But these secret keys need to be changed periodically. And so they're backed up with public keys and private keys that can be used to exchange new keys.

Okay. So the primary picks the sequence number for that message and ships it off to everybody. And it's all encrypted so that we can make sure that when it arrives, it's uncorrupted. And it came from the primary and so forth. Okay. Now, when a replica receives one of those messages, it carefully checks it over to make sure that it likes it. And it will never-- a correct replica will never accept two messages that pick the same sequence message-- sequence number for different client requests.

So if a replica ever receives from a primary another thing saying here's another number 127, it won't accept the second one. Okay. And it also checks other things about it and makes sure that the client message is really uncorrupted and so forth and so on. Yeah.

AUDIENCE: Are the sequence numbers global or just within a view?

LISKOV: They're global. They're global. So the view change is going to have to preserve the sequence numbers going forward. Okay. All right. So the primary has chosen that number. And now, let's imagine that it arrived at some replica. And the replica looked at it. And everything looked okay. It had never received a 127 before. The client message was in good condition and so forth.

So then, what it does is it immediately sends out messages to every other replica, saying, I'm happy with that number for this client request. So that's what's going on here. A replica is sending out-- in fact, what I'm showing you here is various replicas are sending out their acceptances. Each replica is making its decision independently. Each one is broadcasting its decision to the other replicas in the group.

And in this particular picture, one of the replicas is shown as being failed. So it's not responding. And the primary is not bothering to do this, because it already sort of said it accepted it since it was the one that chose the number. Okay. Now, the next thing that happens is the replicas wait for these acceptance messages. And they're going to try and collect a quorum certificate containing one-- you know, I select number such and such for this client request and the others, 2f of them, saying, I accept that.

Okay. So this is what I meant by quorum certificate. They're identical in the sense that they're all agreeing on the same statement. Okay. And of course, one of these is probably coming from the replica itself. So it knows its own acceptance. And it's waiting to hear from-- it heard from the primary to sort of get itself started. And it's waiting to hear from the other-- a sufficiently large number of other replicas that they also agree.

Okay. So then when it has that, we say that the request is prepared, okay, which means that-- simply that everybody has managed-- that this replica has managed to collect enough messages that agree that it has a quorum for that prepare. Yes.

AUDIENCE: Could the primary send a different message to each backup?

LISKOV: Absolutely.

AUDIENCE: So you have to--

LISKOV: That's part of the problem.

AUDIENCE: --be able to create the prepared certificate.

LISKOV: That's right. Some of you will. You know, this replica might. But you need enough of them to create-- each of them have to create their own prepared certificate in order for us to actually carry out the request. And what Mike is getting at is sort of what's really fundamentally different about this system from the system we saw before. Anybody might be lying. The worst possible one to lie is the primary, because it can mislead the other replicas in arbitrary ways.

And at this point, all a node knows is that things looked okay to it. But there hasn't been any global agreement yet that that's all right. And so it wouldn't be appropriate to execute the message right now, because you don't know for sure that it's really going to happen. So we have to go on.

Okay. So here we are. We just found that we had a prepared certificate at some replica. So when a replica has accumulated a prepared certificate, then it sends a message out saying, I've got one. Okay. And it sends it to everybody. Okay. And again, each replica is doing this independently.

And now, a replica is waiting to see whether it can't hear from enough replicas that they all are prepared. And when it has heard about-- when it receives a quorum certificate for these prepares, then it commits the operation. Now, it knows that the replica group as a whole has agreed that this is a good thing to do.

Okay. And so at that point, it's ready to actually execute the message, send the answer back to the client. One thing is you don't execute a request until you've executed all earlier requests. And this particular protocol is actually operating asynchronously. So there can be many messages in the process of being processed. And when you finally decide that it's okay to do number 127, you'll wait at that point in case number 126 hadn't been done yet. Yeah.

AUDIENCE: So is there any specific restrictions for replicas to execute other requests in terms of the sequence number where it has to execute a sequence number [INAUDIBLE] execute--

LISKOV: Absolutely. Each replica-- non-faulty replica will execute them in order.

AUDIENCE: Okay.

LISKOV: Okay.

AUDIENCE: So--

LISKOV: So non-faulty replicas carry out this protocol without flaw. Okay. So they wait to collect the certificate. They send out the message. They wait to get the-- all those prepared certificates, those prepared messages-- they sort of create their commit certificate. Then, they wait to make sure that they execute the operation in order. They execute the operation and send the answer back to the client.

AUDIENCE: Okay. Okay. Sorry.

LISKOV: Yeah.

AUDIENCE: My other question is, what if the primary is faulty and it sends a request to f [INAUDIBLE] processes and [INAUDIBLE] processes and leave the other f there? They don't execute anything. And later on, how will they keep up with the other replicas?

LISKOV: Okay. So first of all, there was one too many numbers in your numbers.

[LAUGHS]

So if the primary is faulty, there can only be f-1 other faulty replicas. And that's important for the algorithm to work properly, because there can be f total. Basically, you were trying to ask me what happens if there are f failures? Will this algorithm be sufficient to guarantee that the correct result obtains?

AUDIENCE: Basically, I want to ask about--

LISKOV: Yeah.

AUDIENCE: --some non-faulty replicas are in some states while the others are in-- are not in the same state of these other replicas. How will they later keep up with--

LISKOV: I'll get to that in just a minute. Okay. Yeah.

AUDIENCE: [INAUDIBLE]. The primary as a single point could be indicated by the client. Am I correct?

LISKOV: Well, actually, no. But it doesn't really matter. Okay. I mean, so here's what the client actually does. There are various forms in which you can do that. It sends it to the primary. If it doesn't hear after a while, it sends it to everybody.

AUDIENCE: Okay. What if it--

LISKOV: Yeah.

AUDIENCE: --sends it to the primary--

LISKOV: Yeah.

AUDIENCE: The primary is a malicious hacker. And the primary deliberately changes-- let's say it's a [INAUDIBLE].

LISKOV: Yup.

AUDIENCE: It deliberately changes that to modify the data.

LISKOV: It can't.

AUDIENCE: And it can do it perfectly well.

LISKOV: It can't do that, because the message from the client itself is encrypted. And it not only is encrypted, but it has a client base sequence number. So the replicas can tell--

AUDIENCE: But it's encrypted [INAUDIBLE] multiple encryptions inside [INAUDIBLE].

LISKOV: If I go backwards-- I mean, I didn't show you this detail. But if you look here on-- up here, you see this sort of m there. Well, m is something that came from the client. And it was itself encrypted in a way that guaranteed that only the client could have produced that. And that by itself is not sufficient. It also has a sequence number in it that guarantees that it's the current thing the client wants to do.

AUDIENCE: That's what it has to do. Okay.

LISKOV: Yeah, so--

AUDIENCE: [INAUDIBLE]

LISKOV: So this protocol works in various forms. And one form is the client sends this to the primary. And the primary relays it. Another form is the client sends it to everybody. And all the primary has to do is say, here's the number. And here's the digest of the client message. But these are sort of little optimizations of the--

AUDIENCE: [INAUDIBLE] step that's really its function and then the distribution--

LISKOV: Yes.

AUDIENCE: --of everybody else. But you can't see in the content. I mean, you can see it.

LISKOV: It can see it. But it can't fake it. And it can't replay it. Okay. Yeah.

AUDIENCE: There was a presumption that the primary knows what the current-- next sequence on there should be something out of this.

LISKOV: Yes.

AUDIENCE: There could be conflict between the primaries.

LISKOV: At any moment in time, though, there's one primary. And if there were two views-- there can be more than one view. One would be a majority view. The other one would be the old view that was sort of failing. Replicas will only pay attention to the most recent view. So there's always at any moment in time a unique replica that's acting as the primary as far as the group as a whole is concerned.

AUDIENCE: Okay.

LISKOV: Okay. Now, I'm not actually going to tell you all the other protocols. What I can do is forward you to a paper that will contain all the gory details of the rest of-- what I showed you was sort of the normal case operation. Okay. And to complete the picture, there is a bunch of other things that have to be solved.

First of all, we have to implement the view changes. Okay. And the view changes have to be done very carefully. First of all, they have to be done in such a way that any operation that committed, where it was at all possible that the client got the answer-- we have to make sure that it sort of survives into the next view-- so the very important correctness condition there.

In addition, the view change has to be done in such a way that it doesn't interfere with liveness. So you can imagine various kinds of problems that could arise. For example, if the replicas were too fast to do a view change and suspected the primary-- or imagine that a faulty replica tried to subvert the system by doing view changes over and over again. Well, then you'd have a system that was safe. You know, it did the right thing. But it never accomplished anything at all.

So you have to have mechanisms in the view change that ensure that on the one hand, you do view changes when the primary is faulty. And on the other hand, you don't do them too often. Okay. And that-- those details are all in some papers that we've written on this mechanism.

Another thing is that we need to have a garbage collection technique in here. I didn't show you how we were accumulating all these certificates. But the fact is we're storing them into a log. And you can't let that log grow without bound. And so there has to be a way of shrinking the log periodically so that it doesn't get too big.

And this system has to also be done carefully. You actually have to carry out a protocol, where the replicas agree that it's okay to get rid of the tail of the log. Otherwise, in certain view change situations, you wouldn't be able to go ahead and form the new view. And then, the state transfer answers the question that somebody asked a moment ago.

What happens if I have a perfectly good replica, but it somehow or other wasn't in the act? You know, and so it didn't hear about what was going on. How does it bring itself up to date? And we have a very efficient state transfer protocol for bringing replicas up to date.

The big problem with bringing slow replicas up to date is that when you execute operations and delete information from the log, you have a potentially huge database that contains all the changes that have happened in the past. And you don't want to have to ship that whole database off when a slow replica needs to find out information in it.

So we have a very nice tree structure sort of hierarchical system, where at high levels of the tree, we have digests that represent all the information below them. And the leaves of the tree are the pages, whatever the smallest unit is that you want to actually transfer. And we're able to carry out a protocol for this that allows us to send just the pages that need to be sent in order to bring the slow replica up to date.

Okay. So this is what I would call the base algorithm. This is BFT, Byzantine Fault Tolerance, the base algorithm. Oh, before I go on, there are a whole bunch of optimizations. I've alluded to these. Some of these are not necessarily optimizations. They're pessimizations, because you aren't sure until you run it, you know, what's going to be better and what's going to be worse. But here are the kinds of things that you can imagine doing.

For example, it isn't really necessary for everybody to send a reply to the client. It's okay if all of but one of you send digests of the reply, because this will be sufficient to allow the client to tell whether it has identical replies. We've talked already about this request transmission. It isn't necessary for the client to send the message with the primary. And the primary relays it. The client can send every-- to everybody. And the primary can just send a digest along in the messages.

Also, in our system, we do batching. So the primary-- if you imagine a very busy primary that's getting hit with request after request after request, it doesn't actually start the protocol for each request. Instead, it collects a batch of requests and does one protocol for the bunch of them.

And then, optimistic execution-- this one, I think, is the one that's the pessimization. The idea here is that you might reply to a read request before you've actually executed, so sort of out of order. It's optimistic. And with optimistic stuff, there's always a question of whether it was worthwhile if the answer is wrong. It requires that the primary collect 2f+1 answers rather than f+1. So there's a cost for it. And sometimes you have to take it back, because it wasn't just the right thing to do. Oh.

AUDIENCE: You mentinoed in the previous slide transparency.

LISKOV: Yeah.

AUDIENCE: Can you make a precise statements about the sensitivity of this to-- tends to slow things down or deny service by a primary [INAUDIBLE] coming up and down? Is it here or not here? [INAUDIBLE] view changes-- things like that.

LISKOV: So the algorithm has in it very clever techniques for doing this sort of little dance between doing things too frequently and doing things too slowly. And if you look at Miguel's' thesis-- and I will put up a slide later saying who Miguel is-- he has a quite careful analysis of some of this. But I don't think it's at the level of what you're talking about. These systems are very hard to analyze. And I doubt that we have a precise answer to that particular question.

Okay. All right. So the system that I've described you so far, this sort of base system, has a major problem. Okay. It allows a system to provide good service, provided it has no more than f failures over the system lifetime. But systems today last for a long time. And so you know for a fact that over the lifetime of a system, there's going to be more than one failure or more than f failures.

AUDIENCE: The total lifetime or just [INAUDIBLE] at one time?

LISKOV: Over the lifetime-- okay. So we have to close that gap. And so we need a system that does exactly what Ron just said-- namely, it doesn't have more than f simultaneous failures. And we have to do this in a system-- in a world in which we can't tell who's faulty and who's not.

So the big difference-- one of the big differences in this world versus the simpler world I talked about at the beginning of the talk is you simply can't tell. A faulty replica may appear to be perfectly okay, because it's answering queries and acting normally. A non-faulty replica might appear to not be okay, because for example, there's some sort of denial of service attack. And it's not able to get its messages through right now. So you have no way of knowing a priori whether a replica is faulty or not.

So we're doing something that we call proactive recovery. The idea is we're going to periodically recover these replicas, whether they're faulty or not. And if they were faulty, the recovery procedure will correct them. Okay. And that's how we're going to get f simultaneous failures as opposed to f failures over the lifetime of system. But the recovery has to be done very carefully since if the replica isn't faulty, we want to make sure that we don't make it appear to be faulty and that cause things to fail.

And given this technique that I'm about to describe, we can provide correct service, provided no more than f failures occur in some small time window, where small is on the order of 10 to 20 minutes in the current way that we run the system. So what you're going to want to do is you're going to want to think about your system, how vulnerable it is to failures. And then, you'll select a replication number that gives you confidence that given this window, that's good enough for you.

Okay. Now, the way that we do proactive recovery is by equipping every replica with a secure co-processor. And the secure co-processor stores the replica's private key. So the idea is you can't-- basically, the idea of the secure co-processor is it's not possible to corrupt or get at the information in the secure co-processor without physical presence. You can't get there by hacking in over the internet.

Okay. So the secure co-processor stores the private key. And associated with it is a watchdog timer that's going to go off periodically and tell you when to do the proactive recovery. And furthermore, there's a read-only memory that stores the code. Okay.

So the secure co-processor, when the timer goes off, is going to restart the node. And as I said before, the recovery protocol has to be done in such a way that it works whether the replica was faulty or not. Okay. And a correct replica has to be able to keep its state and continue processing. Otherwise, you can turn a perfectly functioning system into one that doesn't function.

So I'm just going to walk through the steps here. Okay. So now, imagine that the timer went off. And the secure co-processor said to the replica, it's time to recover. Okay. So the first thing the replica gets to do is it gets to save its state-- okay-- write it to disk.

And this is a timed operation, because of course, a faulty replica might refuse to cooperate. So you simply allow enough time for a non-faulty replica to save its state. And then, you're going to recover it, whether it wants to or not. In the case of a non-faulty replica, it won't matter. It was faulty anyway. Okay.

As soon as that time period has come up, the secure co-processor causes the system to reboot. And it reloads the code from this read-only memory. And then, you can get the state off the disk. At this point, the replica has the correct code, because you took it off of this read-only memory. And if it was correct, it has the correct state, as well. If it was faulty, it has whatever garbage it had. Okay.

Now, the next thing that happens is the replica immediately changes its secret keys, because if this was an attack, the attacker may have stolen all the keys. And we need to be very careful not to allow some node that's not a member of the system to impersonate. Okay. So if the keys are stolen, some node that's not one of the replicas could pretend to be a replica, because it would know the right keys to use for communication. And if that happened, all bets are off, because all of a sudden, we have more than f replicas since these other nodes are impersonating replicas.

So we change the keys that this node will allow to be used for the messages that are coming to it. That's an action it can take unilaterally. And then, it sends out a recovery request to all the other replicas, acting as a client. Okay. So it's simply saying to the other replicas as if it was a client-- it says, I want to recover. Okay.

And when the other replicas get this message, they immediately change their secret keys. So that's the other side of the impersonation story. They won't accept messages from these other replicas, these impersonators either. And through a mechanism that I'm not going be able to tell you about in today's talk, they then establish a sequence number by which time the recovery will be complete.

So they're going to say, you know, right now, it's at n. At n+100, the recovery will be complete. And the protocol is going to ensure that this will actually be true by the time you get to n+100. Okay. Now, after this point, the replicas just continue doing their normal protocol, including this replica, this recovery. And this is very important, because if it wasn't faulty, it needs to act like a non-faulty replica. Otherwise, the system could stall. Or you might have too many failures.

And meanwhile, the recovery replica is checking its state using these digests that I talk about in communicating with other nodes to find out whether its state is the same as what the other replicas think its state ought to be. And if it discovers that it's missing some state, then it's going to fetch them using this protocol that I mentioned of the tree structured thing.

And by the time we get to n+100, the recovery will be complete. And the recovery replica will have recovered its state, as well. So that's the basic idea. Am I running out of time?

AUDIENCE: Got five minutes.

LISKOV: Okay. Good. All right. So I'm going to finish up very fast. We did some performance experiments. Basically, we implemented something called BFT, this little library that anybody who wants can get hold of. And then, we used it to implement a replicated file system. We did NFS, which is a common file system.

And we ran it on the Andrew benchmark, which is a standard file system benchmark, except it's old. And therefore, we made it much bigger than as originally defined. So we run something called Andrew100, which is 100 times bigger than Andrew and the Andrew500, which is 500 times bigger than Andrew. And we run it on a bunch of machines. And in particular, we're doing the four replicas to survive one failure case. Okay.

And here's our results. This is Andrew100. And what you can see is that we ran three versions of the system BFS with the full fault tolerance turned on-- no replica, which doesn't have any replication. So we're just running the bear algorithm on a single machine, and then the base system.

And what you see here is an interesting truth about NFS. First of all, you may have thought that it synced to disk when you wrote something to a file, but it doesn't. Okay. And that's why BFS-- No Replica-- No Replica and NFS have identical performance. You know, our system, No Replica, doesn't write to disk, because we're going to rely on replication to take care of the problem. We're writing to disk in the background. Okay. And we have identical performance to NFS. And that's because it's not writing to disk either. Okay.

All right. BFS is slower. And actually, in this particular case, it's about 20% slower. And the same thing is true in Andrew500. So we've run these experiments with both with Interfast and also with Solaris. And our results show that we run anywhere from 2% faster to 20% slower. We're faster than Solaris, because Solaris actually writes to disk. Okay. So we have good performance.

And I don't really have time to talk about this. But we have actually defined some future-- some further work that allows us to get rid of some of these requirements of state machine replication, deterministic operations in particular. And also, the algorithm I described to you relies on every replica having identical state. And that can be a problem if your implementations are non-deterministic. Or furthermore, you might like to be able to run different implementations of the different replicas. So those were limitations.

And we have an extension to BFT called base that overcomes those limitations by combining Byzantine fault tolerance with a kind of data abstraction that even allows us to run implementations that have slightly different specifications. And so the system as a whole satisfies an abstract specification. So this gives us what we call opportunistic inversion programming.

Inversion programming is the only way people have ever been able to suggest that would allow you to really survive software failures, because you can run different implementations. But the trouble is that you really can't start off from scratch to write four implementations of the system. If you take off the shelf implementations, which are available for important services, the chances are they don't do exactly the same thing. But this technique will mask their differences. And so we can get very nice behavior.

And as I mentioned a moment ago, there are co-authors. In particular, this work has been done primarily with two students-- Miguel Castro, who did the original work on BFT and finished his PhD about a year ago and Rodrigo Rodrigues, who worked on the base system along with me and Miguel.

So in conclusion, we have an algorithm that's practical. And not only that, but it really provides you with the kind of behavior you want, because these systems can survive, provided they're no more than f failures in a small time window. And there's a bunch of future work that we may or may not decide to do. By reconfiguration, I mean, when I talked about replicas, there was a fixed set of replicas.

Supposing that you'd like to remove one and put another one in, how do you manage to make that work? I thought proactive recovery would be simple when I started to work on it-- turned out to be hard. So I wouldn't be surprised if reconfiguration is likewise quite hard.

Scalability-- I'm really more interested here in the question of supposing have a great big system, how do I insert the right sets of replicas to make the system perform with advantages that BFT gives you without having to provide, say, four copies of every server in the system? And it would be interesting to look at performance under attack, supposing there's a denial of service attack. Or there's really a vicious hacker.

In the performance phase I showed you, we're just proactively recovering. But we aren't trying to simulate what would happen if there were real attacks in the system, although I must say that with the proactive recovery, because of the fact that we run these systems under heavy load, just the very act of recovering a replica means it loses a lot of state. So it's way behind. And therefore, we are already seeing sort of the cost of what it would be to bring a faulty replica back up to date.

Okay. So that's it. Yeah.

AUDIENCE: You showed that it was about a 20% performance hit, because I think it was four?

LISKOV: Yes.

AUDIENCE: If it were 10 or 20, would it also be 20%?

LISKOV: I can't remember. I mean, these results are in Miguel's thesis up to some number of replicas. He might not have gone beyond seven. The truth is people don't typically think of running systems like this with more than just a-- to survive more than a very small number of failures.

I can't invent the analysis for you. It's kind of what you'd expect. You know, there's going to be-- the more replicas there are, the more messages there are. And these will tend to interfere with normal case processing. So you would expect a degradation. If I think about his graph, I believe the degradation is not huge. It's kind of a small linear sort of degradation. But I should've brought his thesis with me. But I didn't.

AUDIENCE: Would it impact the more exponential growth messages?

LISKOV: Yes, but there's a lot of stuff going on in the system to optimize, you know, like the group-- you know, handling of groups and stuff like that that offset to some extent the kinds of problems that you would-- you know, you might have fewer, bigger messages, for example. But yes, absolutely. Yeah.

AUDIENCE: It just occurred to me that he used cryptographic keys here. Which parties have which keys [INAUDIBLE] share what your [INAUDIBLE].

LISKOV: Okay.

AUDIENCE: [INAUDIBLE]

LISKOV: So I didn't show you anything having to do with the private keys. Okay. Everything I'm showing you here is using secret keys. Okay. And--

AUDIENCE: [INAUDIBLE]

LISKOV: Hmm?

AUDIENCE: Conventional symmetric--

LISKOV: Conventional symmetric cryptography-- we used the public keys to establish the secret keys. Okay. And that's done on recovery. And it's also actually done every 30 seconds. So we're refreshing those secret keys all the time, using the private keys. Okay.

AUDIENCE: So each client has a key that it shares with--

LISKOV: One replica.

AUDIENCE: One replica.

LISKOV: Right. And each replica has a key that it shares with-- in fact, each replica has two keys that it shares with another replica-- one for sending messages and one for receiving messages. That was why replicas could unilaterally throw away the keys that they used for receiving messages, since they could still send messages, but they couldn't receive. Yeah, so there's a lot of secret keys floating around, and they're being refreshed periodically and actually at a pretty fast clip.

AUDIENCE: Thank you.

[APPLAUSE]

LISKOV: Yeah.