Project Athena - X Window System Users and Developers Conference, Day 1 [2/4]

[MUSIC PLAYING]

PETER DOYLE: I'll just give a quick talk about the extensions that we added in order to support 3D graphics output in X Windows and support for non-core devices used for 3D interaction. Re-iterating a bit about what Roco mentioned, one of the key features of the system is the high-performance, 3D graphics hardware that we want to take advantage of and having host processes access to shared structure memory directly. We had considered implementing a full 3D wire protocol. But there are a number of things that precluded that, one being the X extension mechanism was still evolving. X itself was still evolving.

We wanted to get it done. And we didn't necessarily want to take the performance hit of having to create graphic structures over the X wire. So the current implementation has 3D graphics clients building structures directly in structure memory and requesting traversals without having to go over the X wire. We've added a minimum 3D extension-- 3D in quotes here-- to provide windowing support for the 3D and address some synchronization issues and a separate input extension to support the additional devices.

Real quick here-- in core X, there is defined a bitmap graphics function which, under control of the graphics context, takes bitmap primitives and renders them into drawables. On the 3D side, it's a little more complicated. Here you want to take 3D primitives, build nodes out of them, put them into structures, then traverse the structures once they are complete, passing the 3D data into a geometry process, which will do the 3D transformations and clipping, and then in the case of polygons, do some rendering-- lighting calculations, shading calculations-- and then put it in the X drawable.

These contexts here you could look at in aggregate as being a 3D graphics context. But as we'll see, they map in two different places on our system. We also had to add some additional attributes to X drawables in order to provide support for double buffering and Z-buffering that the 3D graphics wanted to take advantage of.

Let's just take a quick look at how-- I had a little too much coffee on this line here, I think-- of how those functions map into our system. Because we have the 3D bypass mechanism, the formatting and the structure building take place in the 3D client. And that builds the client data structure directly in structure memory here. And then at some point, the traversal context, the traversal process, is executed by the structure walker in the graphics subsystem, passes the data on through the geometry pipe and into the renderer. Here, these contexts are modified on the fly by the process here, which is a bit different than the way X binds the attributes right when you issue the primitives.

On the server side, we added some additional attributes to the window. And in order to set up the graphics subsystem to put the data in the right place and the right format, we had to build some structure to set that up. And the thing that binds it all together is this traversal context, which will call upon the display context to set up the 3D destination and then call the client structure in order to render the image. And a lot like the core graphics context, the traversal context values are cached in the client process, which allows the 3D client to directly request traversals. So there isn't much going over the wire here.

This just shows how the traversal context works to tie things together, where in this case, you can have two traversal contexts which are pointing to two different structures. And they render 3D graphics into the same window. And here, two traversal contexts would access different parts of the same structure, where one traversal context would perform draw traversals and another one might perform a hit test traversal on the same structure or a write back in order to do some plotting, whatever. And here are the attributes that are found in the traversal context that basically specify how and when traversals are going to occur and where the destination is and where the source is.

Moving right along-- there are some issues that are raised when trying to do 3D graphics into bitmap windows. One is how do you map the 3D images into an X window, where typically 3D graphics wind up being mapped into some canonical volume here, in our case from minus 1, minus 1 to plus 1, plus 1, which is square. And X window bitmap graphics are defined as far as pixel locations in a window, which may not necessarily be square. So you have questions as to how do you map that data?

Another issue was support for the double-buffering and Z-buffering, which we solved by adding an additional attribute. We found that we had to synchronize, in some cases, 3D traversal with the bitmapped operations as far as windowing manipulations. You don't want to be rendering a 3D image as you're moving windows around the screen, because the structure walker is an asynchronous process.

And also, there's the idea of using the graphics structure as a backing store for the 3D window, where if you move your window, you can cause a redisplay traversal to occur. And your image is back. We asked ourselves the question whether we wanted to mix 3D and bitmap graphics in the same window. And it wasn't clear how we would do that since the 3D graphics were in structure mode and X bitmap graphics are in immediate mode. And you'd have to play games with building bitmap nodes and 3D structures. So we punted on that issue for the time. And then we had to handle some events generated by the graphics subsystem that occur at traversal time, of which there are many.

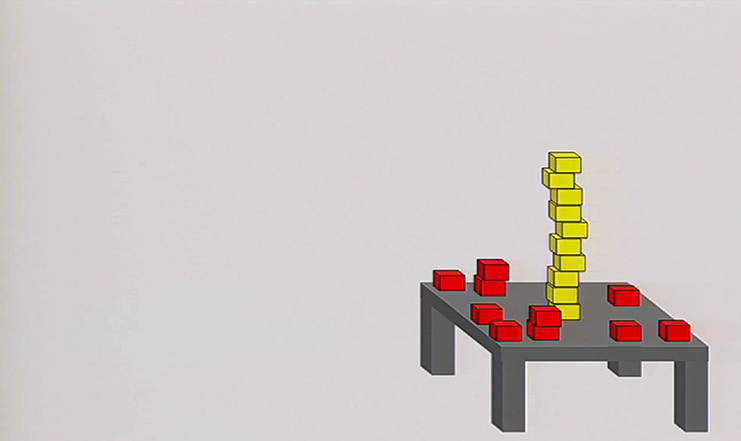

Just a quick picture here of what I was talking about as far as the problem of mapping 3D graphics into a window. If you happen to have some rectangular window that you have to put 3D graphics in, you ask yourself the question, do I want to maintain the aspect ratio of the object? And also, where do I place the object in the window? So this base geometry extender attribute works in tandem with that window BitGravity, as I'll show here. The easy way is just to map the viewport boundaries to the window boundaries. And you distort the object.

The other options here are to maintain the aspect ratio of the object. Here, you try to keep this square window within your X window. And using the BitGravity, you can place it wherever you want. Here is the converse of that, where you want to map the major axis here, minus 1 to 1. And you scale the other axis. So you lose some data. And another option is to maintain the size of the object. And resizing just shows more or less of your object.
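The four mapping options just described can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the mode names (`stretch`, `fit`, `fill`, `fixed`), the `Rect` type, and the encoding of gravity as a pair of fractions are hypothetical and do not come from the extension itself.

```python
from dataclasses import dataclass

@dataclass
class Rect:
    x: float
    y: float
    width: float
    height: float

def map_viewport(win_w, win_h, mode="stretch", gravity=(0.5, 0.5), fixed_side=None):
    """Return the pixel rectangle that the square [-1, 1] x [-1, 1] volume maps to.

    gravity is a hypothetical (gx, gy) pair in 0..1 standing in for bit gravity:
    (0, 0) pins the square to the top-left corner, (0.5, 0.5) centers it.
    """
    if mode == "stretch":          # viewport boundaries = window boundaries (distorts)
        return Rect(0, 0, win_w, win_h)
    if mode == "fit":              # keep aspect ratio, whole square visible
        side = min(win_w, win_h)
    elif mode == "fill":           # keep aspect ratio, major axis spans -1..1, minor axis cropped
        side = max(win_w, win_h)
    elif mode == "fixed":          # keep object size; resizing shows more or less of it
        side = fixed_side
    else:
        raise ValueError(f"unknown mode: {mode}")
    gx, gy = gravity
    return Rect((win_w - side) * gx, (win_h - side) * gy, side, side)

def to_pixel(rect, x, y):
    """Map a point in the canonical square to window pixels (X has y pointing down)."""
    return (rect.x + (x + 1) / 2 * rect.width,
            rect.y + (1 - y) / 2 * rect.height)
```

For a 400 by 200 window, `fit` yields a centered 200-pixel square, while `fill` yields a 400-pixel square whose top and bottom fall outside the window, which is the "you lose some data" case.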

And a few notes about the input extension-- we had to support such devices as knobs, tablets, button boxes, things you normally see in a 3D workstation. And the feature here is that we wanted to allow you to obtain device data via either an event delivery or polling explicitly for the data. We wanted to support some time sensitivity filters in the case of dials. You can easily overload the server by reporting too many events there. And we wanted to control the alphanumeric labels, or LEDs, on the devices.

Since X was still forming up, we decided to first tackle a simple mechanism of focusing device input to a client. And in fact, we do currently focus device input to a particular client and not a window, as you normally see in X. And it's rude in the fact that one client focusing a device will take it away from other clients.

This also has to work with standard window managers. So we didn't have support for focusing devices, say in a banner. You click a little button to get focus for the tablet. We didn't want to deal with that. So here, the client itself determines when to focus using FocusIn or EnterNotify events. So it's pretty thin there.

One second-- and the last slide, as far as what we consider in the future to do with the input extension, is to have a more sophisticated focusing strategy where it would look more like core devices. And we'd focus a device to a window. Looking at input contexts, where we wouldn't have to reload device state upon focusing, and adding some local device interaction-- for example, connecting a dial directly to a matrix and the possibility of downloading some interaction routines in order to do that local processing in the server, and last but not least, looking into some asynchronous event delivery that some people seem to like over the current poll model.

Can folks hear me? We're running a little bit long. So we will be around after this session for any questions that you want. One final note is that a lot of people contributed to this project. And it was their efforts that enabled it to work after all of this. And it's not only the people that were directly on the project but a lot of other people that worked on various components in the system.

[APPLAUSE]

AUDIENCE: They were talking about 10 seconds-- 10 words a minute.

AUDIENCE: Yeah. Yeah. I fucked up by taking too much time. Sorry about that.

AUDIENCE: What?

PRESENTER: That's OK. Don't apologize.

AUDIENCE: Oh, I'm sorry.

[LAUGHS]

AUDIENCE: Oh, boy.

PHIL GUST: OK. Got it. Good morning. I'm Phil Gust. I'm from Hewlett-Packard Laboratories. And I'm going to be discussing a topic that I call X in a distributed multi-user environment. And what does that mean? Well, we've got this idea of the distributed computing environment. And the idea of DCE is that you've got a person. And you have resources scattered all over the place. And the focus is to get that person access to distributed resources. That's the current DCE model.

What we think is that the future of DCE isn't going to be so much on the access to remote resources as it is to access to remote people-- that is to say, the support of groups working together. I think the 1980s are going to see this as the major thing for computing. Well, we got together and thought about what the implications were for most of the systems on the workstations. And one of the things we thought about was, what is the implication for the user interface of a distributed computing environment that supports groups of people working together?

And one of the most rudimentary things that we can do is to support the idea of sharing windows that are already in existence among a group of workstations so that people can work at them as though they were sitting in front of the same terminal. So we've come up with the concept of shared windows and a multi-user interface. And schematically, it means that.

There's a lot of other ways you can accomplish the same thing through semantic layers and remote procedure calls and all that. But I think that there's some use for allowing the interface to participate in that. What would you do with such a thing if you had it? Well, there's a number of things you could do from a very low level to fairly high level.

At the fairly low level, you could share an individual window that represented an application with someone else. For instance, you could have a debugger running and push it at somebody else and say, I've got a problem. Can you look at it? And you can both work on it.

Another thing you could do would be to share an entire window hierarchy, such as your root window, so that you could do consulting. I've got a problem. Would you look at this group of applications and find out why it's misbehaving? And the third thing that you can do is to create specific workspaces, graphic workspaces, for particular purposes, such as, say, chemical analysis if you have a suite of chemical analysis applications, and to put all your chemical analysis workbench running there and sort of switch channels or go into that place in order to work together on a particular problem.

So here's my mom and apple pie slide. The goals are sort of can we have it all? What we'd like to do is to allow existing X applications to continue to work in this environment and maybe be improved to the extent that a lot of X applications become immediately group-oriented applications because the window system supports that, with some caveats that you have to be careful how you use certain things. If I type in A and you type in B in an Emacs buffer, for instance, is that OK? Well, if it is, then we're for you. And also, we'd like to provide additional capabilities so that new applications can be written in order to exploit the sharing specifically. Can we write group-oriented applications using this kind of thing?

Well, there are some extensions that I think are necessary to any windowing system model or any interface model. And I'll address myself to X specifically. You need the concept of what does it mean to be a view of a particular window on a particular display, as opposed to the window itself? Right now a window and a view are synonymous because there's one and only one. But if I had one window and five of us were looking at it on five different displays on workstations, then you need the logical concept of a view and multiple views of a particular window in order to make this work.

You need operations that manipulate, create, and destroy views, such as sharing a view to create multiple views. How can I share them? What are the rules for that kind of thing? What are the operations?

There's some interesting problems dealing with sub windows when you create views. What are the rules for sub windows of views that are shared? For example, what are the rules for inputs in sub views that are shared? What's the behavior of input in general? Again, if I type A, you type B, that's fine. What if we're drawing and both of us put the pen down at the same time and start co-drawing on something?

And finally, what does it mean to point at something? The current implementation of X supports a local pointer. But if I want to say, no, no, this up over here, tap, tap, that's a little bit harder. You're reduced to some pretty rudimentary verbal descriptions over the telephone to make that work.

To get at some of these, what we've done is to implement a little toy library under X10. And the reason we did that was simply because we had X10. We didn't have X11 in any form that was reasonable to use. So we said, can we make some progress here? The library is not the right way to do it. But it helped us understand what the right thing to do was. And it wasn't too painful to do or throw away.

We made some assumptions in order to make this work well-- fixed color maps, fixed fonts, fixed tiles. I didn't want to get at that. I was trying to get at some of the much more simple underlying issues. And I'll worry about the really hairy stuff later, specifically when I get to X11.

We did some fairly sleazy things, like on-the-fly broadcast of pixmaps when they were needed. So if I created a new window, I'd make a copy of the old window on somebody else's server and update yours, for example. That's probably the wrong way to do it. And performance issues show us that that's true. That's the wrong way to do it. But there are some right ways.

Let me show you what the internal structure of this library looked like. It's not too important, but it sort of helps you understand what we're doing. We created a set of records for each of these concepts of a view, display, and window, and we shadowed a lot of the resources. So for instance, when I open a display, I create a display record, insert the actual display stuff in it, plus some other things that I need to make all this shared stuff work.

The same thing with windows-- we put the real window ID in the window record and then give you this fake window ID that looked just like a window ID. In fact it was called window. So the goal was, could an application that was already there be linked into this new library and still work? Hopefully, the answer is no or we didn't learn anything. And then we had view records, which were actually instances of windows on particular displays. And what we did is we created local windows, put them in the view records, and then managed those.
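A rough sketch of that shadowing scheme, in Python rather than the C an X10 library would have used. Every name here (`DisplayRecord`, `WindowRecord`, `ViewRecord`, `ShadowTable`) is hypothetical, since the talk does not give the library's actual types; the point is only that the application holds a fake ID while the library keeps the real one plus a list of per-display views.

```python
from dataclasses import dataclass, field
from itertools import count

_fake_ids = count(1)  # source of fake window IDs handed to the application

@dataclass
class DisplayRecord:
    name: str                  # e.g. "dx:0"
    connection: object = None  # the real display handle would be stored here

@dataclass
class ViewRecord:
    display: DisplayRecord
    local_window_id: int       # the real window created on that display

@dataclass
class WindowRecord:
    real_window_id: int
    fake_id: int = field(default_factory=lambda: next(_fake_ids))
    views: list = field(default_factory=list)

class ShadowTable:
    """Maps the fake IDs an application sees to the records behind them."""

    def __init__(self):
        self._by_fake_id = {}

    def register(self, real_window_id, display):
        record = WindowRecord(real_window_id)
        # The window's first view is the window itself on its home display.
        record.views.append(ViewRecord(display, real_window_id))
        self._by_fake_id[record.fake_id] = record
        return record.fake_id

    def resolve(self, fake_id):
        return self._by_fake_id[fake_id]
```

An application linked against such a layer would pass the fake ID to every call, and the library would call `resolve` to find the real windows to operate on.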

There are sort of two things you have to do to discover what needs to be done in a window system like this. One is, what about reinterpreting existing X commands so that things more or less work out? If you've done it right, perhaps a lot of applications will work just because you've placed the correct interpretation on the existing X primitives. Some of the things that you'd have to reinterpret obviously are rendering algorithms, because you need to multi-render on various displays, opening and closing displays, some things like creating and destroying windows.

Input processing turned out to be a real challenge because you'd really like to be able to distinguish where the input was coming from. If I type and you type, some applications may care. And some applications don't care. And the ones that don't care should see things just as they are, where the input streams are just coalesced at the application level-- and finally, resource management.

There's a whole bunch of new commands that you might want to add in order to exploit the sharing of-- to write sharable applications. And I'll show you an existing application that has to know about sharing in a minute. We need some ability to create new views. And I call that operation sharing. And to destroy a particular view, I call that unsharing. You might want to be able to specify what the parent of a new window view is when you share it.

We need some way of querying for a list of active views on a particular window. And we have a call like that that allocates some space and returns a list of these things that I call view info structures that are useful in a couple of other places. They carry some auxiliary information that there wasn't room for in the normal X input structure. So rather than go trying to extend that right now, we simply created an auxiliary structure that you can do a query to. And finally, being able to query for some more information from the last event that you got-- for instance, which view did this A come from so I can do the correct thing with it? If another user was already typing along, I might just save it up, for instance, until that user was done typing.
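The new operations listed here (share, unshare, query the views of a window, and query which view produced the last event) might look roughly like the following. This is a hedged sketch: the class and method names are invented for illustration, and the real library's calls and view info structure are not given in the talk.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class ViewInfo:
    """Hypothetical stand-in for the auxiliary 'view info' structure."""
    window: int
    display: str

class SharedWindows:
    def __init__(self):
        self._views = {}              # window id -> list of ViewInfo
        self._last_event_view = None

    def create(self, window, display):
        self._views[window] = [ViewInfo(window, display)]

    def share(self, window, display):
        """Create a new view of an existing window on another display."""
        view = ViewInfo(window, display)
        if view not in self._views[window]:
            self._views[window].append(view)
        return view

    def unshare(self, window, display):
        """Destroy one view; the window and its other views remain."""
        self._views[window].remove(ViewInfo(window, display))

    def query_views(self, window):
        return list(self._views[window])

    def deliver(self, window, display, key):
        # Remember which view the event came from, then hand the application
        # the ordinary event so unaware applications see a coalesced stream.
        self._last_event_view = ViewInfo(window, display)
        return key

    def query_last_event_view(self):
        return self._last_event_view
```

An application that does not care about sharing simply never calls `query_last_event_view`; one that does can use it to hold back input from a second user until the first has finished typing.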

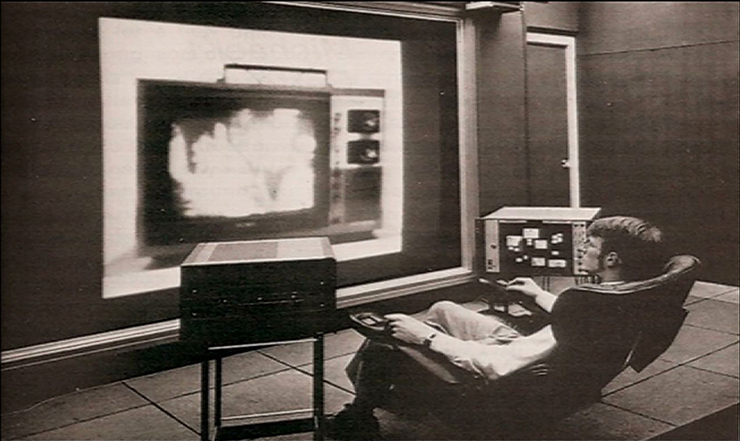

What I want to show you is a videotape that we did of a toy application. It's a whiteboard program along the lines of one that Xerox PARC has had running for a little bit in their CoLab. And the main point wasn't beauty or anything else like that. In fact, we had a graphic designer-- get the play going here-- we had a graphic designer design some very nice icons for it that I didn't get time to knit into this. The point was to get at some of the thorny problems. And this particular application is specifically knowledgeable about sharing.

I don't know whether this is going to work out. So I can't commit to it being a product or anything else like that. This is a sort of schematic picture of the shared whiteboard so that you can sort of see what it's about. It has a central area which is a draw window and then a tool tray down at the bottom and an easel up at the top. Now the scenario is that I have a whiteboard. I'm working on some difficult problem. And I call a collaborator to ask her to look at this problem, too.

What I'm going to do is to push a copy of my whiteboard at her. And I'm going to sort of describe what the problem is by doing some drawing. So I'll select the color that I'm going to draw from out of the tool tray. And I will draw this region. And you can see it being drawn on the other side as well.

What we've done is we've done some transaction handling so that 1,000 transactions or something like that happened before it flushed out to the other views. We can control that completely. What she's going to do is sort of indicate which region she thinks is appropriate and then suggest an alternative. Here's where a pointer would have been nice because we could have just said, no, this one. And instead, what she's doing is sort of drawing this one. And she's going to indicate how you might shape that region.
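The batching described here, where drawing operations accumulate and are flushed to the other views after some threshold, can be illustrated with a small Python sketch. The class name, the default of 1,000, and the use of plain lists as stand-ins for remote views are all assumptions made for the example.

```python
class BatchingBroadcaster:
    """Collect drawing operations and push them to all views in batches."""

    def __init__(self, views, batch_size=1000):
        self.views = views            # each view is a list standing in for a remote display
        self.batch_size = batch_size  # how many operations to hold before flushing
        self.pending = []

    def record(self, op):
        self.pending.append(op)
        if len(self.pending) >= self.batch_size:
            self.flush()

    def flush(self):
        for view in self.views:
            view.extend(self.pending)
        self.pending = []
```

Lowering `batch_size` makes remote views track the drawing more closely at the cost of more traffic, which is the trade-off the speaker says the application can control.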

Now what I'm going to ask [? Hei Wen ?] to do in this tape is I've got to go do something else. She's the expert. Could she please continue looking at the problem? She agrees. So her view stays up. And I'm going to shut my view down and go do something else. So this is an idea of not only can we share, but we can also have the idea of giving to somebody else. I can give you the monkey, which is a very use--

[LAUGHTER]

Which from an engineering point of view is a very intelligent thing to do. So there's a living, breathing example of an application which does this. Some of the knotty problems we ran into I'll point out in a little bit. This is a schematic picture of that application.

There's a tool tray down here which has drawing and erasing and typing tools. There's an easel tray up here where you can tear off what's in the central screen and put it up so you can have five or six of these drawings pending all at once. And there's a central drawing area for the current drawing.

Now I used color coding here to indicate that the red windows are shared. That's to say, the application specifically, when it creates a new instance of a whiteboard, links in these shared windows. And the blue windows are non-shared. And you'll notice my root window for the application is not shared. And the tool tray-- everybody has their own tool tray so that I can draw in red and you can erase all at the same time. And finally, the easels-- we'd all like to see what we've all torn off and put up there.

And off to the right there, I've drawn a tree which kind of shows you how that structure looks. Some of them are unique windows in a particular workstation. Some of them are shared among the application.

What I'd like to do is to show you some of the implications of being able to share windows and to create these kinds of linkages. I'm going to show you a sequence of slides. And each of these represents some action on the previous slide. We have two servers-- DX and DY-- with some window hierarchies on each. And the first operation that I'm going to do is to share with DY a particular sub window structure from DX.

So there I've done it. I've shared X2. And one of the things is this WYSIWIS concept, the what-you-see-is-what-I-see kind of concept. The WYSIWIS concept says that the sub windows have to go with it. And there's some particular geometric implications of that about positions within sub windows. But those windows are actually running on the blue server. And a view of them is on the red server. The color indicates which server, if it died, would make those windows go away.

The next thing that I'm going to do is on the red server-- DY-- I'm going to create a window. And all of a sudden, it magically appears on the DX server as well. Because after all, it's a sub window of a shared window. And so the rules are it shows up there, too, and everywhere else.

The next thing that I'm going to do is kind of kinky. I'm going to take two existing windows-- Y2 from DY and X3 from DX-- and I'm going to make those sub windows of that new Y6. That says that I can have multiple views even on the same server pending under that. So this is a really horrible situation. But if you implement it correctly, this is possible. And in fact, it's even desirable at times.
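The slide sequence can be replayed with a toy model in Python. It is a sketch under simplifying assumptions: the `Window` class and its methods are hypothetical, the child name `X2a` is made up, and the model only tracks which servers hold a view, not geometry. It also never shrinks the viewer set when a window is re-parented out of a shared area.

```python
class Window:
    def __init__(self, name, home, parent=None):
        self.name = name
        self.home = home          # server the window actually lives on
        self.children = []
        self.viewers = {home}     # servers currently showing a view of it
        self.parent = None
        if parent is not None:
            parent.adopt(self)

    def _spread(self, servers):
        # Sub windows go wherever their parent is visible.
        self.viewers |= servers
        for child in self.children:
            child._spread(servers)

    def share(self, server):
        """Create a view of this window, and its whole subtree, on another server."""
        self._spread({server})

    def adopt(self, child):
        """Make child a sub window of this window (create or re-parent)."""
        if child.parent is not None:
            child.parent.children.remove(child)
        child.parent = self
        self.children.append(child)
        child._spread(self.viewers)
```

Replaying the talk: share `X2` from DX with DY, create `Y6` on DY under it, then re-parent `Y2` and `X3` under `Y6`. Each window keeps its home server, which is what decides whether it survives a server going down, but all of them end up visible on both servers.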

There's some real serious problems to doing this. And what we want to get at is what does it imply in terms of window system design to hit these problems and to think about these in advance? The claim is that I believe it's possible to modify the X Window System to make this work. It's probably possible to modify the NeWS window system to make this work, and any future window systems. But the point I want to make about all this is that it's important to think about it in advance. Like any other piece of good design, you have to know what the problem you're attacking is.

There's some real bad problems-- needing to share other resources used with windows, for instance pixmaps, graphics contexts, whatever else. And part of the approach is, for instance, the per-window color maps are a good start. But you need a lot of other things linked to individual views that go along with it when it's shared. We could do some things like larger resources could be demand-loaded at render time or whenever else. These are classical operating system problems of when you bring resources in, page resources in. And you could cache for efficiency.

There's a new networking concept that's going to be implemented relatively soon in commercial systems. And it's called multicasting. And the idea is I can send one message out to a mailing list of servers, for example. And they all get the same thing. That's very convenient in terms of implementing the shared window system because I don't want to have to have n broadcast events to get out to n servers. I'd like to broadcast one thing and it gets out to all of them-- very efficient. But there's some problems with the way things are done right now that make that difficult. And part of it is, can we correct that?

We'd like to be able to send a pixmap out and have it mean the same thing to all possible servers. We'd like to send a bunch of stuff out and have it mean the same thing to all possible servers. And what we'd like to do is to have each server sort of resolve it in terms of its server-specific stuff when it gets there, rather than trying to send server-specific ones out for each one. So you might want to, for instance, send translation maps when things change to particular servers. And that's OK sometimes. But the bulk of your transmission should be server-independent.
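One way to picture the server-independent transmission idea is below, again as a Python sketch with invented names. Logical color names stand in for whatever server-independent encoding would really be used, and the loop in `multicast` stands in for a single multicast send; neither detail comes from the talk.

```python
class Server:
    def __init__(self, name, translation):
        self.name = name
        self.translation = dict(translation)  # logical color -> this server's pixel value
        self.framebuffer = []

    def update_translation(self, changes):
        """The occasional server-specific message: sent only when the map changes."""
        self.translation.update(changes)

    def receive(self, logical_pixels):
        # Resolve server-independent data into server-specific values on arrival.
        self.framebuffer = [self.translation[p] for p in logical_pixels]

def multicast(servers, logical_pixels):
    """Deliver one server-independent message to every server."""
    for server in servers:
        server.receive(logical_pixels)
```

The same logical pixmap ends up as different pixel values on each server, so the bulk data never has to be rebuilt per destination.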

Inputs from multiple views are a real problem. In the drawing program, for instance, I had to be specifically knowledgeable about drawing. Because if I put my pen down and you put your pen down and we both start co-drawing at the same time, or co-erasing, which we do sometimes in whiteboards, you'd like to be able to keep those separate if you care. And we had to write the application so that we were specifically knowledgeable about which view was giving us the input by that auxiliary query.

What you might want to do instead is to adopt a more transaction-based model so that you could keep extended transactions like drawing distinct, or any other extended transactions you like. You'd like to allow applications, or for instance, a user-interface management system, to be able to define what a transaction was for a particular group of windows or a particular application. And finally, you'd like to be able to define appropriate default transactions so that applications not written to take advantage of sharing could nonetheless co-exist with applications that were.
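A transaction-based input model along these lines could be sketched as follows. The event shapes, the `TransactionDemux` name, and the choice of pen-down to pen-up as the transaction boundary are assumptions for illustration; the talk only proposes that such boundaries be definable.

```python
class TransactionDemux:
    """Group an interleaved event stream into per-view transactions."""

    def __init__(self):
        self.open = {}        # view -> events of that view's open transaction
        self.completed = []   # (view, events) pairs in completion order

    def feed(self, view, event):
        kind = event[0]
        if kind == "pen_down":
            # Start an extended transaction for this view.
            self.open[view] = [event]
        elif view in self.open:
            self.open[view].append(event)
            if kind == "pen_up":
                self.completed.append((view, self.open.pop(view)))
        else:
            # Default transaction: a lone event stands on its own, so
            # applications unaware of sharing still see ordinary input.
            self.completed.append((view, [event]))
```

Two users drawing at once produce two separate strokes rather than one tangled line, and a plain keystroke passes through as a single-event transaction.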

Finally, a social type of thing-- I would be really loath to think somebody could wander into my window system and steal a window. Users are very uncomfortable with that concept. So what I would like to do is to address privacy problems. And again, the solution, the first step in solving that problem, is to think about it in advance. You don't want to implement a particular social policy in a window system-- I claim this. But you'd like to provide adequate tools so that users who care can. And they can have different ones.

Some of the issues, for instance, are to give individual control over who can share my resources. X sort of does that right now in terms of who can put something on my screen. More than that, I'd like to allow control over how things that I have shared get used, this capability idea. So for instance, if I give you a window, can you pass it on to a third person or does it stop there? The least I might be able to do is have read and write bits so that you can look at a window, for instance, but not modify the window if I can say, look at this. But I might want to go further than that in terms of what I can do with this window once I've got a view of it, once I've been given a view of it.
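The capability idea (read and write bits, plus control over whether a view can be passed on) can be sketched with Python's standard `enum.Flag`. The `Right` and `Grant` names and the rule that a receiver never gets more than the giver holds are illustrative assumptions, not a policy stated in the talk.

```python
from enum import Flag, auto

class Right(Flag):
    READ = auto()      # may look at the window
    WRITE = auto()     # may modify the window
    RESHARE = auto()   # may pass the window on to someone else

class Grant:
    """Tracks who holds which rights on one shared window."""

    def __init__(self, owner):
        self.rights = {owner: Right.READ | Right.WRITE | Right.RESHARE}

    def give(self, giver, receiver, rights):
        held = self.rights.get(giver, Right(0))
        if not (held & Right.RESHARE):
            raise PermissionError(f"{giver} may not pass this window on")
        # Assumed rule: a receiver can never get more than the giver holds.
        self.rights[receiver] = rights & held

    def can(self, user, right):
        return bool(self.rights.get(user, Right(0)) & right)
```

Giving someone `Right.READ` alone covers the "look at this, but don't modify it" case, and because `RESHARE` was withheld, the window stops with them.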

And finally, the bottom line control of a window might want to stay with the server-- or with the application that created it on the machine where it's actually executing. Again, which machine, if it dies, makes all views of that window go away? So there's the bottom line control. I can turn off my machine or kill that window.

And finally, what are some future directions for this kind of work? Well, I'll look into my crystal ball. And I'll say that we need to assess the impact on application architecture. There's a lot of things that you may have to change in your application architecture, just as there were a lot of things that had to change when people started adopting toolkits that modified or inverted the program architecture. You might suspect that there's some things that have to be done in applications in order to exploit sharing properly.

You'd also like to consider window management implications of this stuff. What does it mean, for instance, to have two window managers controlling views on particular servers, which is the way they would do it now, and yet those window management policies perhaps being in conflict because the windows are shared? And if a window moves on one screen, it also moves on the other if it's a sub window of a shared window. You'd like to be able to understand what to do in that case.

There's also some neat things you might be able to do. You might be able to create a group environment-type window manager that supports a Rooms-like concept, for example, out of this. You could already sort of do that under X11 anyway. But it would be even more fun if we had a group-oriented one running across machines, where there wasn't a monolithic process controlling that, but instead, a bunch of cooperating Rooms-like window managers that can make this work. And each of us has different views of rooms perhaps, if I want to.

You could see that in the slide. If I took something from my room and moved it to a shared area, re-parented it to be a sub window of a shared window, that's kind of like moving it into a public room. And similarly, if I took something out of a shared area and moved it as a sub window of a private window, that's sort of like putting it into a private room. So there's a start there at a concept of being able to do that. There's also social problems with being able to do that, too-- for instance, taking something from you.

Finally, I'd like to look at what higher level user interface toolkits you would need and how you would exploit this concept in existing toolkits. For instance, if I bring up a menu and I don't know which one to choose, could I push a copy of the menu at you and say, here. I don't know which one to pick. What do you think? And perhaps you could pick it instead. I mean, that's a perfectly plausible thing to do that we do in real life, but we'd like to be able to do it in our electronic real lives, too, which are sometimes more real than-- distressingly more real.

So that's sort of what I've been thinking. And if anybody has some thoughts about some things that I've left out or some help they could give me in terms of suggesting directions, I'd be glad to talk to you any time today or tomorrow about it. If you believe it's absolutely impossible to do any of this, I'd like to know about that, too, before I go off and finish it.

[LAUGHTER]

My research budget is limited, just like everybody else's. But I feel in my bones that it's something I'd like to have. We had it 20 years ago with TOPS-20 systems. And I'd like to sort of regain that and perhaps even go further than we were able to go 20 years ago with that kind of thing.

[APPLAUSE]

PHIL GUST: Yes? Hi.

AUDIENCE: Hi. Well, I kind of like what you're trying to do. But I really don't like the way you are trying to implement it. It seems to me you can do this without any modification of any of the clients or any extensions to the X server. All you need to do is to implement a nested server that-- I mean, we already have a nested X server, which takes a bunch of clients and images them into an X window, right? As far as those clients are concerned, it's just the X server.

All you need is an X server that takes a bunch of clients, images them into a number of X windows on different real servers. And you need that central coordination point in order to provide what I believe in this thing is called control of the floor. You know, you take the floor. And you have control of what's going on or is the term? I can't remember. Anyway, you need some central coordination point to hand the control of the input device effectively around. And that's what this pseudo server provides.

So for each session, you have a pseudo server which is imaging onto windows on a number of real servers. And it seems to me you can do that. You don't need to affect the applications. You don't need to change the actual server at all.

PHIL GUST: We actually-- we kicked around three possible ways of implementing this. And the idea of a super server or a nested server is one of them that we plan to look at. Because it's a pretty heady thing to be able to go in and actually whack on the X11 server or something like that. And I'd like to avoid that. So the idea of a co-server that's like the nested server feels like the right thing to do to me, too. I agree.

From writing some applications that use this, though, it feels like there's some issues that aren't addressed in X that really have to get addressed by whatever you implement, however you implement it. And input management is a critical one, I think, that's not there. And having new tools to implement input management and view update management and view control seems to me to be important. And I don't know whether or not it's doable without any modification.

There's the other side to looking at it, too. One is do you have to make any modifications to get existing X applications to work? And that's true. But are there new opportunities you could have if you thought about it a little bit further and added some things so that new applications could be written that went beyond what was possible today? And that's sort of the second part and to me, the more interesting part of doing it. I agree with you. I think it's possible for existing X applications to continue working. I sure hope it is, or we're in trouble. Hi.

AUDIENCE: Thank you. I wanted to also endorse this. I think this is very important work. We're trying to look at this, too, at Bellcore. We had sort of a similar idea of making a box that looked like a server to the application and a bunch of applications to all the various servers, but also have an overlay capability to be doing drawing and passive pointing, because any window that multiple people can see, you probably want to have some sort of passive conversation on top of, so perhaps talk later.

PHIL GUST: Thank you.

AUDIENCE: But I wanted to tell you that I thought this was very important work and endorse this kind of stuff in the community.

PHIL GUST: Please come talk to me afterwards. I'd like to. Any other questions? Thank you very much.

[APPLAUSE]