Brains, Minds, and Machines: Consciousness and Intelligence

ULLMAN: I'd like to welcome everybody to this panel on Consciousness and Intelligence. And we have with us three distinguished panel members to discuss some of the most difficult and most fundamental problems in the entire domain of mind and brain.

So let me start by introducing the speakers, and I will not go into the full list of their academic distinctions and so forth, of which there are many, but I will focus briefly on the relation to the topic of today, namely consciousness and intelligence. I will do it in alphabetical order, starting with Ned Block on the left. He's the Silver Professor of Philosophy, Psychology, and Neurosciences at New York University. He works on the philosophy of mind and foundations of neuroscience and cognitive science. He's the past President of the Society for Philosophy and Psychology and of the Association for the Scientific Study of Consciousness. He has, of course, many, many publications, but a collection of his papers on functionalism, consciousness, and representation is two large volumes published by MIT Press.

Christof Koch is the Lois and Victor Troendle Professor of Cognitive and Behavioral Biology at Caltech. He's currently on leave from Caltech, and he's the Chief Scientific Officer at the Allen Institute for Brain Science in Seattle. With his long-term collaborator, Francis Crick, he pioneered the scientific study of consciousness. And he's the author of the well-known book, The Quest for Consciousness. And there is a new book by Christof about to be published shortly, I believe, titled Consciousness: Confessions of a Romantic Reductionist.

And the third member, Giulio Tononi, is a Professor of Psychiatry at the University of Wisconsin. The main focus of Giulio's work has been for a long time the scientific understanding of consciousness and also a large body of work on the function of sleep with close connections to the study of consciousness as well. Among his publications, there is a book with Gerry Edelman that many of you probably have seen, which is called A Universe of Consciousness: How Matter Becomes Imagination. And a more recent book on the topic of consciousness is titled The Neurology of Consciousness: Cognitive Neuroscience and Neuropathology.

Now let me say just very few words about the topic and then call upon the three members of the panel. And the order would be Giulio Tononi followed by Christof and finally Ned Block. Now clearly, consciousness is one of the most intriguing and most important topics in the study of the mind and the brain, and I think in science in general. It is also very special and very, very unique because consciousness cannot really be studied using the same scientific tools which have been proven successful in many other areas of science.

So because of this, consciousness has been sort of left out of the study in science for many, many years. And only recently there has been a revival, or even, say, a revolution, and consciousness came back into the mainstream of scientific studies. And this was in large part following the pioneering studies and discussions which were led by Francis Crick and Christof Koch. And I think that another reason why consciousness came back into the mainstream of scientific studies has been the advance of certain new technical tools, like fMRI, that allowed us for the first time to study brain processes in the conscious human brain in a way that was not possible using previous techniques like EEG, brain lesions, and so on. So suddenly it became possible to have human subjects and put them under conditions where they are either conscious or unconscious of what happens in the brain, and we can look at the brain activation and try to make correlations and conclusions, perhaps even some causal but at least correlations, between certain brain activations and conscious states.

Now the scientific study of consciousness raises many, many different questions and problems, and we will not tackle them all today. The goal is more restricted. We will focus on the issue of the relationship between consciousness and intelligence and on many interesting and intriguing possibilities that we would like the panel members to address in their presentations and in subsequent discussions. For example, we would like to discuss and consider whether consciousness is in some sense a byproduct, whether it comes about by intelligent processing.

So if you have something which carries on intelligent information processing, whether this in some sense makes the system become conscious. Whether, if we build machines for example, that would become more and more intelligent, whether we can expect that some form of awareness may result from the mere fact that they are producing highly intelligent processing. Or in the other direction, one can ask whether consciousness is necessary for highly intelligent processing. Whether to become really intelligent, in some deep sense, to really understand certain concepts, whether this involves some form of consciousness. And whether if we want to build intelligent machines, we will have to understand something about consciousness. And we would have to make sure that in some sense these machines have some form of awareness or whether this is not at all a necessary condition.

Now, we cannot hope, of course, to answer these questions. These are very deep and complicated questions that we are just starting to deal with, but I hope that some interesting discussions would follow. And also that we will get at least some feeling for the kind of scientific techniques or what kind of scientific investigations in the domains of brain science, in the domain of machine intelligence, could help us provide some better understanding of the problems that we are discussing today.

So we will have now a sequence of three presentations. Since there are only three of us, and not four or more, we will be able to be a little bit more relaxed with time and have slightly longer presentations, but not very long. We will keep them still rather short, and then we will open it for discussions, first within the panel, and then we will open it up also to questions from the audience.

So I would like to call upon the first speaker, Giulio, please.

[APPLAUSE]

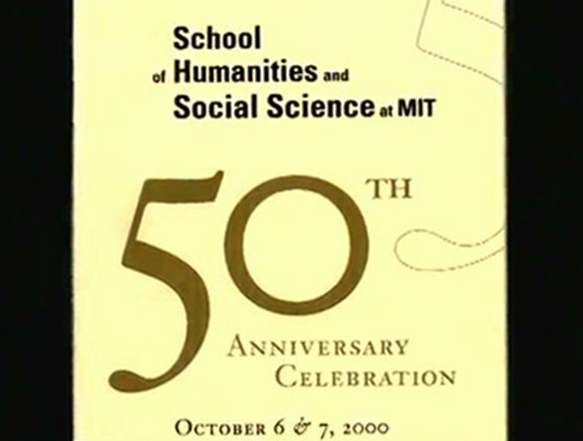

TONONI: Thank you, Shimon. So in exactly the same era in which MIT was founded, Thomas Huxley, Darwin's bulldog, who wasn't really shy about anything and thought everything could be explained, said consciousness cannot be explained. And as we all know, and as we heard two nights ago at the beginning of this symposium, even 150 years later there are many people who think that we'll never be able to bridge the gap from matter and mechanism to imagination and experience.

Now it has been stated, for instance, that consciousness cannot be defined. I actually believe it's much easier to define than intelligence itself. I usually define it as that thing that goes away when you fall into dreamless sleep or are anesthetized or somebody hits you on the head, or presumably when you die. It also has been said that in 50 years or so-- this was two nights ago-- consciousness-- we won't even talk about. It would have gone away. I would believe that that may be so, but if it is so, the universe would be a pretty empty place then.

So having said that and remembering why the problem is so difficult, I want to bring back a couple of facts I'm particularly fond of. They are very big facts about the brain and consciousness that tell us that an explanation must be there, and there must be a very special relationship between mechanism and forum.

So what you see there is an old PET image of the activity of the cerebrum lodged in the cerebral cortex. And as you heard from Christof yesterday, that has roughly 16 billion neurons in humans. And then underneath is the cerebellum, which is smaller but has more neurons, roughly 50 billion or so. Now the cerebellum is a wonderful kind of machine. It has more neurons, as I said. It has all kinds of connections-- neurotransmitters, neuromodulators, genes. It's incredibly complex. It has maps of the environment. It controls movement. It connects to the cortex. And it learns beautifully. It's extremely plastic.

So you may want to decide for yourself whether it is intelligent or not. But one thing is for sure, if you take away the cerebellum completely from the brain, your consciousness is essentially unchanged. It's the same person, having the same feelings, seeing the same shapes and colors, and hearing the same sounds, and so on and so forth. If I take away your cerebral cortex, nothing is left. You're not there anymore. So there is something about this beautiful, complicated machine, very intelligent and capable of learning, which is just not good enough to generate even a glimmer of experience. That tells us something or at least it should.

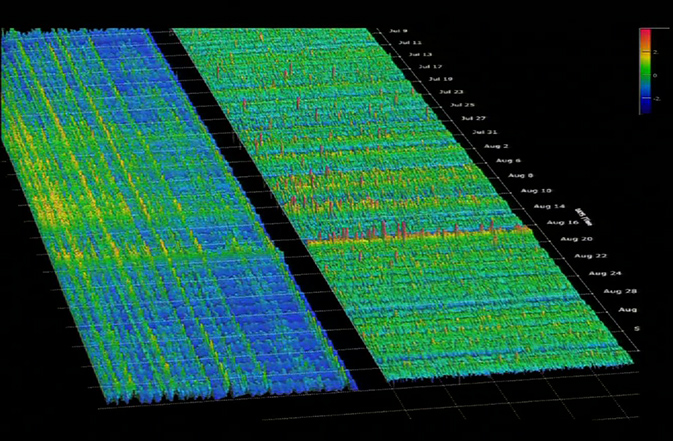

Another example I'm fond of is the sleeping brain, especially during that early phase of slow wave sleep in which if I wake you up, you're not there. You have nothing to report. As far as you're concerned, the world is not there. You're not there. Nothing is there. But if you look at neural activity-- this is a cat, but the same is true in humans as we now know-- in a higher-order visual area presumably involved in consciousness, you can see the activity continues unabated. Firing rates are very much the same as in quiet wakefulness with very, very small interruptions like these. So there are some interesting differences, but the brain is remarkably active. The same cortex is remarkably active, and yet our experience disappears altogether.

Now this is not only of practical significance to address consciousness and understand how it's generated. It's, of course, also important when you consider situations like this. This is the cortex of a healthy human brain, and this is a vegetative patient of Niko Schiff in which you see most of the brain is annihilated, most of the cortex, but there are some islands of activity that are actually reasonably normal, let's say in areas of the brain that deal with color. If those parts of the brain are preserved, one can ask, will there be somebody there experiencing just colors for instance, but nothing else? Or will there be nobody there at all?

Those are questions that we definitely need to know the answer to. For instance, what if that were an area for pain? Will there be pain forever, excruciating, or will there be nothing at all? And on the left you see a case of dementia, in which the brain fragments into pieces of activity, and as we all know by seeing some older people become demented, it becomes difficult to know whether there is anybody home. So we should start looking at science to provide at least some potential answer to these questions.

Now these questions apply then to everything. We heard today about development. That's the neural activity that happens, the only PET study really in infants like that, that happens early in the brain of an infant. Some areas show up. Is there again some experience associated with that? Is it the blooming confusion some people talk about or even more consciousness than you find in adults as others have stated?

Or if you go to animals, which is preferred by philosophers, because of course that is one situation where it's very difficult to imagine what it feels like to be a bat. If you have a brain like this though, which is not too unlike ours, maybe they do experience something like we do. What's the answer to that? And I particularly like the moray eel because that is a philosophical animal par excellence.

Look at how it looks and how it thinks. And the kind of brain it has here is extremely different from ours. So how much consciousness is there? Of course, the theme of the symposium is more about how much consciousness is there or will there be in machines that may be highly intelligent by any criterion, any machine that we may already have. How do we go about addressing those questions?

I think the way you have to go is to develop a theory. You've heard many times the call for theories that are needed in addition to empirical studies. To understand the mind and consciousness is probably the place where theory is most important. So a theory of consciousness needs to do several things. One is to define what consciousness is, what determines the quantity and quality. Another thing is to be able to go back to neuroscience and account in a parsimonious manner for many empirical observations, like why the cerebrum and not the cerebellum, why wake and not early slow wave sleep, and so on and so forth. There are many other examples.

And even account for those aspects of phenomenology that right now seem ineffable. Like what makes the experience of pure red different from that of pure blue, or a sound, or of the scene that is in front of me right now. All those questions must have a scientific explanation.

Now the two axioms I believe are most important to understand what consciousness is come actually straight from phenomenology. You don't need to do any science or experiments to know that. You know it directly. The first one is that every experience by itself is extraordinarily informative, not because of how many chunks of information are contained in it, but because of what it rules out. Whenever we have an experience, the one you're having right now, it rules out trillions and trillions of other possible experiences that you could have had but you didn't. And it distinguishes it from them in that particular way, from each and every one of them. That is the essence of information, no matter how you want to measure it.

And so that's the key feature of consciousness. Every experience is what it is because it is different in a particular way from many, many multitudinous other ones. But there is a second related feature that we cannot forget, and that's the integration. Meaning, every experience is what it is and cannot be decomposed into independent parts. We can talk about the various pieces, and you know what's on the left and what's on the right, but we cannot experience them as independent things. It doesn't even make sense. In fact, to be able to experience the left side of the visual field independent of the right side, or the shape independent of the color, you would need to split the brain. In fact, split brain patients are the only ones who can do that. But then they have two consciousnesses and not one.

So now, the basic idea, purely from phenomenology then, is that, given those two key features of consciousness, the physical systems that generate consciousness should be able to be treated as a single entity. It should be one. That's the integration part. And at the same time, it should have a huge repertoire of distinguishable states due to its own mechanism. Those two requirements have to be there together, and if any of them goes away, consciousness should vanish.

If the repertoire of states decreases, the system becomes like a coin instead of a die with a trillion faces as depicted here. Then you have little repertoire. So any state of the system can only distinguish between two. The world is divided into two things, this or not this. Or vice versa. If you lose the integration, the system breaks down into many little pieces that may store a lot of information if you want, represent a lot of information, but it's not a single entity any more. So we'll see. There are already examples, and there are experiments we can do to address these two properties in the brain.
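
As a rough illustration of the coin-versus-die comparison, the size of a repertoire can be expressed in bits. This is only a minimal sketch, assuming every state is equally likely; the function name `repertoire_bits` is hypothetical and is not a measure taken from the talk.

```python
from math import log2

def repertoire_bits(num_states: int) -> float:
    """Bits needed to single out one state among equally likely alternatives."""
    if num_states < 1:
        raise ValueError("a repertoire needs at least one state")
    return log2(num_states)

coin = repertoire_bits(2)        # two faces: this or not this
die = repertoire_bits(10**12)    # a die with a trillion faces
print(f"coin: {coin:.1f} bit, trillion-faced die: {die:.1f} bits")
```

On this simplified count, each state of the coin distinguishes between two alternatives (one bit), while each state of the trillion-faced die rules out far more (roughly 40 bits).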

But before I go there, let me tell you at least in principle, how one can go ahead now and develop conceptual tools and finally measures to capture these two notions of information and integration. What you see here is simply a very simple physical system, three AND gates, simple Boolean gates that happen to be, all three of them, A, B, and C, off. The way to look at this, I think, is to imagine that for every physical mechanism in a particular state there is a corresponding information structure.

And the first thing we want to know about that information structure is can it be decomposed into independent parts? Is it integrated? And you can do that by asking what is the information generated by these three AND gates when they are off? Well, if you work through this example, you will see that they rule out some possible states. The system couldn't have been in certain states, but it could have been in some others. And if you would divide it into parts, along this minimum information partition here, something is lost. That is, the system, the whole, cannot be reduced to the sum, or in this case the product, of the parts. And this is a precise definition of the extent to which the whole is more than the sum of the parts, in terms of information.
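
The whole-versus-parts comparison can be sketched in a few lines of Python. This is a hedged toy version, not the speaker's exact measure: the wiring (each gate computes the AND of the other two) is an assumption, since the slide is not reproduced here; units outside a part are replaced by uniform noise; only the three two-way cuts are tried; and no normalization is applied. The names `step`, `repertoire`, and `information_lost` are hypothetical.

```python
from itertools import product
from math import log2

N_UNITS = 3  # gates A, B, C at indices 0, 1, 2

def step(state):
    """Assumed wiring: each gate outputs the AND of the other two gates."""
    a, b, c = state
    return (b & c, a & c, a & b)

def repertoire(part, current):
    """Distribution over prior states of `part` compatible with its current
    state, with every unit outside `part` replaced by uniform noise."""
    outside = [i for i in range(N_UNITS) if i not in part]
    weights = {}
    for inside_vals in product((0, 1), repeat=len(part)):
        hits = 0
        for outside_vals in product((0, 1), repeat=len(outside)):
            prior = [0] * N_UNITS
            for i, v in zip(part, inside_vals):
                prior[i] = v
            for i, v in zip(outside, outside_vals):
                prior[i] = v
            nxt = step(tuple(prior))
            if all(nxt[i] == current[i] for i in part):
                hits += 1
        weights[inside_vals] = hits
    total = sum(weights.values())
    return {vals: w / total for vals, w in weights.items()}

def information_lost(partition, current):
    """KL divergence in bits between the whole's repertoire and the
    product of the parts' repertoires."""
    whole = repertoire(tuple(range(N_UNITS)), current)
    parts = [repertoire(p, current) for p in partition]
    lost = 0.0
    for state, p in whole.items():
        if p == 0:
            continue  # states ruled out by the whole contribute nothing
        q = 1.0
        for part, rep in zip(partition, parts):
            q *= rep[tuple(state[i] for i in part)]
        lost += p * log2(p / q)
    return lost

ALL_OFF = (0, 0, 0)
whole = repertoire((0, 1, 2), ALL_OFF)
possible = [s for s, p in whole.items() if p > 0]
bipartitions = [((0,), (1, 2)), ((1,), (0, 2)), ((2,), (0, 1))]
losses = {cut: information_lost(cut, ALL_OFF) for cut in bipartitions}
weakest_cut = min(losses, key=losses.get)
print("prior states still possible:", possible)
print("information lost across the weakest cut (bits):", losses[weakest_cut])
```

Under these assumptions, observing all three gates off rules out four of the eight possible prior states, and every two-way cut loses some information, which is the sense in which the whole is not reducible to the product of its parts. A fuller calculation would search all partitions and normalize the result.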

Now that is a way to define a quantity called phi that tells us exactly how much more the whole is than the parts, but there is more to that, and I will only indicate it briefly. So that very causal structure here in a particular state, if you work it out, cannot be decomposed. So it's integrated. But it will specify many different probability distributions. And those are supposed to be the very distinctions that make every experience what it is.

Now I'm showing this for a system of three AND gates that are off. You can only imagine what kind of information structure would be generated by the appropriate parts of one of our brains. It is, indeed, impossible to even contemplate right now, but it's probably expressing somewhat of the richness of experience.

Now with such tools at hand, one can then go back to neuroscience and look at those examples and many more that I mentioned before, facts we know about consciousness. So why is the cortex capable of generating consciousness, at least some parts of it? Well, the cortex is an ideal structure for that from this perspective. It's made of functional specialists-- specialists that do different things-- we heard it many times-- and yet they all talk to each other. That's ideal for generating a lot of integrated information, a lot of phi.

By contrast, the cerebellum is a wonderful machine, intelligent if you wish, but it is composed of little modules that hardly talk to each other at all. So it really decomposes into many pieces, each of which generates very little integrated information. And there is no integrated information structure corresponding to the cerebellum as a whole. That, I think, is the answer to why the cerebellum can't do that.

There are many other things one can do. I just want to show you one experiment in which one tests some of the very basic notions of this theory by going to the sleeping brain, that early part of sleep when we are unconscious, and one can use transcranial magnetic stimulation to perturb a particular portion of the brain and see how the cortex reacts to that perturbation.

And we can see here-- well, I hope we can-- I'm not sure if I can start the movie. Let me see. Yes. If you perturb the brain when the subject is awake, you will see that under the coil this brain reacts very strongly, and then the activation of the brain jumps around in all kinds of places in the cortex, indicating the system is behaving as a single entity. You touch it, and it rolls around in all kinds of ways for roughly a third of a second. It's integrated, and it has a vast repertoire of states, just as you would expect when you are awake and conscious.

And you do the same thing in the same subject in the same place at the same intensity when you are unconscious early in sleep. And what you see there is that the brain is indeed active. It is reactive. It is responding very strongly to the stimulation, but that activity doesn't go anywhere. The system doesn't behave like an integrated entity anymore. It actually breaks down into pieces. And every time you perturb a piece, only that piece will respond, and not the rest of the cortex. So when consciousness vanishes, the integration has vanished too in the cortex.

We have now seen this not only in sleep, and we have seen it return when you're asleep but dreaming. We have seen the same thing, integration vanishing and consciousness vanishing, in anesthesia. We have seen that in vegetative patients who are unconscious. And by contrast, we've seen the response of the brain turning again integrated when the person becomes minimally conscious. Presumably experience has resurfaced there.

So let me finish by giving you then, based on this theory, a recipe for building a conscious system. The minimal ingredients are you need then a system which is a single entity. That's the integration. So it cannot be decomposed into informationally independent subsystems. You need that system to have a very large repertoire of distinguishable states by virtue of its own mechanisms. That's the information part. And when you put that together, you will get a system that's actually capable of generating a very large set of informational relationships.

And to prepare the transition to the next presentation, let me show you one picture to try and illustrate this notion. We have heard many times earlier in the symposium that we now have subsystems, very sophisticated ones, that can deal with restricted task domains very well. They can identify, for instance, pedestrians. They can identify street signs. They can identify where the road ends. And you can imagine, actually you have been told, that in the next four or five years we'll have a plethora of these that are going to be able to do, in their own limited domain, a job probably better than anyone can do, each of them within its limited domain. And the information structures generated by them can be summarized as local, independent, and limited.

But when we look at this scene-- well that's obviously wrong-- these are some that are not so obvious. When we look at that scene, the claim is that somewhere in our brain there is a single large integrated information structure being generated. This is the trivial one generated by three logical gates, but as I say, imagine the one that really happens there. And the claim here is that this kind of intelligence, the one that is context sensitive, that is needed to approach task domains that cannot be decomposed into independent parts, and that's the world, well that kind of intelligence presupposes consciousness. In fact, the claim is stronger. That kind of intelligence is actually consciousness.

Thanks.

[APPLAUSE]

ULLMAN: Thank you. Now Christof. You can watch time. The clock is there.

KOCH: All right, I'll be brief. This past Christmas I spent 10 days enjoying the hospitality of Giulio and Chiara in Madison, and between readings of Dante we thought about this question of the integrated information theory of consciousness, how would that apply to machines? And I think some of those thoughts are highly germane to what we are trying to answer here, which is the relationship between intelligence and consciousness, which is going to come out next week.

So the two axiomatic principles that Giulio just mentioned for the integrated information theory of consciousness, we experience both of them. One of them is that every conscious experience excludes a stupendously large number, a sheer uncountable number, of other experiences that we could have had. So in the visual domain, even an image of black. Two nights ago I woke up in my hotel room at 5:00 in the morning. It was still dark, and so it took me a couple of seconds to orient myself as to where I was in this new place, but even that experience just of black, it still rules out seeing the burning twin towers. It rules out seeing the face of my daughter or seeing every image of every movie that's ever been made.

At the same time, and this is a point that's always been emphasized by philosophers such as Ned Block, conscious experience, the current content of what you're conscious of, is unitary. It's holistic. Now that might sound very airy fairy to many scientists, but what it means is that what you're currently conscious of you apprehend as one, and you don't divide. You're unable to subdivide. Of course, what you can do in terms of images, I can shift my eyes or my head towards the left or to the right, but once I see something, whatever I consciously apprehend I cannot subdivide anymore.

So for instance, what I consciously apprehend right now with the current state of my attention and my eyes, the scene in front of me, meaning you, I'm unable to sort of consciously just apprehend the left half separate from the right half. I'm consciously unable to, for example, see only black and white information that surely is available in my visual system. We know this, but I also see color. I'm unable not to see color unless there's something wrong with my photoreceptors. If something moves and I have a properly functioning MT and motion system, I'm unable not to perceive the motion, if it forms part of this one conscious experience.

And so from that is the idea that consciousness, which is really experience, it's just a different word for what we experience all the time, is just a set of informational relationships. And they have to be A, highly differentiated, certainly if you care about human level of consciousness, and they have to be highly integrated. And this leads to possible ways to test this. In an analogy to-- of course, you all know the famous article by Turing published in 1950 where he described the Imitation Game. You put a man and a woman in two rooms, and then you have a third operator, an interrogator, who tries to find out who's the man and who's the woman. The modern version is slightly different, but it's the same idea. So this would be a test that you can apply in principle to machines in the domain of images. We prefer images because images are immensely richer than words. After all, an image is worth more than a thousand words.

A system's capacity for integrated information, that is, for consciousness, can be measured by asking how much information the system contains above and beyond that contained by its individual parts. So as I said, pictures contain a huge amount of implicit information that we all have direct conscious access to, if you're a healthy, neurologically normal individual.

And so a test for integrated information-- if you have a particular machine, and you're interested to what extent it is conscious-- let's leave apart for a moment the question of the extent to which it's really intelligent-- you can ask it what Shimon did yesterday. So you can show the computer a very large number of images, and you can try to get it to interpret what it is and try to make sense of this image. There's so much information in any given image, all the relationships in an image among the different parts, that we have implicit access to in terms of the spatial relationships, in terms of the perspective cues, in terms of the semantic content, but also in terms of the individual physical elements that make up an image. And we have those because they are implemented in our brain. They are implemented both as a product of our genes, of the experience of our forefathers, as well as our personal experiences that we have in our early childhood.

So one way to address this is to generate images and to ask a machine to make sense of those images. There are different ways to do it; the one I'm going to pursue here briefly is to ask a machine what's wrong with these images. So as children we had these puzzles where we had to find out what's wrong with an image, and you can do the same thing with a computer. And for a computer, the space of things that could be wrong with an image is gigantic. It's not only the things that I'll show you that could be semantically wrong with an image. So, for example, you put an image of a bicycle in a refrigerator, and obviously we know that that doesn't make sense. But you can also manipulate images to show that the physics of the image is obviously impossible. And you can, given all the pixels that make up an image and given all the objects that are in a typical image like the one in front of me, you can manipulate small objects, and to any child that's obviously wrong.

So, for example, there are a few images like this. Now think of a split brain patient. So I think that the best way to have intuition about integrated information theory is to think about a split brain patient. So you have a patient who has experienced severe epileptic seizures and to alleviate those seizures the neurosurgeon-- this operation was first done in the '40s-- the neurosurgeons cut sometimes the entire corpus callosum, the 200 million fibers that connect my left cortical hemisphere with my right cortical hemisphere. So now there are two cortical hemispheres that are sort of independent. And of course, we all know from the work of Roger Sperry at Caltech, it turns out that there are two entities now inside this one skull.

As far as we can tell, there are two conscious beings inside this one skull. If you talk to this individual, to this patient, you're going to talk almost always to his or her left hemisphere, because as we know, the left hemisphere is typically the one that's linguistically competent. The right one can sing. If you silence the left one, for example using a Wada test, you can sort of query the right one in simple ways. And it can sing. It can answer simple questions, and it can do very sophisticated tasks. So Roger Sperry concluded that to the best of our knowledge, there are two conscious minds with two cortical hemispheres, but they're totally independent. They don't communicate with each other. So in that sense, there are two conscious minds inside one skull.

And so you can show in principle, and people have done these sort of experiments, you can [INAUDIBLE] someone take a simple picture like this-- this is a desk in Giulio's office-- and you can cut out the central part, and you can ask a normal human if you have your intact corpus callosum you can easily ask them to answer this question, do these halves match? And yes, in this case they do. And in this case they don't match because obviously they're taken from a very different part of the house.

So this is something that a split brain patient could not answer. The left brain would see the right image and the right hemisphere would see the left image, and to both of them they look perfectly fine, but only somebody that has this unified consciousness, and the substrate of that has to involve the 200 million callosal fibers. Only a person who has integrated information could answer that question and say yes.

This is another beautiful picture I found on the web two days ago. It really shows this very nicely. And think about what it would take for a computer vision algorithm to recognize this. All the local parts, of course, are perfectly, highly accurately rendered, graphically rendered. But then of course, a child could tell you that's physically just impossible, it doesn't fit together. Now, if you think about the local pieces of cortex that have access to local information that's not integrated as a whole, locally everything is perfectly fine there, but of course globally it's a jarring image.

You can look at images like this. It gets a little bit more sophisticated where you have these abrupt-- the towels are abruptly cut. Just think of how many images you can generate where you sort of play around with silhouettes, where you can abruptly cut off an arm for instance. You can, like in this case, you can abruptly cut off a head. You can make changes in the skyline. For example, you can make a V-cut here in the skyline. There are so many things. You can play around with the colors. You can play around with the shadows. There's an innumerable number of things that you could do with images.

And if you as a normal, conscious human person with normal visual experience, if you consciously apprehend the whole, you will immediately know there's something wrong. Of course, it depends on the granularity. If I just mess up a single pixel, you might not have the sufficient spatial resolution. So here for example, we know, again, locally, this little person, everything looks okay. But you have to know about perspective. And certainly a child after seven or eight years old will know that this is physically impossible. There's something wrong with this image. Obviously it can't be built in the real, three dimensional world. You can play around here. You can cut out this.

You can have another silhouette that's going to be more difficult to find here, but again you can see this person here is just half cut off. You can have things like this that are obviously-- now again think of, in terms of computer vision algorithm-- if you do, for example, a Google Image search, what would it take to recognize that this is obviously physical, that this picture obviously isn't in the real world? Because once again, all the individual things are perfectly fine locally. It all makes sense. But globally, if you put it together, it obviously doesn't make sense.

Or a case like this. This is actually a picture of a performance artist in Germany. It's actually a real picture. That's what he does for a living.

[LAUGHTER]

Think of it a little bit like a CAPTCHA. I mean you all know these CAPTCHA tests, like when you have to go fill out credit card information. You have to try to identify these words. It's getting more and more difficult because the algorithms are getting better doing it. So one can think of this a little bit like in that spirit of visual based tests, and you have to ask at what stage in the development of machine vision are we going to be at the point where you can show a computer these images, and the computer will be able to tell that something's wrong or even to identify what is wrong.

Now of course the insidious thing is if I just fix a single test. So for example, a couple of years ago Microsoft had a test to identify humans and distinguish them from bots that asked them to distinguish cats from dogs. And of course, now if you put a lot of computer power behind that particular question, you can surely make a local algorithm that all it knows and all it does is to differentiate cat images from dog images by doing a machine-based learning on those images.

But the point about this idea is that there are innumerable numbers of things that we have implicitly encoded in the structure of our visual cortices, and all that information is consciously accessible to us. And so you would have to make a computer, in order to build a computer that can do this you have to build a machine that totally understands images the way we understand images.

So you can't just have a local set of very successful algorithms. Like for example, Arman [INAUDIBLE] showed us two days ago, in terms of detecting pedestrians. Or as we know there are now very, very immensely successful machine vision systems that can do face recognition much better than any human can.

But this is highly specialized because this face vision system will not know, unless again you program it to, the emotional expression of the face. Or if you show this face identity software an image where Julius Caesar is shaking the hand of our president, this computer vision system could maybe identify the persons as Julius Caesar and Barack Obama, but wouldn't know that obviously this is a physical impossibility for them to have met, because these algorithms are very local. They have local information; they don't have access to global information.

Here's another picture. Obviously anybody can see that this is wrong. Locally it all looks perfectly fine. So our claim is that once we have machines that can pass this sort of test, not just in one instance because, once again, you can always build-- but if you have a particular test, like if all you want to test is the orientation. If you turn the orientation, Google has done this. If you want to test for the proper orientation of images, of course, you can build up statistics of the natural world. And ultimately, you can have an algorithm that distinguishes an image from this one.

But if you, for example, locally mess up the orientation, or once again if you think of all possible things that you could mess up in an image and if a computer could understand all of that, then our claim is you would have built a machine that has integrated information, and that therefore per the integrated theory of information, that has conscious experience of a different structure because its brain, its architecture, is radically different, but it would have conscious experience.

Now human level consciousness it would also be considered intelligence. So the claim is that once you build machines that possess human level intelligence, that's equivalent to building a machine that has human level consciousness and vice versa. In this case they're really identical. So in other words, if you have a general purpose machine that really understands just in the visual domain the entire range of images that we understand, then it would be intelligent, at least vis-a-vis images, and it would also be conscious.

Now of course, you can have dissociations. You can have double dissociation. So in principle, it depends just what we mean by intelligent. If you have a machine like Mobileye that drives your car autonomously, like it looks like we're going to have in a few years, by some measure, of course, it's intelligent. If you look at Watson, that's intelligent, but it has no conscious knowledge about the world because it doesn't have access to integrated information. Or it only has very, very little integrated information.

Likewise, if you think about the brain of a bee. I love bees, 850,000. It's incredibly complex. They have learning. They have this dance. They have this collective decision making when they look for their next nest. So by any measure, if you look at the integrated information among 850,000 highly sophisticated neurons, at least the theory tells you quite clearly, and its behavior repertoire is so complex that you can assume yes, it does actually mean something to be a bee, that the bee does have experiences, that there is a geometry behind the informational relationship built up in the brain of a bee. Yet by any conventional definition it wouldn't be intelligent, at least not human level intelligent.

So what this predicts, that if you have human level intelligence that's equivalent to human level of consciousness, at least in the visual domain, but it may be different for specific types of highly specialized algorithms for machines, and it might be different for certain types of biological consciousness that don't share what we would call high level intelligence. Thank you.

[APPLAUSE]

[SIDE CONVERSATION]

BLOCK: Well, no slides. So let me say to begin with that I'm very pleased to be here. I was an MIT undergrad in the days when everything worked, and I was also on the faculty for 25 years. There we go. So I'm going to very briefly go over some basic features of consciousness.

I have to say just at the outset that I'm quite skeptical about the theory that Giulio and Christof hold, the theory of integrated information. I think it's a theory of something, and both Giulio and Christof mentioned that it's consciousness that is also intelligence. But I think it lacks some basic features of theories that ought to be considered theories of consciousness, and I'll get into that.

So we can distinguish between three aspects of consciousness. First of all, there is a specific perceptual representation. I'll be talking mainly about perceptual consciousness. So for example, the representation of red versus the representation of blue, and I am indicating that these are conscious representations by the little cloud around the red and the blue. So that's one aspect of consciousness, what differs between the conscious experiences of red and blue.

But there's also the difference between an unconscious representation of red and a conscious representation of red. One of the advances in contemporary theories of perception is that we now understand that there's a lot of unconscious perception.

And the third feature is what makes a creature a conscious creature. So this is a conscious creature, and this isn't. So what is the difference between a whole system in virtue of which one system is conscious, and the other isn't?

And there are four basic theories that have been offered. There is the Global Neuronal Workspace Theory. And each of them differs in regard to what they take to be the representations, what makes the representation conscious, and what makes a creature conscious.

So just going very briefly through these because there's so little time. There's the Global Neuronal Workspace Theory that takes brain representations. I've indicated here the representation of motion in the visual system. And the idea is that what makes that representation conscious is that it's made available to certain mechanisms, higher cognitive mechanisms, of planning, decision making, of control of behavior of a certain sort.

What makes a creature conscious? Well, I put that in parenthesis because in all the theories of consciousness, except the one that Christof and Giulio advocate, what makes a creature conscious really is a kind of a byproduct. It's not a serious aspect of the theory. What makes a creature conscious is just that it has a conscious representation.

Another view is the higher order view first put forward by Locke. Or I should say this is Bernard Baars, [INAUDIBLE] [INAUDIBLE] and Jean-Pierre Changeux. John Locke, the idea there is that a conscious state is a state one has another state about. It can be a thought or some other kind of state. And then there's the physicalistic view, put forward perhaps first by Hobbes, but in the modern era put forward by Francis Crick and Christof. So Christof appears on here twice.

[LAUGHS]

Now I've purposely put him, in this case as being a little wild and crazy with orange hair, because I think that the informational theory does fit that.

[LAUGHTER]

So this is the theory that I hold. The key feature here. I've put the important part of the theory in red. So in the case of the Global Workspace Theory, it's this broadcasting. In the case of the Higher Order Theory, it's this attributing by a higher order thought or higher order state. In the physicalist case, it's both. Brain representations, and there are various proposals as to what makes those representations conscious, as opposed to unconscious representations.

In the case of the Informational Theory, the key notion is that phi as you've just heard, and it applies to a system, a system that has many different possible states and a system that is also integrated. So that's high Phi. But it's a little unclear what this theory says either about perceptual representations or about what makes them conscious. And this is the crucial thing, what makes them conscious, as you'll see.

So the key fact that I'm going to present that I think is a kind of a test for a theory of consciousness is how does it deal with certain cases in which a single representation can have a conscious and an unconscious part. And I'll just give you some examples. In order to understand the examples, you need to know about a neuropsychiatric syndrome called Visual Spatial Neglect. It's usually the left side of space. These are the drawings of an artist who had a stroke, right sided parietal stroke, and that keeps people from having conscious perception of the left side of space. And here's how he drew himself shortly after the stroke. He just draws one side, not the other side. And you can see the gradual improvement.

Now here is an experiment, one among many, that shows that a single representation can have an unconscious and a conscious part. This is an experiment done by Tony [INAUDIBLE] in which a neglect patient who couldn't see the left side of figures was given some examples of the Müller-Lyer Illusion and the so-called Judd Illusion. And the key thing here is this. This figure looks the same to the subject as this figure, even though the left sides are different.

He's asked to bisect the line. Now there's the objective center of the line, and this subject perceives the center to be shifted to the right. So there's an overall rightward bisection error, but there's also a normal influence of these brackets. Despite not being able to see these things, they have their normal effect. And this is a common effect observed in neglect, which is that the patient shows that he sees the whole object, although partly unconsciously.

Here's another example where the subject fixates here. The subject does not see this, does see this. But if it's rotated, the subject sees the part on the left showing that there was an object representation which was tracked throughout this change.

Here's another kind of case, which I won't have time to explain in very much detail, but there's a way which Christof's lab has much explored, which in its most important form was actually invented by a post-doc of Christof's called continuous flash suppression. It's a way of making a stimulus invisible. And I won't go into the details. I won't even describe what's up there. What you have is an invisible, fearful face that becomes more and more, higher and higher in contrast, while the thing that makes it invisible decreases in its invisible making power.

And what you find is the fearful face breaks through the cloak of invisibility faster. So this is time on this axis. This is a fearful face, breaks through in a little over two seconds, whereas a happy face takes two and 1/2 seconds, and a neutral face takes somewhere in between. So a fearful face breaks through the cloak of invisibility faster, showing that it is seen unconsciously and that the unconscious representation then can become conscious. So this is a single representation that is conscious at one time later but had earlier been unconscious.

So the idea here is, the question is, what does a theory say about what makes a content conscious? Now the problem with the informational theory is it's a theory of systems. It isn't clear how it can even apply to a single representation. It doesn't seem to be geared for that. It seems to be geared for a whole system being either conscious or unconscious. And the examples that you've seen are in cases of slow wave sleep, anesthesia, the cerebellum is a completely unconscious thing. But what is it for a single representation? We know from the David Marr generated Theory of Vision, we know that there are individual representations which are structured items in the visual system. We know that they can be conscious at one time, unconscious at another. They can be partly unconscious and partly conscious, but how is a Theory of Integrated Information going to try to explain that?

Now I suppose one thing it might say is, well it becomes more integrated. But I'm not really sure what this would really come to in terms of the constructs of the theory. But there's also another problem which is that we know that unconscious representations are in some ways better integrated. And there are some famous studies that show that people integrate large numbers of items better when they don't think about them, than if they do think about them. So it looks like there's a special kind of integration, conscious integration. But if we say that, we haven't really gotten anywhere.

Now let me move just briefly to the issue of consciousness versus intelligence. As both Giulio and Christof said, it is a daring thesis of the Integrated Information Theory. And by the way, intelligence here is used in the sense of this Turing paper, where it really just means the capacity for thought. It's not something that could be measured by an IQ test. It's rather whether you're a thinking thing or not. It's not a graded thing.

And as I said, the examples that Christof and Giulio give are all cases where consciousness disappears, like in epilepsy, anesthesia, slow wave sleep, and the cerebellum, et cetera. Those are low phi. But of course the capacity for thought disappears in those cases, too. Now we have cases which we know, or I think we know, that are cases of consciousness, but unknown thought capacity. Let's see if I can get one to come up here. There is an example. And we have other cases, hypothetical cases, where there's known thought capacity but unknown consciousness. And there are a few examples, at least hypothetical examples.

I think we'd need some pretty powerful argument or evidence to think that consciousness really was the kind of thought-- certainly at the conceptual level they seem very different. And the examples I've given give you a very specific way in which they differ, which is that with intelligence it makes no sense to talk about the intelligence of a single representation.

It makes no sense to speak of a single representation that is first unintelligent and then intelligent or has an unintelligent part and an intelligent part. But as we've seen, it does make sense to speak of a single visual representation which is partly unconscious and partly conscious. So it looks like the Integrated Information Theory is a theory of something different. So I think that a good theory of consciousness should distinguish these things, and that's the end.

[APPLAUSE]

ULLMAN: Okay, thank you very much.

As you saw and as we could have expected, there are different points of view about consciousness and intelligence. You touched upon it very briefly, but I would like to see if we can elaborate a little bit at least on where you stand, not necessarily what the truth is. But certainly in consciousness we have more than intelligence that we are trying to explain. There is this thing that makes the discussion of consciousness so difficult and so different from the more standard scientific discussions that we assume we know from our first hand experience that we have some kind of internal feelings. We have what in the more accepted jargon in the field, we have some kind of qualia that are associated with different things. And we always ponder, we are not sure whether or not even intelligent machines have something like qualia, which can be the perception of red or pain. And I think that people do not discuss it much, but there is also something about cognitive qualia.

There is a difference. You can try to solve a problem by mechanically following symbols around and do some symbol manipulation, but sometimes I look at the problem and I see now I really understand what the whole thing means. And it's not the pain, and it's not redness, it's not heat. It's something more cognitive that goes from the mechanical symbol pushing and following to some sort of a feeling of understanding which has something that I would call cognitive qualia.

And I was wondering from the theories about intelligence that Giulio and Christof briefly outlined and what Ned was saying, what is your feeling about it? Do you think that trying to explain something about the subjective qualia, is it included in general in the Theory of Information and computational approaches to the brain? Is it something different that at the moment we have to put aside and would simply not be encompassed by our theories? Or is it something that as Sydney Brenner suggested, sort of the metaphor to the questions about life? Is it something that will just go away, and it will not be answered by yes or no, here is the exact answer. It will just dissolve and will no longer be a problem. I'd like to go through the panel and see what you think about it.

KOCH: It's not going to go away. It's been here for as long as humans are conscious. It's not going to go away because we have difficulty understanding it. So the key difference between consciousness and black holes and viruses and genes and neurons is consciousness has this interior aspect, and the black hole and genes don't have this interior aspect, and that remains to be explained. The central fact of our existence, that we have these conscious states.

You allude to a very high level, cognitive, sort of aha and other types of states. Ultimately, they also need to be explained, and the difference between them and red and blue is ultimately the geometry of the informational relationship. I think right now for tactical reasons, it's not as easy to explore those experimentally. You can't manipulate it very easily in a magnet, although people have tried to do these aha experiences in a magnet and using EEG.

In particular, if you want to do animal experiments where you have access to the different representations, I think you need to do simple sensory forms of consciousness first. So that's why I think exploring vision and smell, olfaction, and audition is probably much more practical.

ULLMAN: I don't think it's so much about the difference between an aha experience and redness, but whether the whole realm of phenomena will basically be explained in terms of entropy and information and computation and speed of processing and how many states and so on, or whether something else will be required?

KOCH: I think ultimately the answer has to be in information theoretical terms. Information is the idiom to think about conscious experience, unless you postulate some extra stuff which I assume you're not. So I think it's the right language. We may not have the right calculus yet, or we're still groping for the right calculus, but I think it's the right idiom to understand. It's supervenient on highly organized pieces of matter. Certain informational relationships that arise out of the causal structure give rise to experience.

BLOCK: Can I add something to that?

KOCH: Sure.

BLOCK: So philosophers have this notion that sometimes is called the explanatory gap, and the idea of it is this. Suppose I have an experience of something red. And suppose I actually know what the underlying brain basis of that is. Still, for any brain basis that we understand today, the question will arise why is that brain basis of that experience the basis of the experience of red as opposed to the experience of green or no experience at all?

And it's commonly thought among philosophers, and I certainly agree, that no brain description we have today gives the faintest clue of an answer to that. So I think the only rational conclusion to come to is that there are missing ideas. Now some people go dualist and say, "oh that shows there must be a soul," but I don't go with that. I don't think any of the three of us would go with that.

So I think the only thing to think is we lack the right ideas for closing this explanatory gap. So I think we just have to keep looking, deal with conscious phenomena, including cases where consciousness is present in one case and absent in another, and much of this stuff is set out very nicely in Christof's 2004 book. I think only by pursuing that can we ever hope to close that gap.

KOCH: I'm skeptical. It's going to be like the birthers, right?

[LAUGHTER]

See no matter what you say, there's always this gap there. And we've seen where that leads us. I think we do have a cohesive explanation. It's profoundly counter-intuitive. That's why people have difficulty wrapping their mind around it.

Ultimately it's about operations. It's about questions that you can pose. You can answer. And all questions experimentally that you can pose, at least in principle-- what part of the brain, how long does it take to develop, is it in children, when does it happen in the fetus, what about bees and flies and birds and mice and all of that-- all those questions can at least in principle be answered by such a theory. If all the questions that I can pose legitimately in an operational sense can be answered, what more do I want of a theory?

Just because right now our intuitions aren't very well developed about it because it's very counter-intuitive, but it's consistent with all the facts. I think that we have to accept it. The same thing was happening with relativity and quantum mechanics, profoundly counter-intuitive, but ultimately we accept it. That's my point.

BLOCK: So my colleague, Tom Nagel, has a nice example of trying to explain to a Presocratic philosopher how it can be that matter is energy. And what he points out is that that Presocratic philosopher would have lacked a concept of matter and the concept of energy that would have allowed him to see how those two could be concepts of the same thing. And I think the same is true of us. We lack a concept of consciousness and a concept of the physical that allows us to see how they could be the same thing. I have no doubt--

KOCH: You do. I don't.

[LAUGHTER]

BLOCK: I have no doubt that they are the same thing. Will you have done what?

KOCH: No, you say you don't--

BLOCK: I have no doubt that they are the same thing.

KOCH: Have the right concepts.

BLOCK: I'm a physicalist.

KOCH: Okay.

ULLMAN: Okay. I'd like to [INAUDIBLE]. That's entirely expected that we will not reach a conclusion on that. But since this is also about the brain, it was prompted a little bit with what Ned was saying-- I mean it's something that, Christof, you helped start-- I think that a very large part of the program so far in the scientific study of intelligence has been on the so-called NCC, what is the neural correlate of consciousness. And many studies have been done on that by merely finding clever ways of invoking in the brain relatively similar by the same stimuli, relatively similar situations, but in one case being perceived consciously and in another case not like in rivalry and in many other examples.

Now we all know and agree that the cortex is very important for generating or being involved in consciousness, but what do you think now in talking about the brain side of it, what we have learned from the NCC in more specific terms? I think if there is one surprising recurring phenomenon that came out is that very complicated and informative brain states can be unconscious, that sometimes there is a lot in the brain that the person who owns the brain doesn't know about.

But if you look with an fMRI, for example, into the brain of this individual, you can gain information about what this person is perceiving and how some of his concepts are organized without him being conscious of it. So there is a lot of informative activity inside the skull, inside the brain, which nevertheless does not reach consciousness. So there is something surprising. And can we say something more about what makes, what distinguishes those brain states which reach awareness and those that do not? Can you add any more from the summarizing 20 years of experiments on this topic? What would be the main take home messages?

KOCH: Well, okay, so the most important one is the one Shimon pointed out because this was a long time debated. It's not any brain activity that gives rise to conscious experience. It's not even any activity in the cerebral cortex. It was long thought, so people made these arguments going back to the 19th century, [INAUDIBLE] spinal cord you get reflexes, but anything that's in cortex obviously has to be consciously accessible.

And experiments first on a monkey and then later on fMRI experiments in humans show conclusively that's not the case. There can be literally millions of neurons firing away in your visual cortex, yet you or the monkey is not conscious of the representations that are associated with that. That poses the question in very stark relief, similar to the cerebellar cortex.

What is it about these states that give rise to conscious sensation? And there it seems to involve many cortical areas. If you are conscious of something, typically an area in the back as well as the area in prefrontal cortex, although it's still debated whether you cannot get activity only in the back of cerebral cortex and still be conscious of it. And it seems to involve relatively spatially distributed ensembles of neurons that are somewhat synchronized, either using high frequency oscillation, although there the link is closer to attention, and/or synchrony.

Unfortunately, in humans we don't have access to the underlying neurons. It's very, very difficult to really say much more about it. We know it takes a couple of hundred milliseconds to establish. We know there are certain electrophysiological signatures. If you put large scale electrodes in patients, you can see there are certain electrophysiological signatures that are characteristic, but it's very difficult to make progress because of extremely limited ability to query the human brain.

That's why I believe, as I said yesterday, we really need to instrument animal brains where we can record from the individual cells, because it may well be that what I suspect, because conscious information is so specific, and we know one of the lessons of neuroscience is the sparse representation for high level stimulus such as Jennifer Aniston. There's only a very small subset of neurons that respond to that.

You have this rapidly shifting representation. It's not a single representation. There are many representations. The conscious one is a single, highly integrated one. But there are lots of other ones that associate with it, and we need to be able to track them in real time, to be able to read in real time on a single trial the conscious content, and we can't do that now.

ULLMAN: Giulio.

TONONI: Well, there are many things to say, so I say nothing before. So first, it is indeed true that we need to do cellular level investigations of large swaths of cortex, ideally in the mouse, see what's actually going on. We can't do that without understanding the underlying mechanisms. Again, even from a theoretical standpoint, you need both the connectome, the wiring diagram, and activity patterns to even understand what might be going on. The two must go together. And that's coming. It will be coming in the next few years.

But even so, that's not enough. Not only do you need the theory to know what to do with that, and the claim here would be that you need to be able to see what are the integrated information structures that are generated by that particular causal structure in the cortex in that particular state. But I would claim that once we get there, we actually will see consciousness as the elephant in the room. Consciousness has always been the elephant in the room, but now it's the elephant in the brain. We're going to see this gigantic brain TV that Christof was talking about yesterday, and we perhaps will have a decent notion of the connectome underlying it.

And if we just look at this flickering pattern, we understand absolutely nothing about what is going on. Inside there-- among a fraction of those neurons and among a fraction of those connections-- we don't know how big that fraction is-- we know it's in the cortex, it's perhaps certainly in the back as we've heard-- inside that there will be one set of the neurons connecting in a certain way in a particular state to which there will be this fantastic shape corresponding that is the integrated information structure corresponding to it and lots of other flickering patterns of activity with very complicated mechanisms, like the cerebellum or elsewhere in cortex will not correspond to any such big and overwhelming shape.

It's going to be local little fragments that are important. They will hit each other. They will influence each other, but they will never be that beautiful shape which I am not claiming is going to be the shape of an elephant but is going to be as big as that. Now one other thing I want to add to this in response to what Ned said before, so no theory of consciousness would indeed be a theory of consciousness, I would assume, if it does not pretend to explain what the quality of an experience is, why red is different from blue and from a sound.

And the basic idea, which we didn't really have time to explore, is that indeed an experience, its quality in all its aspects, is a shape in qualia space. It's a shape made of informational relationships, of which I showed very few. I showed informational relationships generated by three binary gates coupled together. That's really nothing. But something more sophisticated than that can do that. It's not a matter of how many units you have or how many mechanisms you have, it depends how they are put together. And it's actually very difficult to put together something that can generate big shapes.

Now one last observation I want to make on this is then-- let's take again inspiration from neuroscience-- and I showed you one slide in which there was an island of cortex, of fusiform cortex, preserved in a sea of nothingness. Everything else was destroyed. And I said I would like to know whether that patient is experiencing pure color, what you would call a representation of color, which is a pretty complicated thing to do. You need a sophisticated network going from the retina to fusiform cortex to actually extract color and color constancy and all of those things. So will he be experiencing red when you show him a red pattern and nothing else?

Versus, there is a nice converse case which was recently described of a person who has a lesion, probably genetic, in the very same area, but everything else is fine. So that area now is off as opposed to on. And that is a person who experiences everything like you and me, more or less, but just completely lacks color. So these are achromatopsia patients with a very specific lesion. So now here we have the two cases. In one case there is a very nice mechanism that can have a representation of red, if you want to call it a representation. It could tell red apart from any other color perfectly nicely.

But I would claim it has not the slightest idea of the fact that red is a color. It doesn't know about black and white. It doesn't know about shapes. It doesn't know about space. It doesn't know about thoughts. It doesn't know about anything. So a color cannot be a color if you cannot compare it to all the other things it is not. The other guy knows all those things. That's why he's conscious. This context is gigantic for him. He's just lacking a little set of informational relationships, which is what adds color to the overall picture. But there is a big difference between a picture without color and no picture at all.

ULLMAN: Okay, I think our time is almost up, but I'd like to open it up if people have some questions, or potentially answers, that would be a good thing to [INTERPOSING VOICES].

[LAUGHTER]

Is the microphone on? People down there. Select one. Good.

AUDIENCE: I'd like to hear the panel's thoughts on the distinction between, if they see any, between consciousness and self-awareness. So is consciousness a necessary but not sufficient requirement for self-awareness? And if you had a machine that you gave the Christof Test to, and you gave it all these pictures and it could identify all the discrepancies and problems with it, what would it do if you showed it a picture of itself? Would it say, "that's me?"

KOCH: Well okay, so you're using two words. So there's consciousness and awareness. Now Ned has written extensively on that. Maybe I'll let him comment on it. So if we just talk about the difference between consciousness and self-consciousness, to stay consistent, I think the difference is only in kind: it's consciousness reflecting upon itself. Let's say you have a body-based representation, and that's probably how it evolved, in my somatosensory cortex, and then this is included in the conscious representation.

Then you begin to a recurrent connection to reflect it upon yourself. So I think it's just a special case of consciousness itself. And of course, there are some patients who have lost consciousness, self-consciousness, in Cotard syndrome. They might think they're dead. They might think their skin is rotting, et cetera, yet they still have access to other forms. They can perfectly well see color. So self-consciousness in this [INAUDIBLE] is just one particular aspect of consciousness.

BLOCK: Yeah, I would agree with that. But I think it's important to note that we have some evidence that in some cases of consciousness the self part is suppressed. So there's reason to think that self circuits are deactivated or less activated in dreaming. And Rafi Malach has shown that so-called self-related circuits are deactivated when doing a very demanding sensorimotor task where the subject is clearly conscious of the stimuli that are being used. So of course when we think to ourselves-- now I'm looking at something, I'm conscious of it-- those self circuits are very active in those cases. So we think that's the paradigm, but maybe it isn't.

ULLMAN: Let me also add that I think that coming to machines, that at least some form of ability of a system to reason about the system itself is not very magical, and I don't think that by itself leads to some unexpected consequences. I don't think it's a big problem to have a computer program that reasons about some structures of the program itself. And I don't think that this by itself will suddenly open the door.

And we have reasons to believe that the ability of a system to self-represent or to self-reflect, at least on some aspects of the system itself, it sounds sort of very special, but I'm not sure that it would lead spontaneously to something extraordinary just putting such a capability inside an artificial device.

Let's move to another question, but is there a microphone? Thank you.

AUDIENCE: I've heard it said that a fish has no word for water.

[SIDE CONVERSATION]

AUDIENCE: I've heard it said that a fish has no word for water in the sense that consciousness is the water that we swim in. So I'm wondering in the scientific method what we do is we externalize an object in order to study its properties systematically, so I don't see how we can externalize consciousness because we have to find a place to stand outside of consciousness in order to look at it and vary its properties and analyze it systematically. So it doesn't seem to me, for example, consciousness is the basis of experience. So how can we use experience to study consciousness? I don't see that anybody's even--

BLOCK: Do you use thought to study thought?

AUDIENCE: Pardon me.

BLOCK: Do you think we can't use our own thinking to study thinking? What's the problem?

AUDIENCE: Well, maybe in the sense of the Aristotelian notion that certain forms of knowledge are appropriate to certain objects of knowledge. So we can use our thinking to study thinking but not as a scientific method.

KOCH: We empirically do it. Every psychologist, every time you put a subject in front of a monitor and you manipulate the image, the color, the motion, and you query the answer, does it move to the left or right, is it red, green, all of that, we probe conscious experience. The subject is having a particular type of conscious experience, and we're asking some information about it.

And we can do it. It's highly reliable. You can do it in a large population. You could do it in animals. You get highly reliable results. All of that shows us you can actually probe the structure of conscious experience under reductionist laboratory conditions. It can be done. It's been done for roughly 200 years.

ULLMAN: Okay, can we have one in the front? Over there. If we can move the microphone.

AUDIENCE: I have a microphone.

ULLMAN: Very good.

AUDIENCE: May I ask a question of Christof? So I'm wondering about the "what's wrong with this picture" task and how it applies to other animals. I mean you can't imagine a mouse doing very well with that task or a moray eel. So what does that task say, or your definition of consciousness, say about other species and consciousness there?

KOCH: That's a good point, John. So this is purely, I mean I emphasize, to try to compare human level of consciousness and machines. Because a mouse-- all of its behavior tells us-- its similarity to us-- its close evolutionary kinship to us tells us a mouse is also conscious. Its conscious states are going to be much less complex.

It probably doesn't sit there and think about the past and project itself in the future, but it has this complex integrated state pertaining to its sensory domain, which is vision, which is certainly touch. It has a very large somatosensory cortex and of course olfactory cortex. Its world is probably much more redolent of odors than ours. So you would have to do a similar test within the olfactory domain, where you create the odors in the natural world and then you do something that obviously is highly unnatural. I've thought about it. In principle, you can train a mouse to also do a similar test. It can be done.

ULLMAN: It would be difficult.

Okay, last question. Can we have one in the front? This gentleman behind you. Right there. Thank you.

AUDIENCE: I'm interested in the kind of consciousness one has in experiencing a good mood versus a not-so-good mood. Now in the case of visual experience, one can see that the visual system has the capacity for discriminating a huge number of states. On the other hand, it is not so obvious that it can experience a vast number of different moods.

For example, think of relaxed moods that are pleasant but not intensely aroused. It seems that this is the kind of consciousness that exists. On the other hand it does not seem, at least from my point of view as someone who does not do experiments on the brain, that there is a huge capacity for different informational states. So it seems that intuitively this presents something of a problem to the view that the two of you have been presenting.

TONONI: That's excellent. If I may answer that. It is true indeed that within a specialized domain the quality of experience may not have that great a range. There may be just a few moods, as opposed to a huge number of visual pictures, just as you say. But what matters here is that those moods are in the context of everything else. So in other words, if you had a machine that just was able to attach a valence of good or bad to the world divided into two parts, that would definitely not be conscious. It would be minimally so, just like a machine that can only tell light from dark and nothing else.

But if you add on mood to the vision of the world that we have, which is indeed enormously rich and for us very visual, then indeed mood can become conscious because it enriches one particular corner of the shape. And the very interesting possibility, which has not really been investigated, is whether the perception of mood, and for that matter pain, may actually be a newcomer in experience.

It was always there, probably, for a long time there to guide behavior. But that it was incorporated in what we actually experience, that may have been a late phylogenetic development that made it possible to be consistent, have that largely visual world picture we have to which we need to add a valence. So it's a very good point. Thank you.

ULLMAN: Okay, very last question before we have to adjourn. Okay, if you can give it to one of the people there. Thank you.

AUDIENCE: Could you comment on claims that meditation creates another third kind of a state which has been observed in images and how it relates to the other states?

TONONI: I am not an expert on meditation, although we are studying something of that. But I have been told and having spoken to meditators-- you know there are, of course, many kinds of meditation-- but the most interesting from our point of view I think is there are some states in which supposedly the meditator is highly conscious but let's say of nothing at all. So hardly any content, but high levels of consciousness.

There is an interesting prediction that comes from looking at consciousness the way we discussed today which is if you have a very complicated system in terms of its mechanisms, but it happens to be in a state in which let's say none of the relevant neurons are firing, well that's a very special state. Inactivity is a state, and it generates a shape in this informational space. And maybe that might be the state corresponding to I'm very conscious but of nothing at all.

ULLMAN: Okay, with this let's conclude. Thank you very much, everybody here on the panel.

[APPLAUSE]