Computation and the Transformation of Practically Everything: Current Research (Session 2)

KAASHOEK: So, good afternoon. There's no break. We keep on going. This is MIT. More information per second or minute, as much as possible.

So we're going to start on the next session, which is a research session. So a number of the [? CCL ?] researchers will present work they're currently working on. So the first speaker is Leslie Kaelbling.

KAELBLING: All right, thank you. OK, so I'm going to talk about robotic intelligence. So most of us have thought about robots to some degree. And it used to be that the dream of what robots could do for us was big and romantic and exciting. Right, all these robots in the movies. If you look at the commercial reality of robots, right, there are sort of robot automata in factories that will weld whatever goes by, or, say, simple vacuums. But the research frontier is getting to be actually right at this moment pretty exciting. Autonomous vehicles, soccer players, and so on.

So what I want to think about is how to push that research frontier in robots to making them really flexible and really intelligent.

So it used to be the robotics and AI really worked pretty closely together. And they've come farther apart. So the question is, you know, is it time to try again? So, you know here's my robot-- oh, my animations aren't building. Darn. OK, anyway, the punchline is ruined. Here is this robot, it's looking at the kitchen. So I'd like to have robot that could come and clean my kitchen. My kitchen looks better than that. I hope yours does. Maybe, maybe not.

But imagine the robot has to come. And it looks at this world. And this world is really complicated. It's very high dimensional. How many objects are there? Lots. What state are they in? It's very hard to describe. Is this stuff down there on the left moldy? I don't know. Right. There's all sorts of stuff going on in that scene, that if the robot's going to do a good job of dealing with it, it has to really be able to understand that, think about it, plan, and take appropriate actions.

So how can we make that happen? Here are the things that cause us trouble as roboticists trying to make intelligent robots. There's-- it's not fear, uncertainty, or doubt at this time. It's far horizons. The robot, the scale at which it might have to plan its actions could be minutes or hours or days or weeks. If it lives in your home and tries to keep the kitchen stocked, there's serious uncertainty. It doesn't know exactly what's going on anywhere. And the place is really high dimensional.

So how do we address these problems? So I think that we have actually right now an exciting situation. Because while AI and robotics kind of separated for awhile, a bunch of technique got developed. So there are a bunch of, now, technical levers that we can apply to this problem. We have ways of describing very complicated situations compactly and effectively. We have explicit representation of uncertainty. What do we know? And what do we not know? Which is crucial when you're in a complicated environment that's not totally clear. And we have ways of doing approximation.

So I'm going to do now in my five minutes is show you just a couple examples of applying these ideas to real robots. OK. So one thing is in a system that has to think about what actions to take, it needs to take into consideration explicitly what it knows and doesn't know. Right. If you're lost in the city, you might act differently than if you know precisely where you are. Some of you might not, but most of you would. OK, so how do we do that in a robot?

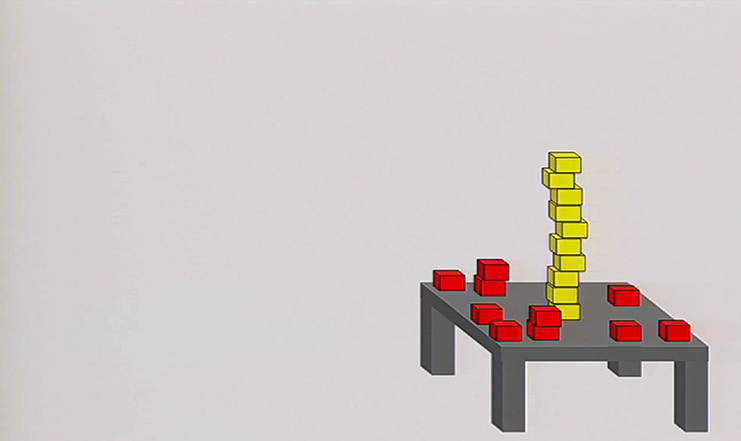

Imagine that we have this robot. Maybe vision told it where that object was, and it needs to pick it up and put it away. The first time it tries it, it works great. It tries it again the next time, looks like it's going to work again, but no.

OK. So there was uncertainty in the position of that object on the table. But the robot had some tools that it could use to fix that. It can feel things with its fingers. So what is it tries to estimate the position of that object on the table. And so it's going to try to estimate where that object is. It gets some information from its fingers. So it starts out saying, hm, I'm not sure where that is. It reaches down. Touches a finger. Update its belief. Reaches again. Touches two more fingers. Now it has a better idea where that thing is. And now it can go and pick it up.

Oh. Well actually, it only localized it in one dimension. But what if the other coordinate on the graph matters? Well, it might have to think about that. In some cases that other coordinate won't matter. In other cases, it will. So we apply a combination of probabilistic reasoning, AI search, a bunch of things to search in the space of what the robot knows about the world. And we're able to pick up really interesting and complicated objects.

So here's an example of the robot. It doesn't know where that drill is initially. So it plans to reach and touch it in places that will give it information. Ultimately, it wants to pick it up and drill with it. Or at least activate the trigger. So there it had to reorient it, because it wasn't very well oriented. It touches at the base because that gives it more information than touching it on the handle. Eventually, it touches it one more time at the top. Now it says I know where it is. And yay!

OK. So that's actually, for you, that's easy. For the robot, that's really hard. OK. So. But it's able to incorporate information it gets. It's able to take actions to gather information. By modeling its own certainly, it knows when to say OK, I've got it, let me pull the trigger.

OK. So what happens when the world gets much more complicated? Right. I'm trying to plan to manage the whole house. I have a combination of low level geometric information. I have sensors that give me Gaussian noise. I also have closets I haven't seen inside. And I have goals that are very abstract.

So we address this problem by combining logic and probability and geometry together. Often these people fight with each other. Oh, you should use logic, no, you should use probability. The answer is we need every tool we can get. And we can combine them into a coherent framework. So we use hierarchy as a way of dealing with very long horizons. By taking hierarchical approaches, and in particular, taking them aggressively, our robot sometimes does stupid stuff. Like pick something up and walk into a room, realize it needs to do something else, put the thing down, do the thing it needs to do, pick this up and go back. So it's suboptimal. I don't suppose any of you do that. So it's OK.

So we're going to trade optimality for efficiency. So we can make plans that are hierarchical that adapt to what's going on in the world. I'm buzzing through this now.

Imagine we have the robot that needed to clean the house. Needed to put stuff away. It had to find things. It makes a plan. Here's a picture of a tree representing the plan that this robot's executing. So here's a simulated robot. It's supposed to pick up all the time and put it in the closet. It doesn't know where the positions of the things are in advance. It's doing the motion planning, figuring out what to do with its joints. At the same time that it's reasoning about what it knows and doesn't know about the positions of things in the world.

OK, this movie goes on for a long time. But eventually, it will go along, it will find the vacuum, it will clean the room. I'm embarrassed by the graphics, especially given my predecessor. But oh well, so it is.

OK. So now we have a new robot. This is robot-- it's name Mens et Manus. As you kind of understand it, we call it M and M for short. And some people think it should be called Eminem. And we're getting it to do things using the same kind of hierarchical planning method. Integrating information it gets from its cameras with information it gets from its touch sensors. So that it can plan to do things like, this is a very simple example, move the soup can out of the way and pick up the box behind it.

Our goal for the, you know, our short term research push goal is finding the pickles in the back of the refrigerator. That problem exemplifies everything. Right, there is geometric uncertainty. There's clutter. There is difficult seeing, difficult time seeing things. It has to move stuff out of the way. So we have to integrate every tool we have in terms of sensing and reasoning, motor planning, motor execution, and so on to do something like that.

So our hope is that, you know, in the future, these ideas of using compact representations for very complicated problems, explicitly reasoning about the uncertainty that we have in the world and taking action to alleviate it, and being willing to use hierarchy and other things that lose optimality but gain us, actually, feasibility, will ultimately let our robot join the pantheon of great robots in the world. Thanks.

[APPLAUSE]

KAASHOEK: So this is another rapid fire session. So no Q&A directly after the talk. But all the speakers will be around at the break. So if you have questions, you know, please find them. So I'm happy to introduce our next speaker, which you've seen before. Fredo Durand.

DURAND: Hi. Let me tell you about computational photography, which is a new field at the intersection of computer graphics, computer vision, optics, and image processing. Now, we're trying to make it possible to see the world better, to capture richer visual information about the world. And the big message to get from [INAUDIBLE] is that it's more than just a post-process after you've taken the photo. It's about changing the way we capture the information and changing the hardware as well as the software.

And I want to warn you that most of the word that I'm going to describe was done by other people from the field in general. So what are the traits that we used to take better visual information about the world? Well, one of the tricks we like is to combine multiple images. If one image doesn't quite do the job, such as cases like here where the contrast is too high in the scene and you get overexposure problem, so one of the things we can do is capture multiple exposures at a different lighting levels that do capture the whole information. And then combine them computationally in order to create an image that has all the information. Something that would not be possible with optics alone.

Also example of combining multiple images that many of you must have tried is panorama stitching. Now, one image doesn't have a big enough field of view. So we can capture multiple images in multiple directions and then put them together in the big panorama, blend it nicely, and get an image that has a much wider field of view. And also a resolution that would not be possible with physics alone, with physical senses alone.

And this is now going further, we can combine images taken from different viewpoints in a nice 3D registration. This work that I'm going to show you is done by my colleagues at the University of Washington in Microsoft research. [INAUDIBLE]. And here we show it, in a plaza in Prague. And you can see that you can navigate from photograph to photograph because they've all been registered together using modern computer vision technique. And it gives you a much richer representation of this visual scene.

Another thing that we can do with computation is compensate for other optical limitations such as aberrations. So here you see first an image that's taken by the lens and displayed directly. It's a little soft, the lens is not perfectly sharp. It's really hard to make a lens that's perfectly sharp. Especially at the high resolution that sensor have today. But using computation, after calibrating the lens, we can remove this blur and get the image on the right. So that's a case that's semi-easy because we know the blur. We can calibrate the lens.

But recently, new results have enabled removal of blur that's unknown. For example, if you take a picture very often, you press the button and you move the camera. As a result, you get a blurry picture. Especially in low light environment. You can try to use Photoshop and sharpen, but that doesn't quite work because it doesn't know the blur.

Well, my colleagues Rob Fergus, Bill Freeman and others have developed a technique that allows you to automatically identify the blur and remove it. And you get results such as the one that you have here.

Another issue with blur is depth of field. If you take an image, you have one plane that's in focus, and the rest that's behind or in front might be blurry. So this is an example where by codesigning computation and the hardware, we can do a lot better. Because we could try to use computation alone and apply one of the techniques I described earlier to remove the blur. But unfortunately, the focus blur is a very nasty one, and it's really hard to remove.

What we can do instead is change the optics, and build a new lens like [INAUDIBLE] did that is blurry everywhere. So that doesn't quite solve the blur problem, but, in fact, it does. Because this blue is much easier to remove computationally. And after computation, you can get the following image that is sharp everywhere. And really, I think it's in this new type of approach where optics is designed to make computation easier that we're going to see big progress in image quality.

And along that line, another thing we can do is not form the initial optically. So instead, a number of people, including Ted Adelson here and [? Ran ?] Wang at Stanford have proposed to not form a 2D image optically, but to instead recall the whole set of 4D light rays that reach a camera. And once you've recorded all those light rays, you can postpone image formation and do it later. And possibly change the imaging parameters such as the focusing distance. And I've demonstrated that by putting a microlensal ray in front of the sensor as you can see here, they can capture this 4D set of lights rays, and then decide the focusing distance later.

So this is an example of results. This capture, it's a single capture. You could think that the focus is wrong, it's not on this vision. But because the image formation is computational, we have the full set of 4D lights rays. You can refocus very easily, and you can focus it at any distance that you want. So here, again, the hardware and the software are working together.

And finally, the last thing that you can do with computation and imaging is to make invisible things visible. So the first example I'll show is [INAUDIBLE] that was [INAUDIBLE]. We call it a motion microscope. So what is it takes motion that's too small to be seen. Like, for example, the beam here is moving a little bit up and down because the person is playing on the swing. But it's very hard to see, especially if you're in the back.

Well, we have a technique that analyzes motion in the video, and can rerender the video with the motion removed. And that's what you see here on the right. And now it's very obvious what's going on structurally. And [INAUDIBLE] this technique has a lot of applications to science and engineering. And we're excited to have provided it to a number of colleagues. In particular, we have colleagues using it for seismic data to see the subsurface is collapsing when they start exploiting oil rigs.

And finally, my colleague Ramesh Rashar at the Media Lab is working on very exciting femtosecond imaging. Also with the goal of making invisible things visible. And in this case, he tries to take images of things that are behind the corner. So without direct line of sight with this, using time of flight data, he tries to reconstruct the information inside the room by taking pictures outside the room. So at this point, it's still at the laboratory level. You see here his experimental setup is a bunch of lasers. But in the future, it might allow us to see behind corners.

And let me conclude by saying that this principle of combining new hardware and new computation is very general. It actually started in other areas such as radar, astronomy, microscopy, and medical imaging. All these areas where the physical challenges of really hard. And using physics alone, you can't get the image quality that you want. So you combine computation and optics in order to image things that would not be possible before. All right. I'll conclude here. Thank you.

[APPLAUSE]

So we're going to switch topics again and look at another area of research. And it will be presented by Regina Barzilay.

BARZILAY: It's actually the end of the talk. Sorry.

Excuse me. This is not some setup. I'm serious. This is the end of the talk. Can somebody please rewind it? I can go back but it will take forever. It's now going backwards, it's great. But can be just do it fast? You can see my whole talk. OK.

[GASP OF EXASPERATION]

OK. The title of the symposium is Computation and Transformation of Practically Everything. So I'm sure that all of you are convinced that this is true. But apparently, there are some areas where people are really not convinced. And that's why I decided to talk about those areas.

So in 2002, I picked up a book. It was before I came to MIT so I had time. It was a book about undeciphered languages. And also this book, in the very beginning, in the introduction stated that machines cannot be used to actually decipher languages, because they don't posses the logic and intuition required to make this task. So I read this words, and decided that those are really fight words, and we have to decipher language.

So it took us a while. Because I wanted to find a language where, first of all, you have enough electronic data. And where you know what are the answers, so you can compare against humans.

And in 2006, I think, we found such a language. This is you Ugaritic. You have quite an amount of data, it's 30,000 words. This is a very old language. It's a dead civilization. It was deciphered in the 20th century by theologists who were also code breakers during World War I. So they were sort of mathematicians. And it closely relates to Arabic, Hebrew, and other Semitic languages.

So the question is how you can go and decipher this language? And this is not Rosetta Stone scenario. You don't have parallel translations. So the way humans can decipher languages is because a language which come from the same family, they have what we call cognates. Those are the words which sound similar, but they actually fit in very differently. And if you can identify cognates for the language that you don't know to one of the known languages, you can decipher the language.

Another type of intuition that humans use is that actually languages which come from the same family have alphabets which are very close. They come from the same source. So even though all those letters look differently in Semitic languages, they do call the same sound.

So the task of the decipherment computationally is a full one. You're given some tablet which you cannot read. You have access to any human language that you do know. And you need to automatically predict the mapping between the alphabets. And you want to predict the mapping between the cognates. And that would allow you to actually read the dead language.

So how can you do it? So humans who did it, and it was very painstaking effort. It takes decades to decipher. So the first thing is they start doing is they take the alphabets, and try to find the mapping between the alphabets.

And the assumption here is if you really know how the letters much you can decipher what's. So if you take two related languages and you know that A and B occur very frequently together, you would assume that if you map them correctly, A prime and B prime would occur together. So you can go and try to have some guesses of alphabet mappings.

The next thing that you do, you have some assumption what are the correct alphabet mappings. And the next thing you will do, you will try to find some mapping was. It will obviously not work. You go back and forth and back and forth until you are tired and died. And then somebody else continue doing. So it takes literally decades to do it.

So just to give you an idea of how it might work, I brought you a question. So this is at a language which we call Windings where every letter can respond to a single English letter. Which is different, definitely an oversimplification. So just to start you going, this is a task for you. France, we're not going to move until we solve it. I give you one letter. This is letter D. Can you guess other letters?

Sorry. So the things that you can guess, it may be here. So what is the next letter you would guess? E. So you would guess a next letter, it will be E. Can you guess the next letter. My time is running. It's OK, I'll give you a hint. Can you see the next letter? You can say that those are the two rules. Those are the rules of verbs. Which suffixes can rule stake? S. So this next one should be S, correct? And then you could get this is death, and so on and so forth. I helped you.

So in this particular case, you can see that what we did in order to decipher, we use our knowledge of morphology. We use it in English, you can have suffixes like -ed and -s. We also knew, actually the whole vocabulary of English. That would help us to solve the task.

And that's exactly what the model will be doing. So the model will assume that every single Ugaritic word is generated from some Hebrew word. And there are separate paths-- though we do not know what are they-- for prefix, stem and suffix. And each time you can think about this probabilistic process as corruption. You take a valid Hebrew string, you corrupt it and get yourself Ugaritic.

Now, corruption is a bad word. I better way to talk about today it is just probabilistic string edits. You can think that you have certain probability to substitute two letters, to insert a new letter, or to delete the letters.

And once we have this probabilities, we can actually estimate the likelihood of certain rewritings. So that's what we want to get.

Now, you could uncover, sort of talk about all this probabilities in a model. There will be a lot of interdependent probabilities. And if you know them, you can decipher them. But the difficulty is that we actually don't know them, and this is our job, to find them. When the only things that we have are the letters in Ugaritic. And here what you see is the full Hebrew Bible.

And just to give you an intuition of how this probabilistic model works, you take some word in Ugaritic. You have your current estimate of how you can rewrite this word. You will generate a lot of possible rewritten corrupted words in Hebrew. Then you're going to go to Bible, and choose which one actually appear in the real Bible. So some of them appear, some of them don't. And then you can look at this new subset of words. Can usually reestimate again your probabilities of string edits at the latent level. Does it make sense?

So this is like a very, very big model. And each time when you're doing it, you're doing it millions of times. You're taking one word, creating a huge list of possibilities, and checking in Bible.

So one particular, just to give you an example of what kinds of difficulties, computational difficulties we had. We said we definitely cannot do it directly. It will take forever to compute. So the idea is if we had was actually something that we typically study in the introductory computer science classes, but you know, we forget once we get our Ph.D., is to represent the rewriting as just a finite state autodata. So you can represent all the writing as a weighted finite state autodata. You can take the whole Hebrew lexicon and represented it as another finite state autodata. You can actually crunch them and minimize them. You'd be surprised how much you can cut by just crunching it. And then you can do the intersection between these two autodata. And you're going to get your [INAUDIBLE] Hebrew writings.

So because we use this tool, we can do it very fast, exact. And it is one of those tools that help us to do the inference exactly. And there are many, many of the computational tricks in this model. But let me show you how it worked. So we were able to correctly decipher 29 out of 30 letters of Ugaritic. The remaining letter is really, really infrequent. We didn't have enough sort of computing numbers for this letter. On the level of morphine mappings, we get the accuracy of 75%. And at the word level, the accuracy was 60%.

Now, some of you may say is it really great? Correct? Comparatively to Google Translate, maybe it's not great. But if you compare it to human decipherers, it take years and years to get to these numbers. And typically, what happens are still, if you go to the Ugaritic dictionary, some words that people don't know what they mean. So typically, the process of decipherment takes decades until it is finalized. So if you can cut human's time and directly bring them to these numbers, it would greatly help.

So now in my computing slide, I actually brought you some sort of big mysteries. This is one on the left side, is this document from FBI, where FBI asks help from the public to decipher this document which was found near a murdered person. There is a [INAUDIBLE]. And there is [INAUDIBLE]. There are plenty, plenty of ancient and not so ancient screeds that we still don't know how to decipher. So I think it would be great Basal for computation, and I encourage you to try it. Thank you.

[APPLAUSE]

KAASHOEK: Take a deep breath. Store all this information in your head. And then we're going to switch yet to a completely different topic. And that will be presented by Sam Madden.

Hi everybody. Thanks for coming. I'm Sam. Thanks Franz. I'm going to talk today about mobile data and the sort of significance and importance of mobile data.

Before-- to sort of motivate this, so mobile data is data that comes from phones, laptops, other sources like that. I just want, you guys have seen a lot of numbers today, throw out some more numbers. So according to this site, the ITU, there's going to be five billion cellphones in service in the world in 2011.

That's a pretty staggering numbers. You think about five billion, right, there's something like 6.8 billion people in the entire world. OK, now almost-- this doesn't mean that everybody has a cellphone. It means, I think, there are lots of people who have multiple cellphones, actually. But to put this number in perspective, this is more than the number of people who have shoes, toilets, toothbrushes, or electricity, OK? Cellphones are incredibly ubiquitous and they're only getting more so.

This same company estimates that there's a billion mobile broadbands. So basically, internet equipped cellphones that are out there. And again, this number is getting larger and larger. And this is really an incredible source of data. And the research question I'm interested in is what can we do with this data. One source of data comes from things like photographs, the sorts of things that Fredo talked about. Or conversations between people, or just people getting on the internet.

I'm specifically interested in the question of what to do with the sensor data that comes from these phone. So many of you know that phones have a variety of different sensors on them. Things that can estimate your position like GPS, movement with an accelerometer, gyroscope to estimate heading, various different kinds of sensors to detect proximity to two people who are talking to each other or near each other.

And so my colleague Hari Balakrishnan and I have been working for several years on a project called CarTel. Which is really, this is CarTel as in car telecommunications. Not drug cartel. It's about sensing phones, sensing roads using phones. And the real idea is that imagine you have a bunch of users out on the roads driving around with their phones. What kinds of things can we measure about the roads?

And so we built a number of applications. Some of them are around traffic monitoring. Detecting what traffic looks like by looking at how fast people are driving at different times on different roads. Looking at things like pothole mappings. So we built an application that uses the accelerometers in phones to measure vibration. And then we automatically detect when a car runs over a pothole, and we upload that to a map. So you can go to our website and see a map of as of 2007, the largest potholes in the city of Boston. As many of you know, there's lots of particles here.

Network monitoring. So we've looked at using these phones to actually measure the quality of wireless networks or cellular networks as users drive around. Where's connectivity? This is the sort of automating Verizon's can you hear me now commercial.

And finally, things like a commute portal. Building a personalized portal or environment that allows users to see what they're driving habits look like, to compare their driving habits to other users to see how they're driving changes over time. Maybe to understand if their recent efforts to take the subway more frequently are actually resulting in reduced hours in the car.

There's lots of other applications of mobile data beyond just the kinds of things I talked about here. So in the area of cars, things like smart tolling, insurance where people or your insurance company actually-- this is kind of a scary application-- might directly measure how much driving you're doing and use that to bill you, rather than billing you based on some rough, kind of, demographic method. Or things like urban activity monitoring. So this is a company called City Sense that builds little reports that show what activity in the city. You could imagine this being useful to urban planners or people doing various kinds of entertainment or marketing campaigns.

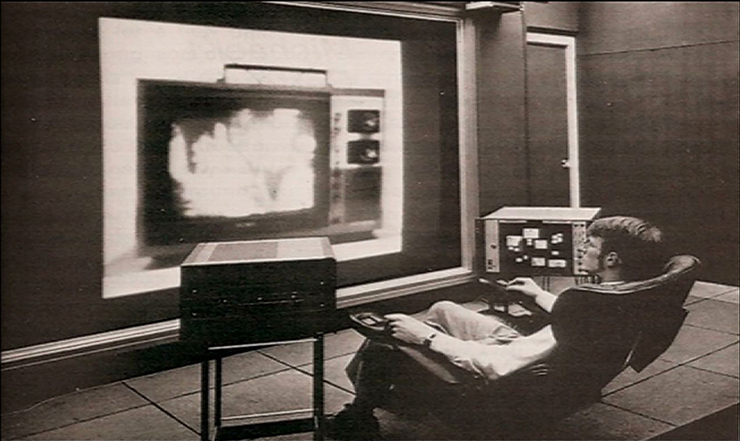

More generally, sensors, I think phone sensing has really broad applications. So things like personal medical monitoring. Or that kind of memex thing, the memex vision that was mentioned in the beginning today. This is Vannevar Bush's idea that you have a device that records every moment that's going on in your life. His vision was a giant desk that could do this. And we're really not very far from the little iPhone that you carry in your pocket being able to record every moment of your life.

Right. So the applications here are-- this, sort of the, I like to think of this research area as being sort of sensor data analytics. I'm a data person. I come from the database background. And specifically, in the area of CarTel is about location analytics. How do we take in the case of CarTel, we've had about 100 cars on the road, we've collected some 500 million data points from these cars over the last three years. We got this massive data set. How do we understand it and makes sense of it and process it? In particular, how do we build software they can kind of chew on this data and produce interesting results from it.

And this touches a lot of areas of computer science. And hopefully I'll give you a flavor for that with just a couple of research results today.

So our goal is to develop software, to store and efficiently access this data. I know a lot of you guys out there are thinking, man, aren't there terrible privacy concerns with this? Again, a part of our-- I feel like a part of our job as computer scientists is to think about how to do this in a way that provides control over privacy. In our project, we've been looking at this from a couple of different angles. One is to build tools that try and automatically anonymize information as it's collected. So for example, we don't tell people where you were even if we're using your data to produce a report about what traffic looks like.

The other, sort of, more pragmatic, I think, approach is that in all the systems that we build, we provide users with the ability to see what data has been collected about them, and to delete any data that's been collected about them, and to stop data collection at any point in time. This is an example of our personal commute portal. You can see that there's this column of buttons here that say delete, delete, delete, delete. This means, in this case, this is Hari's driving log. He can go into the system at any point in time and remove data that's been collected about him.

So the sort of privacy theme is control and transparency. Allow users to control what's collected about them and provide transparency. And I think, and one of the things that I wish that cellphone providers would do is to adopt this kind of policy. It's very frustrating. We don't know what Google knows about us, we don't know what AT&T knows about us. And I guarantee you they know a lot of scary things. Because this kind of data is a little bit frightening.

All right, so anyway. Back to some of the other research challenges that we're working on. The sort of fundamental idea that we're trying, I'm going to talk about is building tools that allow you to extract, and store and query information about trajectories. So think of a trajectory as a time series of positions and some sensor data.

So I'm going to talk quickly about two ideas. One is a system called CTrack for extracting this trajectory data from a series of raw sensor data and something called TrajStore which is not storing and querying it. So CTrack, the challenge in CTrack is that we've got a collection of raw data points that have been collected from these sensors. Imagine these are locations. The idea with CTrack is we want to take these raw data points and convert them into a list of trajectories. Think of this as a list of roads that the user drove on. OK.

So these little red dots here, the red is representing a series of raw sensor data that came from a smartphone. In this case, it had a GPS lock. You can see there's a big gap in the middle of this. And the reason there's a gap is because the users driving in downtown Boston. And there was some big, some set of buildings that occluded visibility to the cell tower. So we lost some information. We don't-- the raw data itself doesn't directly record the track. But we would like to actually know, and a person to look at this and infer that the car probably actually drove on those green lines. This is a harder problem. But it's a similar one.

In this case, each little red dot is a position estimate inferred from seeing a cell tower or a Wi-Fi access point. Now, just connected together in time all those different red points. You can see that this track is not at all close to what a real set of roads that were driven. But we'd like to convert this into a path or trajectory that the user actually traveled.

So the idea, CTrack is an algorithm that does this. To quickly outline how it works, it first subdivides all of space into a set of grids. It computes using this algorithm that was mentioned by Tom [? Latten ?] at the beginning of the session today, the Viterbi algorithm, to compute the most probable sequence of these grids that the car actually traversed. Respecting constraints on the fact that a car can't magically teleport a very large distance, it can only travel at a maximum speed. And it does some smoothing on top of this. And finally, it runs another instance of this Viterbi algorithm to actually infer the most probable sequence of road segments the user drove one.

So what we've been able to do is go from this noisy thing to this much more cleaned up looking trace. In this case, the red line is what our system inferred, and the black line is where the user actually drove. So we haven't done a perfect job. We've gone from this very noisy thing to this much less noisy thing. OK.

So quickly, TrajStore is a system for taking all these hundreds of thousands of trajectories from these cars and storing them and letting users query them. Imagine that this rectangular, set of rectangular regions here represent regions of space. OK. They're subdivided by latitude and longitude. And suppose we have a collection of data files stored on our computer that represents the data contained in each one of these rectangular regions. The idea within TrajStore store is that we're going to take every new trajectory that a user drove. And we're going to insert it into the regions that that trajectory overlaps into. So we'll take each little portion of this trajectory and we'll cut it up and store it in the data files on disk the represent those regions. And now we can answer queries in a very similar way. Simply by if we have a rectangular region like this, looking at these regions on disk, they contain, they overlap that rectangle, and reporting the resulting trajectories back to the user.

So the challenge in doing this is that we need, we want to sort of adaptively split and form these rectangles in a way that minimizes the amount of time that it takes to both insert data into the data structure and retrieve data back. And we've come up with is basically a set of algorithms that dynamically split these cells as the density of data grows goes up or down in each region.

Just to quickly summarize, mobile phones are an incredible driver of new applications and a source of data. I told you about some of the cool applications we've done in CarTel and a couple of the technologies that underlie some of the research that we're doing. Thanks very much.

[APPLAUSE]