Brains, Minds, and Machines: The Marketplace for Intelligence

POGGIO: I'm especially proud of this panel. And thankful to the panelists for allowing us to put together a group of people that perhaps is even more interesting than each of its very interesting components.

The panel idea is to go back to the main theme of the whole symposium, which reads as follows. Over the past 50 years, research in AI and machine learning has led to the current development of remarkably successful applications, such as Deep Blue, Google Search, Kinect, Watson, and Mobileye. While each of these systems performs at human level or better in a narrow domain, none can be said to be intelligent.

So at the end of the symposium, we are going back to this theme. And I want to point out that often successes in science are measured in terms of publications or prizes given by other scientists. Now this is fine, but from the point of view of machine learning, that smells of over-fitting. And it has dangers. So the ultimate test of success for science is really to have an impact over the long term, of course, on real life and, for instance, on the marketplace. And so this panel is a marketplace for intelligence.

Now this close interplay between pure science and application is actually one of the genes of MIT. This is not the way our provost put it in a recent article, but he said, MIT pursues and values the most abstract curiosity-driven fundamental research and the most applied market-oriented innovations. And so, again, this panel is very much in this spirit. You have to show that the science you are doing can lead to things that do work in real life.

Each of the panelists today represents one of the successful systems in the engineering of intelligence, which I listed in the theme of our symposium. They will cover state-of-the-art achievements in our domain of intelligence, as I said, from Google Search to Watson to Kinect to Mobileye to medical devices and to future approaches. And each of the panelists also represents a company, from well-known ones, such as Google, IBM, and Microsoft, to less known but successful ones like Medinol and Mobileye, to really baby startups-- Navia and DeepMind. So behind the companies and products and ideas there are, of course, great people, and I'll introduce each one of them in turn.

So first, Peter Norvig. You know, I find Google Search magic. It is better than any team of librarians I could have had 30 years ago. It has changed so many things in the way we do things. Certainly, it has changed the way I do research. I look for what has been published before, what is known, before I start really trying to solve a new problem.

Peter is director of research at Google. He's a fellow of the American Association for Artificial Intelligence. He has been faculty in various universities. He's the author of a very successful textbook, but also of a wonderful PowerPoint presentation, the Gettysburg PowerPoint presentation, which I recommend every one of you to look at.

David Ferrucci, let's see, I sent this email on February 14, 2011 to David. I said, David, sorry for the many emails. I'm watching the competition tonight-- this was the Jeopardy competition-- I'm watching the competition tonight in a very crowded MIT classroom. Could we invite Watson to come to our workshop? And David answered almost immediately. He said, not sure if Watson can join me, but I plan to attend. I hope it's still good enough. Watson steals the show. Thanks.

David is the lead researcher and principal investigator for the Watson Jeopardy Project. He's been research staff at IBM Watson Research Center since '95 where he led a team of 28 researchers and software engineers specializing in various areas critical to AI, software architecture, natural language processing, and so on.

And I want to say that even more than by Watson, I was impressed by the organizational feat that David pulled off in leading and integrating in Watson so many different fields and people in his team.

Andrew Blake. Kinect is another magic application that just came out. It is this box that is used to play games with the Microsoft Xbox. It recognizes your gestures, the poses of your body. And the brain of Kinect, the machine learning technology which is inside, comes from the team of Andrew Blake, who is the managing director of the Microsoft Research Center in Cambridge.

Prior to joining Microsoft, Andrew was in academia for 18 years, lately on the faculty at Oxford University. He has published several books. He was elected, among other things, a fellow of the Royal Society a few years ago. He's an old friend, a real pioneer in computer vision. And among other contributions, a pioneer of the use of probabilities and machine learning in computer vision, which really transformed the field.

Amnon Shashua, I already introduced him yesterday, so I'll be brief. He holds the Sachs chair in computer science at the Hebrew University in Jerusalem. He received his PhD in computer science at MIT.

He worked on computational vision, where he pioneered work on the most mathematical part of computer vision, which is really geometry, multiple view geometry, bordering on this part of mathematics called algebraic geometry. I'm proud to say that Amnon was a student and a post-doc of mine. He's a great friend and a great colleague. And I said yesterday, I'll repeat today, that Mobileye is on top of my personal list of the successful applications of machine learning and computer vision in just the very recent past.

Demis Hassabis is a Wellcome Trust Research Fellow at the Gatsby Institute in London and a former well-known computer games designer. He was a child chess prodigy. He created the classic Theme Park for Electronic Arts, one of the best selling games at the time. And after selling a game company, he started a PhD in cognitive neuroscience at University College London.

And he recently co-founded a new high tech startup to conduct research into general AI techniques. Among other things, he has won the International Mind Sports Olympiad five times, I think. He's a visiting scientist in my group, a wonderful dreamer of [INAUDIBLE] dreams.

Vikash. Vikash is a co-founder and CTO of Navia Systems, which is a startup building probabilistic computing machines. He received his PhD at MIT in [INAUDIBLE], a BS, was a student of Josh Tenenbaum. His PhD dissertation received the MIT award for the best dissertation in computer science in 2009.

I still look at him as one of the bright students that make life challenging and fun at MIT for me. He's a great scientist, an entrepreneur, trying nothing less than changing the current paradigm in computation. You will hear from him how this will happen.

Kobi Richter. Kobi is one of these very rare people-- I know of two of them, including him-- who is very good at everything he does or did from flying fighter jets to all kinds of sports to art to science to business. He's a gifted multidisciplinary researcher in computer science and neuroscience with a PhD in broadly speaking neurophysiology from Tel Aviv.

He started recording from visual cortex while at MIT at about the time he arrived here in '81. Among other things, he was helping me negotiate with tough-guy-type used car dealers in Boston at the time. And at the same time, he was programming on the Lisp machines in my office in the AI lab and starting a company with Shimon Ullman in the field of computer vision called Orbotech, which is still a world leader in the field of automated visual inspection machines.

And Kobi went on to develop new designs for coronary stents helping to create a dramatic transformation of therapeutics in cardiology over the last decade.

So with this, I'll ask Peter to give his presentation, around 10 minutes.

NORVIG: Thank you.

[APPLAUSE]

OK. Welcome all. Now one of the undercurrents of this conference has been let's compare the good old days to today and see how things are different, sort of this idea that maybe we missed something. And maybe things were great back then.

What I want to talk about, and maybe some of the others will talk about, is the great things that are going on right now. And yes, there is an opportunity to do more in the future to change what we're doing. And there's lots of good opportunities in the future. But there's also lots of great things going on recently and going on right now.

And since this panel's supposed to be about marketplace, I'll focus on the market impact of those great things, rather than the scientific impact. So let's try to estimate the economic impact of Google.

And by this, I'm exaggerating it, and using Google to stand for more than just Google itself and stand for the whole infrastructure of the web. Because what Google does is enable you to get to the web in the same sense that trucks have a big economic impact because they deliver all our goods around the country, but they don't make the goods themselves. It's the same thing for Google. It's a transport system.

And Hal Varian took a crack at this at the Web 2.0 Conference recently. And you can look up his numbers. My numbers are going to be slightly different because I'm going to do the whole world, whereas he was just doing the US. But the main thing you should note is that there are really big error bars. And any of our guesses can be off by big factors.

Now the first thing to note is that we have advertisers. They pay us some money. They get some value back. And we can calculate that the value back to them is about seven times what they spend. So 88% of the economic value of Google is going back to them, rather than staying with us. And that works out to about $210 billion per year.
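The 88% figure follows from the seven-to-one return. A minimal sketch of that arithmetic, assuming (this is an interpretation, not something stated in the talk) that the total value is what advertisers get back plus what they pay, so their share is 7 / (7 + 1):

```python
def advertiser_share(return_multiple: float) -> float:
    """Share of total value that flows back to advertisers.

    ASSUMPTION: total value = value returned to advertisers + their spend,
    with spend normalized to 1 and value returned = return_multiple.
    """
    return return_multiple / (return_multiple + 1.0)


share = advertiser_share(7.0)
print(f"{share:.1%}")  # 87.5%, which rounds to the 88% quoted
```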

Then the next thing is, what about the values to the searchers rather than to the advertisers? And there's a few trillion interactions per year at the various Google properties. But it's hard to tell the economic value of that. If you watch a YouTube video of a kitten, how much is that worth to you? Well, you know, we can estimate it by saying how much are you willing to pay for a first run movie and divide by some constant. But maybe the economic impact is more negative rather than positive because you should have been doing something useful. So these are wild estimates.

But let's say something on the order of $7 to $73 billion for YouTube videos, depending on if you're willing to pay a penny or a dime to watch one. And then somewhere in the range of $37 to $370 billion for the searches at Google. And I'm only allowed to say that there's more than a billion searches per day at Google. We haven't updated that number in a while. But that's the official number I'm allowed to use.

And how much is a search worth? Maybe it's worth a dime. Maybe it's worth $1. If you have cancer and you discover the treatment that works for you on Google, that's worth a lot more than $1. If you use Google as a bookmark bar and you type in Yahoo and we send you to Yahoo, that's probably worth less than $0.10.

Okay. And then the value to websites. And there's a direct economic impact that there are publishers that show Google ads on their websites and we can measure that at $54 billion per year. But then there's the indirect of all the websites that get clicks and re-directions from Google for free. And maybe that's $250 billion. It's hard to tell.

And so the bottom line is that it works out to somewhere around 1% to 2% of the world GDP has been accelerated through this access to information. So that seems like a good economic impact.
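As a rough check on how the quoted pieces add up, here is a small sketch. The component figures are the ones given in the talk; the world GDP value is an assumption added for illustration (roughly $63 trillion, not a number from the talk), and the search estimate simply multiplies a billion searches a day by a dime or a dollar.

```python
# All amounts are in billions of US dollars per year, as (low, high) pairs.
COMPONENTS = {
    "value to advertisers": (210, 210),
    "YouTube videos": (7, 73),
    "searches": (37, 370),
    "publisher ads": (54, 54),
    "free clicks to websites": (250, 250),
}

# ASSUMPTION: world GDP of about $63 trillion; not a figure from the talk.
WORLD_GDP_BILLIONS = 63_000


def search_value_billions(value_per_search: float,
                          searches_per_day: float = 1e9) -> float:
    """Yearly value of search if every search is worth value_per_search dollars."""
    return searches_per_day * 365 * value_per_search / 1e9


def total_range(components):
    """Sum the low ends and the high ends of every component."""
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return low, high


low, high = total_range(COMPONENTS)
print(low, high)  # 558 957
print(f"{low / WORLD_GDP_BILLIONS:.1%} to {high / WORLD_GDP_BILLIONS:.1%} of assumed world GDP")
```

Under that GDP assumption the total lands a little below the 1% to 2% range quoted, which is well within the large error bars the estimate comes with.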

What else is the impact of artificial intelligence, of machine learning, of statistics, of information technology? One is in experimentation. And Ronny Kohavi from Microsoft Research talks about this. Quick, of these two, which one do you think would be better at getting you to click and make some money for MSN Real Estate? How many think it's a? Raise your right hand. How many think it's b? Raise your left hand. People aren't quite sure. Turns out a was 8.5% better.

Now look at these two. Which one is going to make you buy this product? Right hand if you think it's a. Left hand if you think it's b. And this time a was 60% better.

And you can play this game. And you don't have to do more than three or four before everybody's got at least one wrong. And the idea is that your intuition just is not reliable. And you've got to run these experiments. And having all this ability to do it online lets you do that.
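One common way to read such an experiment is a two-proportion z-test on the click rates of the two variants. The sketch below is illustrative only: the view and click counts are hypothetical, chosen so that variant a comes out 8.5% better, and the talk does not say which test was actually used.

```python
import math


def two_proportion_z(clicks_a, views_a, clicks_b, views_b):
    """Return (relative lift of A over B, z statistic, two-sided p-value)."""
    p_a = clicks_a / views_a
    p_b = clicks_b / views_b
    # Pooled click rate under the null hypothesis that A and B are the same.
    pooled = (clicks_a + clicks_b) / (views_a + views_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / views_a + 1 / views_b))
    z = (p_a - p_b) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_a / p_b - 1, z, p_value


# HYPOTHETICAL counts: 100,000 views per variant.
lift, z, p_value = two_proportion_z(2170, 100_000, 2000, 100_000)
print(f"lift={lift:.1%}, z={z:.2f}, p={p_value:.4f}")
```

With fewer views per variant the same 8.5% lift would not be distinguishable from noise, which is why the experiment has to be run rather than guessed.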

And then having the data analytics lets you make sense of that in a larger sense. And there's lots of places now where data is available. And we can do more analytics.

There's all sorts of intelligent applications that have been enabled. So speech recognition, machine translation, self-driving cars, automated spam detection, genomic analysis, all these things are coming through new breakthroughs in machine learning, new capabilities for data analytics, and so on. Communications is important. And there's applications like question answering and dealing with e-mail and information overload and lots of opportunities there.

And then in education. So the US spends $120 billion a year. And the US is a quarter of the GDP. And maybe half of the education comes from there and half the citizens pay for themselves. So it's a trillion dollars a year in education.

And currently, electronic learning is only $50 billion. And so I think there's a big gap there where it could be doing a lot more. MIT has 10,000 students, but the MIT OpenCourseWare website has 70 million students. And so what's the impact there? I don't think we have quite measured it yet. The Khan Academy has 35,000 students a day. And the University of Phoenix has half a million students. That's a distance learning university.

Here's the Khan Academy. It has a bunch of videos that are online that are available for free. Students watch them and learn from that, either as a substitute or an augmentation to their own learning. And here's two examples-- one big and one small-- the MIT OpenCourseWare. Thousands of courses available where you can follow a whole course all the way through.

And then we're beginning to see applications as e-readers come online. Things like the Amazon Kindle have this sharing of highlighted passages. So you can highlight a passage and you can choose to share it. And then you can see what other people think is important. And you can learn from that. And so according to Amazon, the most highlighted, the most important passage of all time is Jane Austen's "It is a truth universally acknowledged" quote.

Now, of course, in part she's helped because her book is out of copyright and so it's free to download, compared to the other ones that you actually have to buy. And she can't actually benefit from this feedback, being dead. But for other people like me, being a textbook author, wouldn't it be great if I could get this feedback to say, on this page, this part worked and this part didn't work? And now I can go and fix it. And that's being enabled now.

And then in conclusion, let me just say that there's lots of big markets out there, some for which we've already had a huge economic impact on and others for which there's just money lying on the floor. And if you pick it up, you get to keep some. And like Google does, you get to share most of it with the world to make the world a better place. So go out there and pick it up.

[APPLAUSE]

POGGIO: David.

FERRUCCI: So thank you. Thank you very much. It's really an honor to be able to get a chance to speak to you today. I want to make a couple of points. I don't have those kinds of numbers that you've just seen about the economic impact of Watson. Of course, we're very excited about it. We have a tremendous interest from industry, from lots of customers coming to IBM. We've sort of captured the imagination of the broader audience. And all of this is having very positive potential from a business perspective with IBM.

I want to talk about three main points. During the course of this project we learned a lot. And one of the things we learned about was the opaque perceptions of AI and computers that we found as we were communicating to the general audience about what Watson does and how it does it. And I wanted to share a little bit of that with you.

I also wanted to share a little bit of how we focused on engineering and engineering for science, and what some of the commitments were that we made to succeed with building Watson. And ultimately, you know, the importance of the right interaction can really change the business. And I put interaction in quotes there because it's really about how you interact with computers and how you communicate with the user and with the general audience and how powerful that can be in impacting business and the understanding of how to apply technology.

I got an opportunity to see a couple of the panels before. And I learned two things. I think one was that brains are really sexy. And warning, there's not a single picture, I couldn't resist, not a single picture of a brain in this presentation. But I think the other thing I learned is that in just these 10 minutes I have, this audience can absorb someone speaking really, really quickly. So that's what I'm going to do.

So you know, first off, these bipolar impressions we got. I actually did a radio interview where someone asked me pretty much exactly what was there. Okay, so you put the questions and the answers in, and then when the clue comes up, Watson speaks the answer. Right? And I was like, no. We don't know what the questions or the answers are ahead of time. And the host said, well then how do you do it? I said exactly. Right? So true story.

But you have very different impressions. On the other hand, we would get people who would see some of this and say, oh my god, you know, it's frightening. It's Skynet. When's Watson going to enslave us? Right? So you had these completely opposing things. Even within IBM, people looked at this initially and thought, gee, it's too easy. In fact, some people thought it was Name That Tune.

Other people, on the other end of the spectrum, thought this is too hard. You know, this is pure folly. And you're just going to fall on your face and embarrass us.

So it was interesting to me that you had these bipolar impressions about what was going on. And this was really not just a study of the audience for fun. This really affected to what extent IBM, my company, was willing to make an investment in this, what I considered, very much an AI problem, an AI challenge. And for me, it was irresistible.

What I ended up with is trying to communicate more about what computers find easy and what they find hard. And looking for ways to communicate that. I had a unique opportunity to talk to many, many former Jeopardy contestants who came and sparred against the computer. And I had this challenge.

It was amazing that they were more interested in how the computer did the strategy or how it decided to bet, because this is what they struggled with. They're unconscious of how-- I'm going out on a limb here-- but they're unconscious, often, of how they actually answer the questions. It happens in the blink of an eye for them, what's going on.

And what they are conscious of is how they computed a bet or how they did the math or how they did the strategy. And of course, that was largely the easy part. And I don't want to undersell some of the cool things we did there, but largely the easy part with regard to the technology and the effort. But something they really struggled with.

So one of the things I did was talk about what's easy and what's hard for a computer. And people can look at you. You had a long time now to look at that. Anybody know the answer? No. So I bet you weren't even sure if it was less than or greater than one.

So this is easy for a computer, looking through tables of information-- here's a pseudo SQL query-- is easy for a computer. And this helped communicate that. This is how computers work. And it's a paradigm we're all sort of very used to.

And even people who are not experts in computers use spreadsheets, they use databases, and they know if you put your serial number, you could look up your address. So this is kind of what they expect when they see a computer just getting a question and answering it. They're expecting you're just looking it up in a database.
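The kind of structured lookup people expect can be sketched in a few lines with an in-memory SQLite table. The table name, columns, serial numbers, and addresses below are all hypothetical; the point is only that when the question maps directly onto a table, the answer is a single query.

```python
import sqlite3

# HYPOTHETICAL table of registered devices, keyed by serial number.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE registrations (serial TEXT PRIMARY KEY, address TEXT)")
conn.executemany(
    "INSERT INTO registrations VALUES (?, ?)",
    [("SN-001", "12 Example Street"), ("SN-002", "34 Sample Avenue")],
)


def lookup_address(serial):
    """Return the address registered for a serial number, or None if unknown."""
    row = conn.execute(
        "SELECT address FROM registrations WHERE serial = ?", (serial,)
    ).fetchone()
    return row[0] if row else None


print(lookup_address("SN-001"))  # 12 Example Street
print(lookup_address("SN-999"))  # None
```

Nothing like this exists when the same fact is only stated somewhere in free text, which is the contrast drawn next.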

And what I found was that-- at the speed of light here-- what I found was that what helps communicate the challenge that we have with natural language processing, and how to contrast that with database tables, was this notion of what goes on here. You have this table, you wonder where somebody was born, and you could look it up in structured information.

But what if you had the same question and you had no idea where to go or how to answer it, how to interpret it? And you had to read where was Einstein born and infer that from that text? One day from among the city views of all [INAUDIBLE] Albert Einstein is remembered as Einstein's birthplace. You know, you have some cues there, but now you have to understand there are people, there are places, there are relationships.

Here's another example. X ran this. Now, sure, if we knew that question was answered by that table, we could look it up. But instead, what if we had: if leadership is an art, then surely Jack Welch has proved himself a master painter during his tenure at GE. We have a slightly different problem here. So with some confidence, we may deduce that Jack Welch was a painter at GE. But that, of course, is not what was intended by the question, nor really the answer.

And this is how people sort of scratch their heads and go, oh, I'm starting to understand really what the challenge is. And I think what was neat about Jeopardy from a scientific perspective was it helped us tackle some key points-- the broad open domain. So you can't anticipate what the questions were going to be about, or exactly how they were going to be phrased. Complex language.

And you have to have a very, very high precision. But moreover, the computer had to know what it knew in the sense that it had to know what was right. Because every time you say you know an answer, and you get in there and you buzz first, if you get that question wrong, you lose the dollar value associated with that question. So there was risk in answering with the wrong answer. So it had to compute, essentially, a probability, an accurate probability, as accurately as we can get, that you got the answer correctly. You got it correct. So you could take that risk and buzz in. And you have to do this very, very quickly.
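The risk described here can be written as a simple expected-value rule. This is a deliberately simplified sketch, not Watson's actual buzz strategy: it assumes a correct answer wins the clue's dollar value, a wrong one loses the same amount, and nothing else about the game state matters.

```python
def expected_gain(p_correct: float, clue_value: float) -> float:
    """Expected dollars from buzzing: win clue_value if right, lose it if wrong."""
    return p_correct * clue_value - (1.0 - p_correct) * clue_value


def should_buzz(p_correct: float, clue_value: float, min_gain: float = 0.0) -> bool:
    """Buzz only when the expected gain exceeds min_gain (an assumed knob)."""
    return expected_gain(p_correct, clue_value) > min_gain


print(should_buzz(0.9, 1000))  # True
print(should_buzz(0.4, 1000))  # False
```

Under these assumptions the break-even confidence is 50%, and the rule is only as good as the probability fed into it, which is why the estimate had to be accurate.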

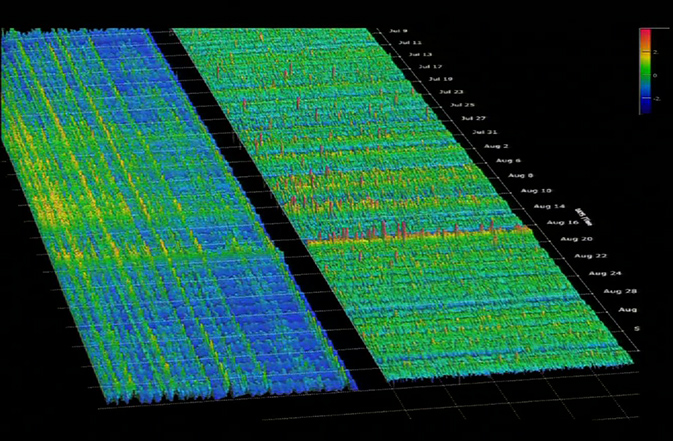

And this was really the foundation of what the engineering feat was. And it involved starting with really, really crisp metrics. One of the most important metrics we had was represented by this winner's cloud. And I can't tell you how important having a crisp metric was for both the science and the engineering of the problem.

So we didn't start off with this general notion we're going to build this big AI. It's going to be really smart. And there's going to be Maxwell equations for doing question answering. We sat there, and we were very, very practical.

In fact, someone came to me and said, you know, I'm a cognitive psychologist. And I really, really want to be in your project. And as much as I love to philosophize about cognition, I had to get really practical really quickly. Because I had to meet with the senior VP quarterly who said to me, Dave, you're mucking with the IBM brand. Are we going to win?

So this was very much an engineering feat. That baseline was where we started with a QA system. And we had to drive that confidence curve up so it was basically splitting right through that or cutting right across that winner's cloud. Because that's where winning players were performing. So if they were confident enough and fast enough to get a chance to answer 50% of the questions on a board, they were doing between 85% and 95% precision.
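One way to compute a point on such a curve is to sort the system's answers by confidence, keep only the most confident fraction, and measure precision on those. The sketch below makes that assumption about how the curve is built, and the sample results are made up.

```python
def precision_at_answered(results, fraction):
    """Precision over the most confident `fraction` of questions.

    `results` is a list of (confidence, is_correct) pairs, one per question.
    """
    ranked = sorted(results, key=lambda r: r[0], reverse=True)
    n = max(1, round(len(ranked) * fraction))
    answered = ranked[:n]
    return sum(1 for _, correct in answered if correct) / n


# HYPOTHETICAL results for ten questions.
sample = [
    (0.95, True), (0.90, True), (0.85, True), (0.80, False), (0.70, True),
    (0.60, False), (0.50, True), (0.40, False), (0.30, False), (0.20, False),
]
print(precision_at_answered(sample, 0.5))  # 0.8
print(precision_at_answered(sample, 1.0))  # 0.5
```

A well-calibrated system shows precision rising as the fraction answered shrinks; the target region was 85% to 95% precision at 50% answered.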

I'm going to skip some of these slides because I don't have the time for it. But I want to get to a few guiding principles. And this is kind of where a lot of the AI theory sat for me. Right?

So we made a couple of key commitments. Large crafted semantic models will fail. Too slow, too narrow, too brittle, too biased. So we need to acquire and analyze information from as-is sources. So we consumed things like Wikipedia and many other encyclopedias, dictionaries, thesauri, entire plays, books from Project Gutenberg, the Bible, Shakespeare, and you name it.

We had the expectation that there was no one algorithm that was going to be able to do question answering, but that intelligence would come from many, many diverse algorithms. And that each were going to react to different weaknesses in the system based on doing error analysis and repeating and improving the core NLP that was in there to attack each problem we would start to see and generalize from.

And the relative impact of an integrated metric must be automatically learned. In other words, there was no presumption that if you got your parser from 95% to 98%, or if you got your information extraction from 75% F measure to 80%, that this was necessarily going to impact the end to end metric of the question answering system.

So instead, what we had to do was do this empirically. Run many, many, many experiments to understand how these various algorithms and features interacted to affect the end to end metric.

Another assumption was that while we were going to put many, many algorithms in there, and let different hypotheses compute throughout the system, that in order to deliver the latency, which we needed, which was to answer a question come up with an answer and an accurate probability in three seconds, we are going to have to scale out. We were going to have to have an algorithm that was embarrassingly parallel, which is exactly what we did. And this is a high level picture of Watson's brain, if you will, even though I promised I wouldn't show a picture of a brain. I meant a human brain.

So it takes questions and categories. It comes up with many different interpretations of that question based on doing deep language parsing and semantic analysis. It generates many searches and, from those, hundreds of possible answers. Each of those becomes the root of a tree where it would collect evidence to support or refute those answers.

And from there, it would apply many different scorers, hundreds of different scorers that would analyze that content from different directions. It would weigh those various features based on machine learning models. It would have an end result of a ranked list of answers with all of them having a probability that they were correct.
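The last step, turning many feature scores into a ranked list with probabilities, can be illustrated with a logistic model. This is a stand-in, not a description of Watson's models: the feature names, weights, bias, and candidate scores are all hypothetical, and in practice the weights would be learned from training data.

```python
import math

# HYPOTHETICAL learned weights for three illustrative features.
WEIGHTS = {"passage_match": 2.0, "type_match": 1.5, "location_match": 1.0}
BIAS = -2.5


def confidence(features):
    """Logistic combination of weighted feature scores into a probability."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))


def rank(candidates):
    """Return (answer, confidence) pairs, most confident first."""
    scored = [(answer, confidence(f)) for answer, f in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# HYPOTHETICAL feature scores for two candidate answers.
candidates = {
    "South Bend": {"passage_match": 0.9, "type_match": 1.0, "location_match": 0.0},
    "St. Paul": {"passage_match": 0.8, "type_match": 1.0, "location_match": 1.0},
}
for answer, p in rank(candidates):
    print(f"{answer}: {p:.2f}")
```

Because each feature's contribution is just weight times score, the same model also makes it possible to see which kinds of evidence pushed an answer up or down.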

And if that probability was over the threshold, then Watson would want to buzz in and answer. And I'm going to go over it just a little bit. We populated that architecture with hundreds of algorithms. We had sort of goal-oriented metrics. We did regular integration testing. We needed the hardware necessary to support that engineering, which gave us those results we needed to push the science.

It took two hours to answer a single question on a single CPU. We ended up scaling that out over 3,000 cores to be able to answer fast enough.
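A back-of-the-envelope check shows why that many cores fits the three-second target mentioned earlier. The sketch assumes perfect, overhead-free parallel speedup, which real systems do not achieve, and reads the core count as about 3,000.

```python
import math


def ideal_parallel_seconds(serial_seconds: float, cores: int) -> float:
    """Best-case latency if the work splits evenly with no overhead."""
    return serial_seconds / cores


def min_cores_for(serial_seconds: float, target_seconds: float) -> int:
    """Fewest cores that could meet the target under the same ideal assumption."""
    return math.ceil(serial_seconds / target_seconds)


serial = 2 * 60 * 60  # two hours on a single CPU, in seconds
print(ideal_parallel_seconds(serial, 3000))  # 2.4
print(min_cores_for(serial, 3.0))            # 2400
```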

In the end, we were able to drive that performance from that baseline all the way up to where it was cutting across that winner's cloud. And this was a big test on blind data-- 200 games' worth of blind data. Does it guarantee a win? Absolutely not, but you could see you're competitive there with the grand champions across that curve.

And the last thing I want to say is-- actually, this is not the last thing. I have two more things I want to say. One is that the integrated metric, there was a system level end to end metric that we focused maniacally on with the presumption that winning on Jeopardy was something that was going to advance the science of NLP. We still had to focus on that end to end system metric.

When we were done with that, we went back and looked at some of our core component NLP. We looked at how it evolved with regard to independent component level metrics, the kinds of things you typically publish on. And we're going to write all these papers. But we were leaders in all these areas from parsing to disambiguation to relationship extraction to passage matching and passage scoring. In every one of them we were leaders based on those metrics. So that was a very, very satisfying thing to discover. If you choose that problem right and you have metrics and you push that, you can get there. So it was very satisfying.

The last thing I want to talk about is evidence profiles, and then I'll stop. In Watson, we had many, many features-- many, many algorithms producing many, many features. Just to get a handle on what was going on, we had to build tools to understand how those algorithms were interacting and impacting the correctness of an answer.

Eventually, we got to what we called an evidence profile, where out of hundreds of features, you could group them into semantic categories. And then based on the machine learning model weights, you would be able to see how they contributed to a right or wrong answer. This allowed us to do error analysis, to understand where the system was failing. This was a very, very powerful capability ultimately.

And what to do to solve problems. There's a number of things going on here. But I want to point out one of the issues in getting this question wrong here. It says, you'll find Bethel College and Seminary in this holy Minnesota city. Actually, there's a Bethel College and Seminary in St. Paul, Minnesota and in South Bend, Indiana. We didn't weigh location information high enough.

But there was also another path to answering this, which is through the pun, the association between holy and St. Paul, the right answer. Actually, independently, one person came up with some ideas on how to detect puns in language, and was able to integrate that into the system, train it, and that was having impact on the end to end system. And we were getting more pun questions right. We did this very, very rapidly because of that architecture we depended on.
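An evidence profile of the kind described can be sketched as a grouping of weighted feature contributions by semantic category. Everything concrete below is hypothetical: the feature names, the categories, the weights, and the scores are invented to show the shape of the computation, with a deliberately low location weight to mirror the failure discussed.

```python
from collections import defaultdict

# HYPOTHETICAL mapping of features to semantic categories, and model weights.
CATEGORIES = {
    "state_match": "location",
    "city_gazetteer": "location",
    "pun_score": "wordplay",
    "passage_overlap": "passage",
}
MODEL_WEIGHTS = {
    "state_match": 0.2,   # location weighted low, as in the error described
    "city_gazetteer": 0.1,
    "pun_score": 1.5,
    "passage_overlap": 1.0,
}


def evidence_profile(feature_values):
    """Sum weight * score per category for one candidate answer."""
    profile = defaultdict(float)
    for name, value in feature_values.items():
        profile[CATEGORIES[name]] += MODEL_WEIGHTS[name] * value
    return dict(profile)


# HYPOTHETICAL scores for the candidate "St. Paul".
st_paul = {"state_match": 1.0, "city_gazetteer": 1.0,
           "pun_score": 0.8, "passage_overlap": 0.5}
print(evidence_profile(st_paul))
```

Comparing profiles for the right and wrong candidates side by side is what makes it visible that, for instance, location evidence is contributing too little.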

My last point: the notion of an evidence profile and the communication of confidence ended up becoming very, very important. When you watch the game, where all you saw was the computer answering when it knew an answer and getting it right most often, you thought, gee, it must be just looking it up in a database. What's really going on here?

When you saw just a glimmer of what was happening inside the machine, when you saw those confidence values and that histogram, the general audience starts to scratch their head and say, wait, there's something else going on here. You mean a computer can maybe be wrong? You mean it's not sure. You mean it's thinking. I mean we think of it as computing probabilities based on analyzing evidence.

But this got people to think, wow, this is really interesting. And this inspired both the general public to think about what is AI really all about. And it also inspired our customers, to think, you know what, this notion of dealing with uncertainty and dealing with language effectively helps me address a problem I didn't think of that way before. And we got lots of interest, both from the general public and from our customers. Thank you.

[APPLAUSE]

POGGIO: Andrew and Microsoft.

BLAKE: Thanks very much for inviting me, Tommy. It's been an amazing workshop the last two days. I guess one thing you didn't mention from my CV is that I studied here for a year. And I didn't get a degree at the end. So I guess that makes me an MIT dropout, which I guess must be something to be proud of.

So I just wanted to talk a little bit about technologies for natural interaction and some of the progress that I think is happening there. You know there are many contexts in which we want to do natural interaction. Some [INAUDIBLE] it is.

So the one that is sort of the source of my talk, if you like, is gaming. And that's kind of obvious. But people are thinking about all kinds of other things, like in medicine, for example, bringing some kind of intelligence, albeit superficial, to bear in analyzing what's going on in the human body.

And here's another investigation of natural interaction. This time in the operating theater, where it's not really practical for a surgeon to get up and start poking at a keyboard and manipulating a mouse. If they do that, they'll have to go and scrub up again afterwards. So it would be great to have some more flexible non-contact kind of interaction with the environment.

Cars, well, Amnon is going to talk all about cars. More and more the high end ones, at any rate, at the moment are coming up with head up displays. And so there's a lot of opportunity there for a more natural interaction with the information that's displayed on the screen, for example, pointing navigation information, which is maybe projected onto the world.

Here's a rather beautiful robot that the Anybots Corporation has built to be your proxy at a meeting. It will turn up to the meeting and people will talk to it. And I don't know if that's going to catch on. It's a fascinating idea.

What else? So of course there's Minority Report. I don't know if we really believe in that, that that's what we actually want to have as the interface for interacting with information. But again, a fascinating idea.

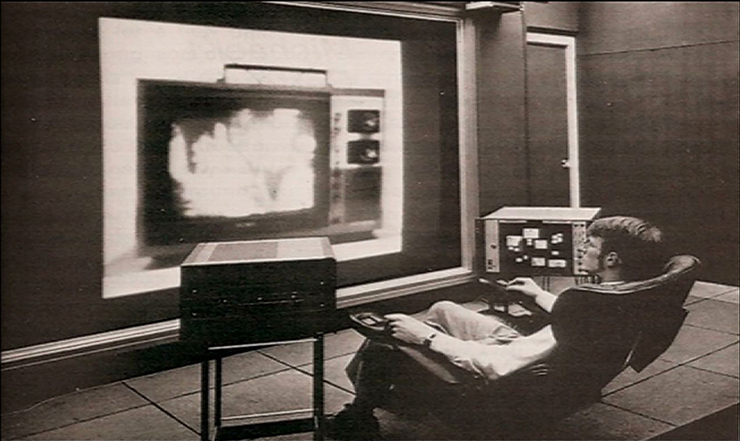

So you know the technologies for interaction are coming on. We've had a history of necessarily clunky things getting more and more flexible. Now touch and multi-touch and then the new generation that I want to talk about, you could call it no touch or action at a distance.

So the Kinect camera that Tommy referred to came out earlier this year. And yes, that's what my team has been working on putting machine learning into it. And here is the team. I'm going to tell you just a little bit first about what we did. Oh, but before that, in case you didn't play with it yourselves, here are the kind of things you can do. You play games with your buddies. I'm not a gamer myself by any stretch of the imagination, but I must admit I have found some of these Kinect games are quite compelling just because they're social and they're easy to interact with. They're not too kind of head banging.

So what do we have to do to make the Kinect camera work? Well it's sensing the pose of the human body. And so the task is to receive the signal that the body generates and reduce that to a set of joints, rather like motion capture systems that they use in movies a lot. You know, in Titanic when the guys all drown in the sea. Actually what they do is they put the guys on a slide and they capture their motion as they slide into a pool. And then transfer that into the scene.

So what we needed was to build something like that, but without having to attach all the instrumentation to the body of the actor, because, of course, that doesn't make sense in the interaction setting. So we take the body in in the form of data. And what we have to put out is the skeleton inferred on the right there. And represented mathematically in terms of positions and angles and so on.

This is a hard problem because the human body is amazingly pliable and variable. Even your body can do many things. And then, of course, there are many different kinds of bodies varying in size with unfortunate accidents of appearance and in very complex settings and so on. So we needed to make significant inroads into these complexities.

And it's a kind of commonplace of AI, one of the lessons that AI has established, that trying to program rules explicitly to handle situations of this complexity doesn't really work. So we realized we had to do this somehow by machine learning. What we thought we would start with was principles based on the work of Dariu Gavrila. He did this work in the Daimler Benz research lab. He also was anticipating bringing vision to cars. And he had a fascinating system of hierarchical templates of the human form capturing the position of the human form in a cluttered scene.

We thought that was not quite going to be enough because of the need to capture all of the articulations of the limbs, which you don't really have to detect pedestrians. So we were thinking in terms of something involving templates for parts of the body. But then you'd have something to do afterwards to harmonize those templates if they had all detected their parts independently.

Meanwhile, Jamie Shotton on my team had been working on what I guess we can call an AI problem. At least there are an awful lot of people working on this at the moment, which is, let's get back to object recognition now with what we've learned in the last 15 or 20 years about low level vision and see if we do any better this time around.

And well, we don't have human level object recognition yet, but amazing amounts of progress have been made recognizing up to 100 objects at once in a scene. So Jamie wanted to use this kind of technology to try to solve the problem of detecting the human body in depth maps because the Kinect sensor is a 3D sensor.

And my contribution at this point was quite crucial. I told him that it would never work. And he, nonetheless, went on playing with it. And I said, well, that's fine. Try it. Why not? But we better pursue both tracks because yours is never going to work.

And then after a month, his was working surprisingly well. I mean, it's still not working properly. But I said, well, I think we should still hedge our bets and do the two approaches. But fine, you play with that if you like. Your Friday evening project, except every day was Friday.

And after a couple of months, it was really working amazingly well to an extent that I never thought it would. And so we kind of gave up on the harmonizing the templates idea and went with the recognition.

So this is what we ended up doing. The depth map that you receive from the sensor is on the left here with close stuff coded as bright and far stuff coded as dark. And we ended up with an output which is like a kind of noisy clown suit. Actually, the input I'll show you soon in at least the training data is a kind of perfect clown suit. But the clown suit here is colored according to the body parts.

And then as the guy moves, you see the clown suit is moving pretty much with the guy. And this is what convinced me finally, okay, the clown suit doesn't fall off when you move around. So the labels really are sticking to the body.

Here we have many different poses, all labeled with the same clown suit. Of course, there are 31 parts of the body labeled here and various things happen, which I don't have time to tell you about to generate hypotheses, which eventually boil down to tracking a number of joints in the body. So that was the story vastly accelerated. And that kind of capability takes its place amongst I think a number of capabilities that are coming out that will change the nature of interaction with machines.
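
The step from per-pixel body part labels to joints can be pictured with a small sketch. This is not the Kinect pipeline, whose details are not given in the talk. It assumes a hypothetical `labels` grid (the "clown suit", with -1 for background) alongside a `depth` grid, and it proposes one joint per part simply by averaging the labeled pixels.

```python
def joint_proposals(depth, labels):
    """Return {part: (mean_row, mean_col, mean_depth)} for each visible part.

    depth  : list of rows of depth values (hypothetical units)
    labels : list of rows of ints; -1 is background, other values are
             body part labels. Both grids have the same shape.
    """
    sums = {}
    for r, row in enumerate(labels):
        for c, part in enumerate(row):
            if part < 0:
                continue  # background pixel, no body part here
            acc = sums.setdefault(part, [0.0, 0.0, 0.0, 0])
            acc[0] += r
            acc[1] += c
            acc[2] += depth[r][c]
            acc[3] += 1
    return {p: (a[0] / a[3], a[1] / a[3], a[2] / a[3]) for p, a in sums.items()}


# Tiny 4x4 frame: part 0 in the top-left block, part 1 in the bottom-right.
labels = [
    [0, 0, -1, -1],
    [0, 0, -1, -1],
    [-1, -1, 1, 1],
    [-1, -1, 1, 1],
]
depth = [
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 2.0, 2.0, 2.0],
    [2.0, 2.0, 1.0, 1.0],
    [2.0, 2.0, 1.0, 1.0],
]
joints = joint_proposals(depth, labels)
```

A plain average is the simplest possible choice and is sensitive to mislabeled pixels. A noisy clown suit would call for something more robust, which is presumably part of the "various things" skipped over in the talk.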

And of course, our aim is to make the computers disappear in our interactions with machines that we find around the world. So we don't want to think of our cars as computers or assemblies of computers. We want to think of them as cars. And I think this is true for all of the machines with embedded computing that we use.

So of course, speech technology has advanced incredibly over the last 15 years. And I think that is one of the huge areas of progress. I would like to disagree with Patrick I guess what you were saying a couple of days ago. You know this is a field which I think has been transformed out of all recognition. And you know, hey, let's call it artificial intelligence. I don't know if it exactly is or isn't, but let's claim it. And that has been a huge piece of progress.

Eye trackers, incidentally, are something which all of a sudden seem to have come of age. You can get them embedded in computers now. Other kinds of sensing, I've just got to show you one or two things. A couple of things from MIT. This is something I saw from Roz Picard from the Media Lab. It's amazing that you can sit in front of your laptop now, and without anything special attached to the laptop, it will tell you how fast your heart is beating. That is truly astonishing when you first see that demo.

And another thing which migrated from one Cambridge to the other, Rana el Kaliouby, who was in Cambridge University and now is here in the Media Lab, has made amazing progress in the interpretation of emotions. For a long time, computer vision researchers were grinding on about the six basic emotions and recognizing those. It turns out not to be very difficult.

Once you get into kind of complex emotions, then the emotions have trajectories in time. And everything gets more complex. And Rana's work is so beautiful because it exploits autism databases that were constructed in Cambridge by Simon Baron-Cohen for the diagnosis of autism and turns them into oracles for what emotions are as reflected in the face.

Something else we're doing in Cambridge is trying to make a commonplace out of the business of gesture recognition. The guy is controlling a DVD player, by the way, using his hands. And of course, you could build this into the Xbox with Kinect.

I'll just advance it so it stops making that noise. What's special about this is that we never actually built any kind of a recognizer for the gestures. All that happened was we recorded a DVD with examples of different gestures on it. Fed that into a machine learning algorithm. Out of that dropped out a recognizing module. So you could imagine a designer, somebody who doesn't want to think about computer science, building an interface to their machine using this capability.

Well okay, I'm over my limit. So I'm just going to skip to my-- how about this slide? So just one other thing I wanted to mention is just the whole idea that perception, which is what we're trying to build into these machines that give us flexible experiences with devices, is enhanced or relies on having probabilistic reasoning built into the machines.

And a kind of lingua franca for probabilistic reasoning, much of this theory was developed here at MIT and elsewhere, is the idea of the probabilistic graphical model. Here, for example, is a graphical model that encodes asthma, the different causes and effects and possible intermediate concepts. Here is a probabilistic graphical model that encodes game playing and the way in which your skill will affect your performance and how that plays out with multiple teams.
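
The idea that hidden skill drives observed game outcomes can be illustrated with a very small discrete model. This is not the model on the slide and it does not use Infer.NET. It is a sketch under stated assumptions: each player's skill is either low or high with equal prior probability, and the win probabilities in `p_a_wins` are invented for illustration. Inference is done by brute-force enumeration of the joint distribution.

```python
from itertools import product

SKILLS = ("low", "high")
PRIOR = {"low": 0.5, "high": 0.5}  # assumed prior over each player's skill


def p_a_wins(skill_a, skill_b):
    """Assumed chance that player A beats player B given both skills."""
    if skill_a == skill_b:
        return 0.5
    return 0.8 if skill_a == "high" else 0.2


def posterior_skill_a(a_won):
    """P(skill of A | game outcome), summing over B's unknown skill."""
    weights = {s: 0.0 for s in SKILLS}
    for sa, sb in product(SKILLS, repeat=2):
        likelihood = p_a_wins(sa, sb) if a_won else 1.0 - p_a_wins(sa, sb)
        weights[sa] += PRIOR[sa] * PRIOR[sb] * likelihood
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items()}


post = posterior_skill_a(a_won=True)
```

Observing one win moves the belief that A is highly skilled from 0.5 to 0.65 under these numbers. Enumeration only works for tiny models, which is one reason a general-purpose inference package is attractive.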

And just to finish, I just wanted to say that one of the things we're working on in the lab is trying to embody this in a package which could be handed out to developers. And developers are trying it as we speak, it's on the web for people to download and play with. We call it Infer.NET. And it's encapsulating all of that graphical model language, if you like, in a way which we hope ultimately will become a commonplace in software development. We're not really there yet.

So at the moment, it's still a good idea if you have a PhD in machine learning to program with this stuff. But we hope that in the future this will become commonplace.

So just to conclude, a lot is happening I think which gives us reason for hope about having more natural interactions with machines in the future, new forms of sensing and sensory processing, and that crucial ability to program with probabilities. Thanks.

[APPLAUSE]

POGGIO: Amnon.

SHASHUA: So the title is a bit presumptuous. Given the gap between our engineering successes and the wide spectrum of human perception abilities, the gap is staggering. So the title itself doesn't have any meaning. It's not well-defined unless we narrow the domain. And this is the secondary title: I'm going to talk about the domain of making the car see.

And I'll be modest. I'll be talking about technologies that I think can be implemented within five years, 5 to 10 years. Where this field of making cars see has the potential to reach within five years and what are those technologies that we'll need to develop.

So just a slide of recap. Today's technologies centered around a camera looking forward front facing doing about five different functionalities, lane departure warning or lane analysis, detecting cars, performing forward collision warning and braking on cars, detecting pedestrians, and doing the same thing on pedestrians, detecting and reading traffic signs about 60 to 80 different traffic signs. And also doing certain lighting functions. At night, be able to automatically determine whether the high beam should be on or off.

And where this is going is towards autonomous driving within the next five years. In terms of the computer vision technologies, the three main areas are object detection, cars, people, traffic signs. There's a lot of motion estimation going on both understanding the motion of the host car, the car is moving, and understanding what other cars are doing, kind of a friend and foe determination. And roadway understanding, which is like a light scene understanding.

So the next slide are these clips that are running in a loop. So you can look at them in a relaxed mode. So the one on the top is detecting cars and pedestrians. What you see here is detecting lanes and road shoulders and all what is necessary in road analysis.

Here you see some motion understanding. Whenever there is a lateral motion, the dots are colored in red. And all the static points are colored in green. So a necessary ingredient here is to detect the ego-motion, the motion of the car, in order to know what is moving and what is not moving.
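
One way to picture why ego-motion is needed: a static point should move in the image exactly as the car's own motion predicts, so whatever is left over signals an independently moving object. The sketch below assumes the predicted image motion of each point is already available from some ego-motion estimate. The function name, the threshold, and the numbers are hypothetical and not taken from the system described.

```python
import math


def classify_points(observed_flow, predicted_flow, threshold=1.0):
    """Label each tracked point 'moving' or 'static'.

    observed_flow  : list of (dx, dy) image motions measured per point
    predicted_flow : list of (dx, dy) motions expected from ego-motion alone
    threshold      : residual (in pixels) above which a point counts as moving
    """
    labels = []
    for (ox, oy), (px, py) in zip(observed_flow, predicted_flow):
        residual = math.hypot(ox - px, oy - py)
        labels.append("moving" if residual > threshold else "static")
    return labels


# Two points that follow the predicted motion and one that does not.
observed = [(2.0, 0.1), (2.1, -0.1), (6.0, 0.0)]
predicted = [(2.0, 0.0), (2.0, 0.0), (2.0, 0.0)]
labels = classify_points(observed, predicted)
```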

And here is a friend or foe estimation. The red rectangle is the car in a possible collision course. And the white color is the cars in the neighboring lanes. The system needs to know how many lanes are in the field of view and what the cars are doing, the average speed of cars, whether there is an escape lane in case of an accident and so forth.

In terms of what remains hard to do in this context, one is the scalability of object recognition. The engineering success is really limited to a small number of object classes. Meaning, if the task is, given an object class, go and detect it reliably in the image, this is possible. If the task is, you have 1,000 object classes, go and find instances of these object classes in the image reliably, this is still an open problem.

And the second hard thing is situation awareness or scene understanding. In the context of automotive, it is, what is happening in the scene? It's not only where are the objects, but what the objects are doing with relation to one another.

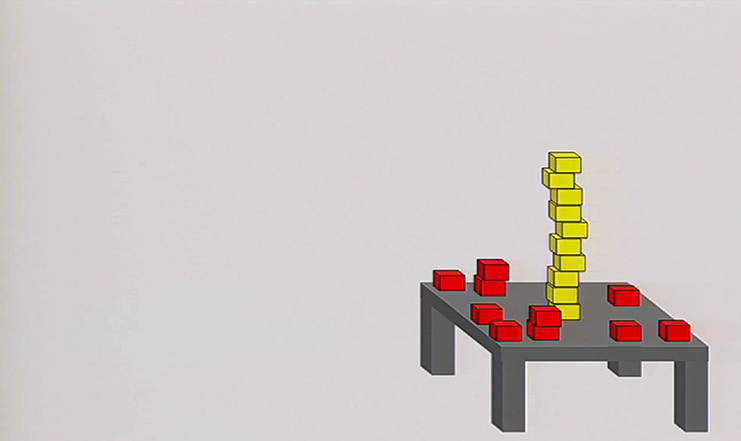

So in terms of scalability, we can conjecture given the engineering successes that we have seen so far that O of one classes can be done today. Now this is a controversial conjecture, but bear with me. And let's agree that this is possible.

And if we assume a linear scalability, it means if we need x computational resources for one object class, then we will need two x resources for two object classes. This linear scalability will not work when we have thousands of object classes. And thousands of object classes is what human perception is all about. We effortlessly can find instances of thousands of object classes.

So the linear scalability means that we'll need to replicate resources. And this is definitely not something that is workable, even in the context of very large computing power.

In our automotive space, there are areas in which you find a need for multiple objects. In traffic signs, you have a sample of the different kinds of traffic signs. In addition, all the stop signs, yield signs, and traffic lights will be detected. There are guardrails, concrete barriers, road edges, poles, bridges, and the list goes on. And there are about 100 different classes that are being identified today.

And if you want to go into autonomous driving, it's at least 1,000 different object classes that you need to know where they are in the image in order to properly do semi-autonomous driving. The clip that you're seeing is a system detecting all the relevant objects and also understanding what those objects are.

This is an example of a clip where it's a challenge for any computer vision system. So you have a camera moving in space, both outdoor and indoor. And for any object that it sees in its path there is a label of what that object is or whether it's an obstacle without a label. This is something that I'm gearing towards, the ability to store thousands of object classes and find them effortlessly in real time on a portable system. This is something that I think can be done within the next five years.

In terms of situational awareness, what are those scene understanding elements that are needed? For example, find the lane boundaries when there are no lanes. Simply knowing what other cars are doing, where other cars are moving. The position of static objects like parked cars also gives you a clue. What are the boundaries? What are the lane boundaries, even if there are no painted lanes?

Travel planning. How many lanes? Traffic dynamics in neighboring lanes. Whether there is an escape lane or not. Where are the objects of interest located? For example, is a pedestrian located on the road? Is the pedestrian located on the sidewalk?

Where is the face or the posture of the pedestrian located? Is it located toward the collision course? Is it located in another direction? What do the pedestrians do? What do other cars do? This is also something that is necessary to understand when you go into autonomous driving.

In terms of new research directions, again, I'm limiting it to this particular space. So one of them is the scalability. As I mentioned, the linear scalability is not workable. One needs to develop a principled way of reaching sub-linear logarithmic growth of resources as a function of the number of classes. Which means that when you go and do classification, it's not a one versus all classification, which is the popular way of dealing with multiclass set today. But it's a subset of classes versus subset of classes.

And the challenge here is that if you have n classes, there are 2 to the n subsets. So the question is how do you, in a principled way, avoid this curse of combinatorics and do something that is theoretically understood and works? It's not that there is no research in this direction. Here at MIT, there's a nice paper by Bill Freeman and Antonio Torralba, but it's still a drop in the water. It's a drop in the ocean. This is an area that is understudied and needs to be pushed forward significantly in order to make room for the issue of scalability.
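
The gap between linear and logarithmic growth can be made concrete by counting classifier evaluations. The sketch below is a toy under strong assumptions: classes are the integers 0 to n-1, an input is a number, and each binary "subset versus subset" test is a simple threshold. The ordering of the classes hands us the right subsets for free, which is exactly the hard part in real recognition, where good subsets have to be found among the 2 to the n candidates.

```python
def one_vs_all(x, num_classes):
    """Score every class separately: one evaluation per class."""
    evaluations = 0
    best, best_score = None, float("-inf")
    for k in range(num_classes):
        evaluations += 1
        score = -abs(x - k)  # toy per-class scorer: closeness to class k
        if score > best_score:
            best, best_score = k, score
    return best, evaluations


def subset_vs_subset(x, num_classes):
    """Repeatedly split the remaining candidate classes into two subsets."""
    lo, hi = 0, num_classes  # candidates are classes lo .. hi-1
    evaluations = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        evaluations += 1
        # toy binary test: does x belong with classes [lo, mid) or [mid, hi)?
        if x < mid - 0.5:
            hi = mid
        else:
            lo = mid
    return lo, evaluations


flat_class, flat_cost = one_vs_all(700.2, 1024)
tree_class, tree_cost = subset_vs_subset(700.2, 1024)
```

With 1,024 classes both routes pick the same class, but the flat scheme spends 1,024 evaluations and the tree spends 10.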

Processing video. By and large our community is single-frame centric. If you look at all our data sets for machine learning in computer vision, they are all single frame. In the work that has been done, or that I've been doing, at Mobileye, the fact that we process video is critical. All the engineering success comes from the fact that you're not looking at a single frame but looking at video.

I have here a second. No, I don't have. So I wanted to tell a story, but I'll put that aside.

Microprocessors. Most people are not aware of the fact that the hardware that you have will also determine the kinds of algorithms that you can run. Most applications in vision require a portable system. It's not that you'll be running the algorithm on the cloud like Google can do, but it has to be done on a microprocessor, low power consumption because it is portable. And there is a hardware consideration.

The array accelerators that exist today, these are DSPs, GPUs, FPGAs, were not designed for computer vision. They were designed for signal processing and for MPEG, JPEG compression, decompression. I'll put here a slide of the spectrum of a microprocessor. There are the RISC machine CPUs that you're all aware of on one end of the spectrum, which allow you to run any branch and bound general code.

And on the other side, there is the vector accelerators, the DSPs, FPGA, GPUs that you may have heard. Maybe you don't know exactly what they do, but those are vector accelerators. Computer vision requires both. And requires also the spectrum in between. Early visual processing like edge detection and interest point location can be done using vector accelerators that exist today.

But once you identify certain areas of interest in the image, the type of algorithms that you run are very complex algorithms. These are not algorithms that are amenable to convolution type of processing. So a vision co-processor is not a CPU and not an existing vector accelerator.

I have here a list of those existing accelerators, each of them with their pros and cons. And the point is that none of them is designed for computer vision. At Mobileye, one of the things that we have done is we designed our own chip and our own vision co-processors that are targeting the types of complex algorithms that are being done in computer vision, especially the 2D type of processing compared to 1D type of processing that is done in signal processing architectures.

But still, there's a long way to go to build hardware that is efficient, scalable, low cost, low power consumption that will enable to do very complex computer vision algorithms. Okay. So I'll stop here. Thank you.

[APPLAUSE]

POGGIO: Demis Hassabis.

HASSABIS: So I'm going to talk about the rich connection between games and artificial intelligence. So in fact, games and AI have a very long history together. In fact, one could argue that this mutual relationship dates back to before computers even existed.

So here, for example, is a picture of the Mechanical Turk, the original one before Amazon rebuilt him. And he's a chess automaton that was built in 1770 and was destroyed by a fire in 1854. But before its destruction, the story goes that it beat Napoleon in a game of chess. And Napoleon was apparently so disgusted with losing the game that he ran his sword through the machine. But fortunately, the human chess master dwarf that was hiding inside apparently escaped unscathed. So it's a happy ending.

Now, in fact, beating a human champion at chess has been a dream of AI since the dawn of the field. And Alan Turing, for example, famously composed a chess program in the 1950s which managed to beat a few weak amateurs when he proceeded to run it by hand on pen and paper.

This dream, of course, was finally realized in 1997 when IBM's Deep Blue defeated Garry Kasparov in a titanic six-game match that captivated the world. Of course, that was not the end of the story. And the field moved on to tougher challenges, such as Go and Poker. But even those games have become relatively tractable in recent years.

So for example, Go, which is thought to be a much tougher problem than chess for a machine to play well mainly for two reasons. One is that it has a bigger branching factor. So the average number of moves in any one position is larger in Go than it is in chess. And probably the more difficult problem is building a good evaluation function for Go is very difficult because of its esoteric nature.

However, a French group from Inria built a program called MoGo that used state of the art Monte Carlo tree search simulation to overcome these problems. And recently became the first program to beat a professional at Go on a 9 by 9 board with no handicap. Poker is also tough because it is an imperfect information game with hidden states in terms of the opponents' cards. And again, recently here Polaris at the University of Alberta beat some top professionals at Heads Up limit poker in 2008.
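
The appeal of Monte Carlo methods when no good evaluation function exists can be shown on a much smaller game. The sketch below is flat Monte Carlo move evaluation, a simpler relative of the tree search mentioned above, and it is not MoGo's algorithm. The game is an assumed stand-in: a pile of stones, each player takes one to three, and whoever takes the last stone wins. Each candidate move is scored by the fraction of uniformly random playouts that end in a win.

```python
import random


def random_playout(stones, rng):
    """Play random moves to the end; True if the player to move first wins."""
    first_player_to_move = True
    while True:
        stones -= rng.randint(1, min(3, stones))
        if stones == 0:
            return first_player_to_move  # whoever just moved took the last stone
        first_player_to_move = not first_player_to_move


def best_move(stones, playouts=2000, seed=0):
    """Estimate each move's win rate from random playouts and pick the best."""
    rng = random.Random(seed)
    estimates = {}
    for take in range(1, min(3, stones) + 1):
        remaining = stones - take
        if remaining == 0:
            estimates[take] = 1.0  # taking the last stone wins outright
            continue
        # After our move the opponent moves first, so we win when they lose.
        wins = sum(not random_playout(remaining, rng) for _ in range(playouts))
        estimates[take] = wins / playouts
    return max(estimates, key=estimates.get), estimates


move, estimates = best_move(5)
```

From a pile of five the playouts favor taking one stone, which leaves the opponent with four, a losing position under perfect play. No hand-written evaluation function was needed, only the rules of the game.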

So there's been some tremendous successes in terms of building AI for traditional abstract games. But probably what's less well-known is the role that AI has played in another sort of game, namely video games. So the video games industry is a big business, not as big as Google, but it's a global business worth more than $10 billion a year in revenues.

And AI has played a big part in that success. And it's found in nearly every AAA top title in some form or another. For example, with path-finding for agents to find their way around the world, character behavior, including squad tactics for war games. And AI opponents in all kinds of games-- racing, sports, and strategy games. And of course, even with things like Kinect even involved with the controller itself.

But AI is not just being used as a tool or a component for games. It is also being used for a certain genre of games as the central gameplay element upon which the game itself is actually built. So I'm going to talk about a few of those games and the history of the evolution of that kind of game. And it's a bit of a personal take on that history. And I'm going to focus on some of the games that I personally worked on.

So these types of games, games that used AI as a core gameplay component, sort of started in the early 90s with games like Sim City and also a game I worked on called Theme Park. The idea of Theme Park was that the player built their own Disneyland. And then once you've built this Disneyland, hundreds of autonomous agents came into your theme park and played on the rides and bought things from your shops.

And underlying this game was a fairly complex for its time economics model, the main challenge of which playing the game you had to balance. So the better you made the rides, the more fun the people had and the more you could charge them for things like Pepsi.

So that led to a whole genre of games called Management Simulation games. And then in 2001, we worked on a game called Black & White. So in this game, the idea was that the player was a kind of god and was battling against other gods. And your physical presence in the game world was in the form of these mythical beasts, the mythical creatures. Here you see a lion here.

And the player actually reared these creatures from birth, literally from very young. And depending on how you treated the creature, they became good or evil, which would have its uses, depending on which way you went. So this is a good lion. And evil ones would stamp on the villagers and your followers and so on and eat them. And even today, this is still one of the most complex reinforcement learning agents ever to have been built.

And then with management and simulation games, this came to fruition for me with Republic: The Revolution, which was done by my company in 2003. And in this game, the idea was that the player was a revolutionary. And we actually for this game simulated a whole living, breathing country, a fictional ex-Soviet Republic called Novistrana.

And this game world literally had hundreds of thousands of people that went about their daily lives, went to work, went out in the evenings and had political affiliations. And you as the player had to influence this world. So the idea of this game was that we wanted to make you feel maybe a glimpse of what it would be like to be Che Guevara, to inspire revolution.

And the main difficulty with this game, actually, the main innovation on the AI side was what we called level of detail AI, which is that these kind of games have to run on standard home PCs so we don't have super computers to run these things on. This was in 2003.

So in order for the home PC to be able to run all the graphics, as well as the AI, we created this system called level of detail AI. Which is that any agents, of which there are hundreds of thousands, that weren't in the current cone of view or near the player, we would compress and run in a lossless state. And then decompress them as the player moved around the game world.
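
The general shape of a level of detail scheme can be sketched as follows. The game's actual compression method is not described in the talk, so everything here is an assumption: a hypothetical `Agent` with a single `energy` value, a circular view radius instead of a view cone, and far-away agents that only count skipped ticks and catch up exactly when the player comes near.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    x: float
    y: float
    energy: float = 100.0
    pending_ticks: int = 0  # ticks skipped while out of view


def tick(agents, player_xy, view_radius=10.0):
    """Advance the world one step; return how many agents ran in full detail."""
    px, py = player_xy
    detailed = 0
    for agent in agents:
        near = (agent.x - px) ** 2 + (agent.y - py) ** 2 <= view_radius ** 2
        if near:
            # Replay the skipped ticks plus this one, so nothing is lost.
            agent.energy -= 1.0 * (agent.pending_ticks + 1)
            agent.pending_ticks = 0
            detailed += 1
        else:
            agent.pending_ticks += 1  # cheap bookkeeping only
    return detailed


agents = [Agent(0.0, 0.0), Agent(100.0, 0.0)]
counts = [tick(agents, (0.0, 0.0)) for _ in range(3)]  # player near agent 0
arrived = tick(agents, (100.0, 0.0))                   # player moves to agent 1
```

The cost per tick then scales with the number of agents near the player, with only a counter increment for the rest.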

And finally, no discussion of AI in games would be complete without talking about the most famous one, the range of games under the title of The Sims. And this was designed by the game genius Will Wright for his company Maxis. And there's several iterations of this. And this version I'm showing here is Sims III done in 2009.

And here the scale is completely different from a game like Republic, where now we're focusing on a single family and your interactions with that family. So you can sum up those kinds of games with Maslow's Hierarchy of Needs, which is a classic theory of human motivation. Haven't really got time to go into this.

But basically, games have so far dealt with this bottom level, the physiological level-- breathing, food, water, sex, sleep, and so on-- and a little bit of the next level, but nothing up here at the higher levels of things like human morality, creativity, and so on. So whilst a lot has been achieved in games, and collectively the games I've talked about have sold millions of copies, there's still a ton of stuff to be done in games.

So I'll just finish with a couple of slides about the future. So where do I see the direction of AI going in games itself? Well, we want to create game characters with more depth, with things like emotion and memories and hopes and dreams. Games agents and opponents that learn and improve and adapt to the player. Of course, there's the old chestnut of conversing with game characters, which of course, will need natural language processing advances. And also using AI to make educational games that actually make learning fun.

But the one I'm most excited about is what I call dynamic story line generation. So I count on this when I ask myself, you know, what is it that makes games special as compared to other entertainment media like films and novels? Well I think I would argue that it's the ability to allow the audience, in this case the player, to actually influence the outcome of the narrative themselves. So that's something that none of these other media can offer.

So if that's what makes games truly distinct from these other types of entertainment, then one can argue that the ultimate game is, therefore, to give the player complete freedom of choice. But somehow also ensure that whatever choice they make, even if it's a stupid choice, is always compelling and fun. So that's a really difficult balance to make.

And so game production teams know this. And there's really only two ways of dealing with this problem if you follow this line of argument. One is to special case all decision points, which is incredibly expensive and needs incredible amount of talent in terms of writing and so on. So you literally special case every decision a player could make.

Or the other alternative and more attractive alternative is to make the environment intelligent and allow it to react intelligently to the way the player interacts with it. And then the dream would be, then, to create games where every player's experience of the same game ends up being unique to them.

And then my final slide is perhaps to me now even more interesting, which is, what can games do in the role of developing AI? So I believe actually that games could end up being a very useful platform for developing AI algorithms themselves.

So there's two types of platform I'm thinking about. One is current state of the art games, say for example, real time strategy games like StarCraft, which I think is the next step up in difficulty from abstract games like Go. So we have in games like StarCraft massive action sets and continuous and dynamic environments.

But the sort of thing that I'm working on is actually creating bespoke platforms for AI development that use games technology. So for example, rich 2D grid worlds, which include realistic perception and allow for a wide range of highly configurable problems in a unified framework. So this gets around the problem that we have in a lot of academic research where we special case the solutions. So I think it's the right balance of complexity between toy problems that we often use in academia that can be special cased and real world that is too messy to deal with.

And finally, I think there's another thing that this gains us, which is that it avoids robotics. And I think robots are cool. But they're very intensive in terms of the engineering effort. And that engineering effort can sometimes be a distraction in terms of concentrating on what makes them intelligent.

And then, finally, I think this is a good way to commercialize AI improvements. And this is actually the approach that we're using at the new company that I've co-founded called DeepMind.

So just to summarize, I think games are a great commercial domain for the application of AI. And also games are the perfect platform for the further development of AI algorithms.

[APPLAUSE]

POGGIO: Vikash.

MANSINGHKA: So I like to think about computer science as actually an early outgrowth of artificial intelligence. And my favorite illustration of that is actually the word computer. Right? People of my generation often forget this, but computers were once people. They were trained people who did arithmetic calculations by hand, sometimes with mechanical tools, usually in service of science and engineering. So a helpful metaphor, it's kind of like a very early version of Amazon's Mechanical Turk. Right?

Now once we started to understand the processes involved in arithmetic, and we started to understand how to break them down epistemologically and then in terms of sort of simple elementary logic operations, we were able to build machines that could do the same thing. So that sort of led to this notion of the electronic computer, which has now spread everywhere.

But there's something interesting about where computing has gone especially in relation to the boundary between computing and artificial intelligence. So we've heard throughout this symposium, and actually really beautifully today, that sort of at the frontier of computation the distinction between the problems we think of as easy for computers, like calculation, adding 2 plus 2, right, and the problems we'd really like that seem to require AI techniques, the heart of it seems to involve inferring causes behind data that we observe. And choosing good actions in response.

So one of my favorite examples is personalized medicine, a pressing problem. We have a wealth of genomic data and health information about patients. And yet, we still don't really know, except in some very simple cases, which combinations of genetic markers are really causally responsible for response to which treatment.

You see the same theme that inferring the causes behind data is at the heart of AI computations in many other areas. So I won't go into all the details. But the interesting thing is that although we've seen remarkable successes, when we do this kind of work with our computing machines, as opposed to arithmetic, it seems very hard.

That difficulty ultimately boils down to the fact that when you're doing inference, as opposed to calculation, there's just a whole lot of possibilities to wrangle with. Right? So we heard about how many different types of bodies there are for the Kinect system to deal with, as one example. Or how many different configurations of pedestrians there are in the world.

And so although there's a very simple mathematical theory that in principle lets us get good answers, and this Bayes' rule here is sort of one expression of it, just directly implementing it by calculation seems hopeless. And that's basically because there are too many causes.

So in the genetics example, let's say there are only 200 genes. There are more possible combinations of genes, if there are just a total set of 200, than there are particles in the universe. So enumerating them would be completely hopeless, let alone weighing them in light of the data. All right. So this leads to this art of AI engineering where people try to approximate sort of the dictates of this formula.
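To make the enumeration problem concrete, here is a minimal Python sketch of Bayes' rule applied by brute force. The toy model is an assumption made purely for illustration: a hypothesis is a subset of markers, a patient responds with probability 0.9 when carrying every marker in that subset and 0.1 otherwise, and the prior is uniform. None of these names or numbers come from the talk.

```python
from itertools import product

def likelihood(data, hypothesis):
    """P(data | hypothesis) under the assumed toy response model."""
    p = 1.0
    for markers, responded in data:
        # Does the patient carry every marker the hypothesis calls causal?
        carries_all = all(m for m, used in zip(markers, hypothesis) if used)
        p_respond = 0.9 if carries_all else 0.1
        p *= p_respond if responded else 1.0 - p_respond
    return p

def enumerate_posterior(n_markers, data):
    """Bayes' rule with a uniform prior, scoring all 2**n_markers hypotheses."""
    hypotheses = list(product([0, 1], repeat=n_markers))
    weights = [likelihood(data, h) for h in hypotheses]
    total = sum(weights)
    return {h: w / total for h, w in zip(hypotheses, weights)}

# Hypothetical patients: (markers carried, responded to treatment?)
data = [
    ((1, 1, 1), True), ((1, 0, 1), True), ((1, 1, 0), False), ((0, 1, 1), False),
    ((0, 0, 0), False), ((1, 0, 0), False), ((0, 0, 1), False),
]
posterior = enumerate_posterior(3, data)
best = max(posterior, key=posterior.get)
```

With three markers the table has only eight rows. The same function needs `2**n_markers` rows in general, so each added marker doubles the work, which is why direct enumeration stops being an option long before 200 markers.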

We've been exploring a different approach. So rather than try to use devices designed for calculation where you put in a program which specifies some function for turning an input into an output, we've been developing a different model of computation designed around inference by guessing. So the idea is this. Instead of giving the computer a function to calculate, you give it a space of possible explanations to consider. So you do that by telling it how to guess one of them.

So the machine's job then is to take that way of guessing and some data and explore the space of possible guesses the machine could have made in light of the data and hand you back a probable one. And our hope is that by making machines that are built from the ground up to do guessing instead of calculation we can avoid some of the tractability issues that people have struggled with in artificial intelligence.
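One very simple way to picture "inference by guessing" is rejection sampling: run the guessing program many times and keep only the guesses whose simulated data match what was observed. The Python sketch below is an illustration under assumptions of my own (a coin with one of five possible biases, ten flips); it is not the system described in the talk.

```python
import random

BIASES = [0.1, 0.3, 0.5, 0.7, 0.9]  # assumed space of possible explanations

def guess(rng):
    """The 'way of guessing': pick an explanation, then simulate data from it."""
    bias = rng.choice(BIASES)
    heads = sum(rng.random() < bias for _ in range(10))
    return bias, heads

def infer(observed_heads, n_samples, rng):
    """Keep guessing; retain only explanations that reproduce the observation."""
    accepted = []
    while len(accepted) < n_samples:
        bias, heads = guess(rng)
        if heads == observed_heads:
            accepted.append(bias)
    return accepted

rng = random.Random(0)
samples = infer(observed_heads=7, n_samples=200, rng=rng)
most_probable = max(set(samples), key=samples.count)
```

The program never computes a probability. The retained guesses are distributed according to the posterior, so the most frequent one is a probable explanation. Rejection sampling wastes most of its guesses when matches are rare, which is one reason to explore the space with a random walk instead.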

So we currently simulate these machines on top of commodity hardware. But we've also just begun to explore what the hardware substrates would look like if you were building physical machines for guessing instead of arithmetic. So I'm just going to give a couple of illustrations of what these machines look like and sort of how they behave.

So let's take one illustrative example worked out by one of my colleagues, Keith Bonawitz: breaking CAPTCHAs. So a CAPTCHA is nice in that it's a thing that's very easy for a machine to generate as some image. But it's supposed to be hard for a machine to work backwards from. It's supposed to require human intelligence in some sense.

So we're going to break CAPTCHAs by just taking a program that generates random CAPTCHAs and feeding it into a machine along with a particular CAPTCHA. And asking the machine, how did you probably run to produce that CAPTCHA?

So how might one write a program that guesses CAPTCHAs? Well, maybe you write something that chooses some letters and puts them in a random location. So maybe you guess five. And then it renders an image. And then you add some noise to make it harder to read. So you blur it and, you know, add a few errors. So writing this kind of program is pretty straightforward.

And then we're going to say, okay, machine, this program produced this particular CAPTCHA. How did you probably run? Okay.

So the code to do this takes about a page in our probabilistic language. And here what I'm showing is on the top, an input CAPTCHA. And on the bottom, we're going to watch a random walk through the space of possible explanations for this CAPTCHA in light of the program. So you can see actually as soon as it started it pretty quickly got kind of into the right ballpark. And now it's sort of exploring alternate names for my company.

It's slowly going to delete the letters until eventually it'll converge on the right explanation for the CAPTCHA. So it's bizarre, but when you have machines that are designed to make guesses, they start to behave as though they're imagining alternative explanations for the data that they observe.
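The random walk through explanations can be sketched with a Metropolis sampler on a toy stand-in for a CAPTCHA. Everything specific here is an assumption for illustration: the "renderer" turns each letter into five pixels (its alphabet index in binary), the noise model flips each pixel with probability `FLIP_PROB`, and the text length is fixed at five, so unlike the demo this walk never inserts or deletes letters.

```python
import math
import random
import string

ALPHABET = string.ascii_uppercase
FLIP_PROB = 0.05  # assumed per-pixel noise level in the toy model

def render(text):
    """Toy renderer: each letter becomes 5 pixels (its index, low bit first)."""
    pixels = []
    for ch in text:
        index = ALPHABET.index(ch)
        pixels.extend((index >> bit) & 1 for bit in range(5))
    return pixels

def log_likelihood(text, observed):
    """Log-probability that rendering `text` plus pixel flips yields `observed`."""
    mismatches = sum(a != b for a, b in zip(render(text), observed))
    matches = len(observed) - mismatches
    return mismatches * math.log(FLIP_PROB) + matches * math.log(1 - FLIP_PROB)

def random_walk(observed, n_letters, steps, rng):
    """Metropolis walk over candidate texts; returns the best text visited."""
    current = "".join(rng.choice(ALPHABET) for _ in range(n_letters))
    current_ll = log_likelihood(current, observed)
    best, best_ll = current, current_ll
    for _ in range(steps):
        pos = rng.randrange(n_letters)
        proposal = current[:pos] + rng.choice(ALPHABET) + current[pos + 1:]
        proposal_ll = log_likelihood(proposal, observed)
        # Symmetric proposal and uniform prior: accept with min(1, likelihood ratio).
        if rng.random() < math.exp(min(0.0, proposal_ll - current_ll)):
            current, current_ll = proposal, proposal_ll
            if current_ll > best_ll:
                best, best_ll = current, current_ll
    return best

# Observed "image" without flipped pixels, to keep the sketch easy to check.
observed = render("GUESS")
explanation = random_walk(observed, n_letters=5, steps=5000, rng=random.Random(0))
```

The walk occasionally accepts worse explanations, which is what lets it wander through alternatives before settling near the text that best accounts for the pixels.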

Okay. So what are we doing with this kind of capability commercially? Well, our first product is based on the following observation. Although information technology has made it enormously easier to capture and query digital data, the thing that really gates people getting use out of it is humans telling stories about it, humans with statistical training. So machine learning is a part of that process, modeling, but really, there's this complicated iteration with the human expert in charge in many applications.

So we've built a system called Veritable, which we like to think of as sort of a statistical analyst or a statistical intern in a box. And what it does is it considers a hypothesis space of very, very many causal explanations for tabular data, including things like which variables might possibly interact with each other and which ones might not, using the same inference machinery that I showed you earlier. And then it can make predictions to fill in all of the missing values in a given table of data.

So one example, so here's a table from a health economics problem where each row is a hospital in the US. And each column is a variable measuring sort of how costly care at that hospital is, what the reported quality is, and so on.

So in just a few minutes of computation, our system was able to figure out, for example, that only the other quality scores with very high probability are causally related to quality. That means there's something out there in the world that makes hospitals good. And it has nothing to do with how big they are, how much capacity they have, how much it costs them to run. And that kind of statistical finding, which really requires a whole lot of internal validation, is actually the centerpiece of a New Yorker study that was actually somewhat influential in the health care debate.

Okay. So now you've gotten just sort of a flavor of a couple of different applications of machinery designed for inference. Let's talk a little bit about what the hardware to support it might look like. So there is this kind of bizarre thing that happens when you do a lot of probabilistic reasoning with computers. You realize, especially when you start doing it in the style that I just showed, where the machine's job is only to make guesses not really to calculate probabilities.

You have these chips which have billions of elements on them. And you're requiring them to do billions of steps in a row exactly the same way every single time. And they were manufactured with enormous precision and cost. Right? And you're using them for operations that are fundamentally random.

So you know, I actually think Watson illustrates this beautifully. If you compare and contrast the efficiency of the Watson computer to its human designers, it's kind of crazy. Right? It requires maybe 50,000 times the power and it's maybe 10 million times faster in terms of its switching elements. And that's all because it's frantically trying to calculate probabilities really, really quickly, as opposed to the brain which might actually be doing something that's a bit more organized around guessing.

So we've been exploring building hardware architectures designed to implement this model of computation for guessing. It's only 80? Oh, beautiful. All right.

Where instead of having gates like and, the and gate, which implements a logical connective, we have randomized gates, so rand gates that implement stochastic systems. They flip coins. And the noise is not an error. That's part of the behavior that lets the system make good guesses.
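A software caricature of the idea, in Python: a gate whose output is a coin flip rather than a fixed function of its inputs. The function names and the particular composition below are hypothetical and are not taken from the hardware described in the talk.

```python
import random

def rand_gate(p, rng):
    """Stochastic gate: outputs 1 with probability p, otherwise 0."""
    return 1 if rng.random() < p else 0

def and_gate(a, b):
    """Ordinary deterministic AND, for contrast."""
    return a & b

def estimate_joint(p, q, trials, rng):
    """Feed two independent rand gates into an AND gate and count the 1s."""
    hits = sum(and_gate(rand_gate(p, rng), rand_gate(q, rng)) for _ in range(trials))
    return hits / trials

rng = random.Random(0)
frequency = estimate_joint(0.5, 0.5, trials=20000, rng=rng)
```

The fraction of 1s at the output approaches `p * q`, so the circuit represents a product of probabilities through its random behavior without ever multiplying numbers.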

So an early architectural application we've done using this is to make a simple probabilistic video processor, which is designed to guess the causes behind sort of video motion, basically, to guess what the motion field is that explains a movie. And then we get some pretty interesting speed advantages. So this processor and the movie were made by my colleague Eric Jonas. He was a good sport.

So I just want to close by saying the thing that's exciting to me about this time in AI is that we've seen that many, many applications really boil down to just a handful of primitive operations, the operations of reasoning under uncertainty. And although these seem very, very hard technically, and we've had some great successes, there's actually hope for maybe removing a lot of that complexity.

So what if we reconsidered what computing is and what computing machines are, and we built our machines to do inference by guessing from the start? And maybe spread them everywhere that devices that calculate have already gone. Thank you.

[APPLAUSE]

POGGIO: Kobi Richter will close the presentations.

RICHTER: Good afternoon. You must be tired and bored. I'm the last and probably the least. Speaking after Google and IBM reminds me of when we started Medinol. Most of the employees we recruited were immigrants from the former Soviet Union that came to Israel in millions. And I had about 15 former railroad engineers that we made into stent manufacturers. And I told them that the only thing I can think of that links the Israeli railroad system with the Russian one is the width. The same way, I feel after Google's presentation that the only thing that combines me with Google is that we also have a dollar sign in front of our numbers.

The short presentation, I hope I will be making, is in honor of the contribution of David Marr that I was lucky to come here to MIT to work under in his last year of academic activity. And I will start with I was wondering who is David Marr today?

And when you want to do that, you Google him. You go into Wikipedia. And I was interested to find that Wikipedia knows, or the world knows, who he was. And that his seminal work, first of all in collaboration with Tommy Poggio, had to do with the tri-level hypothesis, with the three levels of analysis: the computational level, the algorithmic [INAUDIBLE] representation level, and the implementation level.

The work of David Marr, the very short but amazing contribution, was summarized by Tommy Poggio about a year after his death. And the one thing that I found very inspiring is that, aside from David Marr, the interesting adventures, excitement, and fun await those who will advance this framework. This was said about 30 years ago.

I worked together with Shimon Ullman, mainly trying to find the way some of his ideas of early processing are implemented in the human brain. David Marr basically started by showing, first of all, that the Laplacian of Gaussian could be useful in efficiently representing images. And that the difference of Gaussians, which is implementable in a combination of cells in the retina, is very similar to it.

He showed how useful the zero crossings in difference of Gaussians could be in analyzing images. Later, in his work with his students here, with Ellen Hildreth in '80, he showed how the processing of images into zero crossings helped to very efficiently do three-dimensionality, motion analysis, and so on. Together with Tommy and Francis Crick (and later Poggio, Ullman, and Foley improved it), he showed that hyper-acuity, our ability to judge the relative position of lines, for example, at about 10 times better resolution than the resolution of our receptors, was enabled by similar processing in the retina.
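The role of zero crossings can be seen in one dimension with a few lines of Python. This is a sketch under assumptions that are not from the talk: a step edge as the test signal, a narrow Gaussian with sigma 1.5, a wide-to-narrow sigma ratio of 1.6, and a kernel truncated at radius 8.

```python
import math

def gaussian_kernel(sigma, radius):
    """Sampled Gaussian on [-radius, radius], normalized to sum to 1."""
    weights = [math.exp(-(x * x) / (2 * sigma * sigma)) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def dog_kernel(sigma, radius, ratio=1.6):
    """Difference of Gaussians: narrow center minus wider surround."""
    narrow = gaussian_kernel(sigma, radius)
    wide = gaussian_kernel(sigma * ratio, radius)
    return [n - w for n, w in zip(narrow, wide)]

def filter_valid(signal, kernel):
    """Slide the (symmetric) kernel over the signal, keeping full overlaps only."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def zero_crossings(values, tol=1e-9):
    """Indices i where the response changes sign between i and i + 1."""
    return [i for i in range(len(values) - 1)
            if values[i] * values[i + 1] < 0
            and min(abs(values[i]), abs(values[i + 1])) > tol]

radius = 8
signal = [0.0] * 30 + [1.0] * 30           # step edge between samples 29 and 30
response = filter_valid(signal, dog_kernel(1.5, radius))
crossings = zero_crossings(response)       # indices into `response`
edge_positions = [i + radius for i in crossings]  # map back to signal samples
```

The filtered signal is negative just before the step and positive just after it, so the single sign change marks the edge. The `tol` guard only suppresses sign flips caused by floating-point rounding where the response is essentially zero.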

The first thing I did together with Shimon Ullman was developing a retinal model based on comparison to all the physiological recordings that were available to us at the time, with the exact response transfer function and time delays between receptors, horizontal cells, bipolar cells, and into the ganglion cells, with the inhibitory effect of amacrine cells. We analyzed the spatial combination of such receptive fields of ganglion cells and the time, the temporal.