Project Athena - X Window System Users and Developers Conference, Day 1 [4/4]

[MUSIC PLAYING]

CARLING: My talk is on Pickling and Embellishing Widgets. First, let me explain what these two terms mean. Pickling refers to having an efficient format for storing the user interface separately from the application, so that when the application is run, all this information can easily be loaded into it. Embellishing refers to adding graphical detail, similar to some of what we just heard about, where we add small rectangles, vectors, and so on to give the user interface more realism.

Briefly, I'm going to first show a short movie, then explain some of the principles involved, then describe the Widget Editor and the way I handled embellishing, then explain pickling and the storage techniques used, then go over some further X Toolkit extensions I'd like to see implemented, and finally give a quick summary.

OK. I hope you can see. Play it.

[VIDEO PLAYBACK]

- I'm Richard Carling from MASSCOMP, and on this tape I'm going to show some of the user interface capabilities we've developed here. The first thing we're going to show is a product called Laboratory Workbench. It does data acquisition using our graphical interface.

The first thing I'm going to do is build a simple data flow diagram. I'll pick up the instruments I want to use for this particular diagram: here, just a simple test signal and a scope. I'll connect them together, and when I run it, I get a graphical display of the data.

The graphical display lets you control a number of things. You can resize the screen, you can move things around, and you can change the controls on the information you're seeing.

Now we're going to show a somewhat more complicated instrument, which highlights some of the output capabilities of our current user interface.

This particular data flow diagram consists of a standard plot and also a dB plot. There's an analog input scope, a power spectrum, and an X-Y plot. Currently, it's taking input from a microphone and displaying it both as raw input and, after the power spectrum, in the right-hand plot.

With the interface, we're able to manipulate the visual characteristics while the data is being taken in, so the application is actually running full blast [INAUDIBLE] able to control the user interface and change parameters of the program. I actually shorted it out there for a second by touching the back of the microphone.

This is an example of some of the buttons we've created using our object editor. As you can see, these buttons have a bit more graphical detail than you would normally need, but we find that this kind of detail adds realism, gives the user more of a "soft machine" feel, and makes it more natural to understand what's going on.

The first button has a subobject, and that subobject is what gets lit or unlit depending on the state of the button. The second has backlighting, which lights up as if there were a light bulb behind the button. On the third, the color changes when it's selected, showing that we can change the characteristics of the object.

The fourth object is also a tint-lighting or backlighting object, but NTSC video doesn't do it justice. This last object uses an indirect object to show the state of the button.

Now we're going to show some slide dials we've developed. As you can see, the thumb on these dials has a lot of graphical embellishments, and as you move the thumb up and down, the embellishments track it. We actually use a subwidget for that here. Here's a further extension, where we put a numeric display widget on top of the thumb and also include the graphical embellishments.

Now we're going to look at the scope we had in Laboratory Workbench. First, we'll show you that it's made up of a bunch of different fields. This is a label field, which is also a move bar. That's a numeric field that tells us which scope it is. That's the actual scope display area. Over here is essentially a prompt field that gets updated with various information from the application.

Here we have some controls that send values back to the application: a slide dial, some more controls, a resize box, another prompt area, and another scroll box. That gives the user control over quite a lot of information in this one widget.

The widget can be enabled and moved and resized. And a lot of the parameters are actually manipulated while in the Widget Editor.

[END PLAYBACK]

OK, all of this is being done in the Widget Editor.

[VIDEO PLAYBACK]

- Now we're going to disassemble the parts to show that it's actually made up of a bunch of components that are hierarchically layered together to create the composite widget.

Now we're going to create a simple pulldown menu. Here we select how many entries we want: seven. Then we define the area for the pulldown menu.

Now we're going to create a horizontal menu, define four entries for it, and define the area for that menu. Then we rename the pulldown menu for convenience.

Finally, we tie the pulldown menu to the horizontal menu. And then when we activate the horizontal menu, the pulldown menu acts as we'd like. OK, that's it. Thank you for watching our videotape.

[END PLAYBACK]

Now, I should quickly mention that this wasn't using the X Toolkit. It's an internal user interface system we've developed, and we've used it for Laboratory Workbench, one of the products we've released, but we haven't released the system itself as a product. What we want to do is provide those sorts of capabilities for the X Toolkit.

Our basic goals are for the user interface to provide a high degree of flexibility in graphical representation, and to make the user interface as easy as possible to develop. Everything you saw in the movie was created interactively with the user interface editor.

There are a few concepts that are important for making this easy. The first is separating the application from the user interface. That lets us keep the user interface as data, which can then be modified either with a graphical widget editor or with languages and similar representations.

A further step we take, though this is arguable, is separating the semantics from the graphics. This lets us have a core, generic set of widgets that can provide a great deal of graphical flexibility. I also believe that graphical representations, in some sense, cross semantic boundaries.

For example, you could have a receiver with a lot of buttons that all look similar, yet many of them act quite differently: one may be a momentary button, and another may have little lights above it that tell you what mode you're in. In general, it's certainly a good idea to make your graphical representations match your semantics. But in reality, there may be cases where you want more flexibility and don't want to impose that policy.

The X Toolkit programming interface does a lot of good things. It provides runtime creation of the user interface, and it separates the application from the user interface.

The one slight drawback is that, since you create the actual user interface at runtime, you have to have XtCreateWidget-type calls in your application to do it. It handles graphical layout automatically, which is certainly quite nice, and it provides a good level of control over graphical appearance through the .Xdefaults file and the Resource Manager. The main drawback is that the graphical flexibility really isn't that great.

Further, there are a few problems with a runtime-only user interface. The most prominent is that as the user interface grows more complex, as you could see in Laboratory Workbench, it becomes more and more difficult to provide this sort of capability using runtime calls in an application. It's also more difficult to share the user interface between separate applications, because you have to go into the source code and pick out pieces of it.

Now, the ideal environment, to me, is for the application to just register a set of functions, similar to the DEC widget editor. What I have is an Xt register-function call, where you give a function pointer and an ASCII name for the function, and you register a whole list of functions that way. When the application's user interface is loaded, the names of the particular functions can be mapped to their actual pointers. So the application just registers its functions, the user interface takes control, and everything's done for you.
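In code terms, the registration scheme described above is a table from ASCII names to function pointers that a stored interface consults at load time. The Python sketch below illustrates the idea; `register_function`, `resolve_callbacks`, and the callback and widget names are hypothetical stand-ins, not the actual Xt call the speaker refers to.

```python
from typing import Callable, Dict

# Hypothetical registry: ASCII name -> application function.
_registry: Dict[str, Callable[[], str]] = {}


def register_function(name: str, fn: Callable[[], str]) -> None:
    """Record fn under an ASCII name so a stored user interface can refer to it."""
    if name in _registry:
        raise ValueError(f"function {name!r} is already registered")
    _registry[name] = fn


def resolve_callbacks(bindings: Dict[str, str]) -> Dict[str, Callable[[], str]]:
    """Map each widget's stored callback name to the registered function."""
    resolved = {}
    for widget, fn_name in bindings.items():
        if fn_name not in _registry:
            raise LookupError(f"widget {widget!r} names unregistered function {fn_name!r}")
        resolved[widget] = _registry[fn_name]
    return resolved


# Application side: register the functions once at startup.
def start_acquisition() -> str:
    return "started"


def quit_app() -> str:
    return "quit"


register_function("StartAcquisition", start_acquisition)
register_function("Quit", quit_app)

# Interface side: name bindings as they might be read from a pickled interface file.
callbacks = resolve_callbacks({"runButton": "StartAcquisition", "quitButton": "Quit"})
```

The application never names its widgets here; it only publishes functions, and the loaded interface decides which widget calls which.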

To provide these capabilities, we use the Widget Editor. It gives us an interactive way to create the user interface: its layout, its structure (the hierarchy in which the widgets are defined), the semantics of the particular widgets, and the graphical details any particular widget may have.

We also provide a mechanism to store the user interface separately, so the application can load it when it's run. Finally, these capabilities make it much easier and quicker for the user to experiment with different user interface representations, because they don't have to go into the application, modify it, and recompile and relink it.

In order to provide the graphical flexibility, we use the notion of display list widgets. A display list is [INAUDIBLE] similar to a set of X calls, which is executed in order and provides some sort of graphical image on the screen.

The nice thing is that while the general widget structure is fairly inflexible, the display list gives us a lot of flexibility in what's actually stored there. To represent the various states of a widget, we provide multiple display lists. This also gives us a bit more flexibility, because different states can have quite different graphical representations.

One quick point: to make this more practical in the Widget Editor, we provide a mechanism where all the widget states are available at once, so you can create one state, copy it to another, copy that to another, and make modifications as you go. That gives you an easy way to move between the various display lists in the Widget Editor.
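One way to picture per-state display lists and the copy-then-modify workflow: each widget holds one display list per state, and copying a state duplicates its list so that edits to the copy leave the original alone. This is a hypothetical Python sketch; the class name, the state names, and the list-of-lists instruction encoding are assumptions.

```python
import copy
from typing import Dict, List


class MultiStateWidget:
    """A widget that keeps one display list per state."""

    def __init__(self, states: List[str]) -> None:
        self.display_lists: Dict[str, List[list]] = {s: [] for s in states}
        self.state = states[0]

    def copy_state(self, src: str, dst: str) -> None:
        # Deep copy so later edits to dst do not leak back into src.
        self.display_lists[dst] = copy.deepcopy(self.display_lists[src])

    def current_display_list(self) -> List[list]:
        return self.display_lists[self.state]


button = MultiStateWidget(["unset", "set", "insensitive"])
button.display_lists["unset"] = [
    ["rect", 0, 0, 40, 20, "gray"],
    ["line", 0, 20, 40, 20, "black"],
]
button.copy_state("unset", "set")
button.display_lists["set"][0][5] = "red"  # light the lamp only in the "set" state
button.state = "set"
```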

The format of the display list is basically just an opcode followed by some data, then another opcode, then more data. The opcodes tell the user interface system which registered routines to use to operate on that data. So the user interface's own intrinsics, in a sense (separate from the X Toolkit Intrinsics, which of course are still there), don't have to know how that data is represented.
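A minimal sketch of the opcode-and-data interpreter, assuming instructions are held in memory as `(opcode, operands)` pairs. The opcode indexes a table of registered routines (the jump-table approach mentioned later in the Q&A), and the routines here record strings in place of real Xlib calls. All names are illustrative.

```python
from typing import Callable, List, Tuple

# One instruction: (opcode, operands). The interpreter never inspects the operands;
# it only uses the opcode to find the routine registered for it.
Instruction = Tuple[int, Tuple[int, ...]]


def draw_line(out: List[str], data: Tuple[int, ...]) -> None:
    x1, y1, x2, y2 = data
    out.append(f"XDrawLine {x1},{y1} -> {x2},{y2}")  # stand-in for the real X call


def fill_rect(out: List[str], data: Tuple[int, ...]) -> None:
    x, y, w, h = data
    out.append(f"XFillRectangle {x},{y} {w}x{h}")  # stand-in for the real X call


# The opcode is an index into this table, so dispatch is a single lookup.
OP_LINE, OP_RECT = 0, 1
DISPLAY_ROUTINES: List[Callable[[List[str], Tuple[int, ...]], None]] = [draw_line, fill_rect]


def execute(display_list: List[Instruction]) -> List[str]:
    """Run each instruction in order, as a redisplay routine would."""
    out: List[str] = []
    for opcode, data in display_list:
        DISPLAY_ROUTINES[opcode](out, data)
    return out


button_face = [(OP_RECT, (0, 0, 40, 20)), (OP_LINE, (0, 20, 40, 20))]
calls = execute(button_face)
```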

The benefits of this mechanism are that it gives us much greater graphical flexibility, and it separates the graphics from the source code. We don't have to go back into the code to change the graphics, which is how widgets are currently implemented in the toolkit.

It's a symbolic representation. So you can go in with the Widget Editor, and similar to MacDraw, move vectors around and move rectangles around, and have all that information symbolically there.

It's extensible; I'll briefly go over the mechanism we have. Because of the display list, new instructions are easy to add, and you don't have to go mucking through a bunch of code to implement them. It also provides a great deal of end-user flexibility, in that you don't have to give end users source code or linkable code: with just a Widget Editor, they can go in and make changes to the user interface.

There are a few enhancements I make to the display list. One is that, to make it version-tolerant, we precede each opcode with a length field giving the length of that instruction. Suppose we store a user interface on disk in an efficient binary format, with perhaps 100 widgets stored together, and someone working on a newer system happened to use a couple of instructions that the application we're currently running doesn't recognize. With the length field there, the system can simply skip over the information it doesn't understand and carry on gracefully.
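The version-tolerance trick can be sketched with Python's `struct` module. The byte layout (a 2-byte payload length, then a 2-byte opcode, then the payload) is an assumption for illustration, not the format used in the speaker's system; the point is that a reader that meets an unknown opcode can still step past it using the length.

```python
import struct
from typing import Dict, List, Tuple

# Assumed layout per instruction: payload length (2 bytes), opcode (2 bytes), payload.
HEADER = struct.Struct(">HH")


def encode(instrs: List[Tuple[int, bytes]]) -> bytes:
    out = bytearray()
    for opcode, payload in instrs:
        out += HEADER.pack(len(payload), opcode)
        out += payload
    return bytes(out)


def decode(blob: bytes, known: Dict[int, str]) -> Tuple[List[Tuple[str, bytes]], int]:
    """Return the recognized instructions and how many unknown ones were skipped."""
    pos, result, skipped = 0, [], 0
    while pos < len(blob):
        length, opcode = HEADER.unpack_from(blob, pos)
        pos += HEADER.size
        payload = blob[pos:pos + length]
        pos += length  # the length field lets the reader step over anything it doesn't know
        if opcode in known:
            result.append((known[opcode], payload))
        else:
            skipped += 1
    return result, skipped


rect = struct.pack(">4h", 0, 0, 40, 20)
line = struct.pack(">4h", 0, 20, 40, 20)
newer = b"\x01\x02\x03"  # opcode 9, written by a newer editor this reader doesn't know
blob = encode([(1, rect), (9, newer), (0, line)])
instrs, skipped = decode(blob, known={0: "line", 1: "rect"})
```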

This slide might be a little complicated. Basically, there's one problem with using display lists when everything is integer-based, as it is in the X Toolkit: if you size a widget really small and then really large again, the coordinates get truncated to integers along the way, so when you size it back up, everything shifts into the wrong place.

A way around this is to keep both a floating-point and an integer representation in the internal format of the display list. When the display list is stored on disk in ASCII format, the user can easily edit the actual integer values. But when it's loaded, we convert them to floating-point numbers between 0 and 1 that give positions scaled from the top-left corner of the widget to the bottom-right corner. When the resize routine runs for a particular instruction, it uses these floating-point numbers to recreate the integer values, which the redisplay routine then uses to display the widget efficiently.

We could have gone straight to a floating-point description, but then you would effectively be resizing the widget every time you display it. It's basically a performance issue.
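The truncation problem and the fractional-coordinate fix can be shown side by side. In this sketch (names and numbers are hypothetical), a rectangle's integer coordinates are converted once into fractions of the widget size; the resize routine recomputes integers from those fractions, whereas the naive version rescales the previous integers and drifts.

```python
from dataclasses import dataclass
from typing import Tuple

Coords = Tuple[int, int, int, int]


@dataclass
class RectInstr:
    # Position and size as fractions (0..1) of the widget, computed once at load time.
    fx: float
    fy: float
    fw: float
    fh: float


def load_rect(coords: Coords, widget_w: int, widget_h: int) -> RectInstr:
    """Convert the integer values from the ASCII file into widget-relative fractions."""
    x, y, w, h = coords
    return RectInstr(x / widget_w, y / widget_h, w / widget_w, h / widget_h)


def resize_rect(r: RectInstr, widget_w: int, widget_h: int) -> Coords:
    """Recompute integer coordinates for the redisplay routine from the fractions."""
    return (round(r.fx * widget_w), round(r.fy * widget_h),
            round(r.fw * widget_w), round(r.fh * widget_h))


def resize_int_only(coords: Coords, old: Tuple[int, int], new: Tuple[int, int]) -> Coords:
    """The naive approach: rescale the current integers, truncating on every resize."""
    x, y, w, h = coords
    (ow, oh), (nw, nh) = old, new
    return (x * nw // ow, y * nh // oh, w * nw // ow, h * nh // oh)


original = (37, 13, 25, 9)  # as stored in the ASCII file for a 100 x 50 widget
instr = load_rect(original, 100, 50)

# Shrink to 7 x 4, then grow back to 100 x 50.
naive = resize_int_only(resize_int_only(original, (100, 50), (7, 4)), (7, 4), (100, 50))
tiny = resize_rect(instr, 7, 4)  # the fractions themselves are never modified
restored = resize_rect(instr, 100, 50)
```

The naive path ends at `(28, 12, 14, 0)`, while the fractional path returns to the original `(37, 13, 25, 9)`.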

To associate display lists with widgets, we simply store each display list directly with its widget in the file. Initially, I was going to use the Resource Manager, with a notion of subclasses for the various graphical descriptions. But I found that, for efficiency and for user convenience, it's easier to do it this way: the user can open an ASCII file and edit a particular widget's information if necessary, although usually they'd use the Widget Editor.

And not only can they edit the layout, they can also edit the graphical representation quite easily. Finally, for performance, it's simpler to load this one database than to merge a number of databases together.

The display instruction routines that have to be registered are: ASCII load, which converts the ASCII representation into a binary representation; ASCII save, which converts the binary representation back to ASCII; a resize routine, which resizes that particular instruction; a display routine, which makes the X calls required to display it; and some optional editing routines used inside the Widget Editor, so it's easy to create the instruction from the editor.

So in order to register new instructions in the display list, we first create a unique ASCII name for the particular instruction, then we create a non-conflicting opcode or index for that particular instruction, and then we define and list the various routines required to implement the particular instruction.

This is a simplified example of the kind of ASCII file that would be used to register the instructions for a particular display list. On the left you have the opcode name, then the index, then the binary load routine, the resize routine, and the display routine. These three routines are the only ones really necessary for the runtime application. The Widget Editor uses the ASCII representations and, when the interface is saved, converts it into a binary representation, which is more efficient at run time.
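A registration file like the one described can be parsed into an opcode table that rejects conflicting indices. The column order (name, index, load, resize, display) follows the description above; the whitespace-separated syntax, the `#` comments, and the stub routines are assumptions made for this sketch.

```python
from typing import Callable, Dict, NamedTuple


class InstructionEntry(NamedTuple):
    name: str
    load: Callable[..., object]
    resize: Callable[..., object]
    display: Callable[..., object]


def parse_registration(text: str, routines: Dict[str, Callable[..., object]]) -> Dict[int, InstructionEntry]:
    """Build an opcode table from lines of: name index load resize display."""
    table: Dict[int, InstructionEntry] = {}
    seen_names = set()
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # allow comments and blank lines
        if not line:
            continue
        name, index, load, resize, display = line.split()
        opcode = int(index)
        if opcode in table:
            raise ValueError(f"opcode {opcode} used by both {table[opcode].name!r} and {name!r}")
        if name in seen_names:
            raise ValueError(f"instruction {name!r} registered twice")
        seen_names.add(name)
        table[opcode] = InstructionEntry(name, routines[load], routines[resize], routines[display])
    return table


def _stub(label: str) -> Callable[..., object]:
    # Placeholder routine that just reports its own name.
    return lambda *args: label


ROUTINES = {n: _stub(n) for n in (
    "line_load", "line_resize", "line_display",
    "rect_load", "rect_resize", "rect_display",
)}

REGISTRATION = """
# name  index  load       resize       display
line    0      line_load  line_resize  line_display
rect    1      rect_load  rect_resize  rect_display
"""

table = parse_registration(REGISTRATION, ROUTINES)
```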

Now I'm going to talk about pickling the widgets. First, I'd like to define a few terms. The first is the notion of an obedient widget. Right now we have something called a button box widget. In theory, if you have a button box widget with a bunch of children, you can leave those children unmanaged and the geometry manager shouldn't shift them around. But at least in the latest release, or one from a little while back, if you do that you end up with a little 10-by-10 square, which doesn't do you much good.

So I created an obedient widget: when you place a widget on it, it doesn't rearrange it at all, and when you scale the obedient widget, it scales all the widgets inside it uniformly.
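A hypothetical sketch of an obedient container: it grants every placement exactly as requested and, on resize, scales each child from the geometry it was placed with rather than from its last scaled size, so shrinking and regrowing the box is lossless. The class and method names are illustrative; this is not an X Toolkit geometry manager.

```python
from typing import Dict, Tuple

Geometry = Tuple[int, int, int, int]


class ObedientBox:
    """Container that never rearranges its children and scales them uniformly."""

    def __init__(self, width: int, height: int) -> None:
        self.design_w, self.design_h = width, height  # size at which children are placed
        self.width, self.height = width, height
        self._placed: Dict[str, Geometry] = {}

    def place(self, name: str, geometry: Geometry) -> None:
        # No layout policy: the requested geometry (in design-size coordinates) is kept as given.
        self._placed[name] = geometry

    def resize(self, width: int, height: int) -> None:
        self.width, self.height = width, height

    def geometry(self, name: str) -> Geometry:
        # Scale from the placed geometry, not from the last scaled result,
        # so shrinking and regrowing the box returns every child to where it was put.
        x, y, w, h = self._placed[name]
        sx, sy = self.width / self.design_w, self.height / self.design_h
        return (round(x * sx), round(y * sy), round(w * sx), round(h * sy))


box = ObedientBox(200, 100)
box.place("scope", (20, 10, 120, 60))
box.place("quit", (150, 80, 40, 15))
box.resize(400, 200)
```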

The primary widgets are all the widgets placed directly on this obedient widget. Let me back up and say that the top-level widget in the current X Toolkit doesn't support multiple children, so we have to put this button box, or this obedient widget, inside it as its one child to hold the multiple children we need. The primary widgets are the children one level below that.

This allows a two-tiered directory structure for the user interface. The first level holds all the application-wide information, including an archive file where the binary representations are stored concatenated together, plus a subdirectory for each primary widget.

We don't continue the directory hierarchy beyond this level, because we find it becomes harder for the user to browse. At this level of breakdown, it's usually easy for the user to look at an ASCII file, see what children it has, open one of those children, see what children it has, and do all of that in the same directory. So it's a good level of partitioning without making things too difficult for the user.

As I've mentioned, two types of representation are provided. One is the ASCII representation, which can be edited with an ordinary text editor if necessary. This is partly so that if a widget isn't working right, or someone just doesn't understand what's going on, they can always look at the ASCII representation and see exactly what's happening. The binary representation is used for performance inside the application, so that it doesn't take a great deal of time for the application to load all these user interfaces.

And to further improve the efficiency, we can concatenate all of these binary representations together with the archive facility, so that you only have to do one file open to read in all the widgets that you're using.
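The single-open archive idea can be sketched as one file holding a count followed by length-prefixed name and data records. This is a simple made-up container format for illustration (the talk refers to an archive facility, which this does not reproduce), and the widget payloads are dummy bytes.

```python
import os
import struct
import tempfile
from typing import Dict


def pack_archive(widgets: Dict[str, bytes]) -> bytes:
    """Concatenate binary widget records: a count, then (name length, data length, name, data)."""
    out = bytearray(struct.pack(">I", len(widgets)))
    for name, blob in widgets.items():
        encoded = name.encode("ascii")
        out += struct.pack(">HI", len(encoded), len(blob))
        out += encoded + blob
    return bytes(out)


def unpack_archive(data: bytes) -> Dict[str, bytes]:
    (count,) = struct.unpack_from(">I", data, 0)
    pos = 4
    widgets: Dict[str, bytes] = {}
    for _ in range(count):
        name_len, blob_len = struct.unpack_from(">HI", data, pos)
        pos += struct.calcsize(">HI")
        name = data[pos:pos + name_len].decode("ascii")
        pos += name_len
        widgets[name] = data[pos:pos + blob_len]
        pos += blob_len
    return widgets


def load_archive(path: str) -> Dict[str, bytes]:
    with open(path, "rb") as f:  # one open() call brings in every widget in the archive
        return unpack_archive(f.read())


widgets = {"scope": b"\x00\x08\x00\x01scope-dl", "quitButton": b"\x00\x04\x00\x00quit"}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "interface.ar")
    with open(path, "wb") as f:
        f.write(pack_archive(widgets))
    loaded = load_archive(path)
```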

Here's a brief example of the ASCII storage layout. The first thing you can see-- well, maybe I'll use this thing. And maybe I won't. Oh. I'll use my pen. Don't want to go blind trying to turn it on.

The first thing is the semantic information of--

AUDIENCE: [INAUDIBLE]

CARLING: Oh, OK. Just shooting it at the moon, I guess.

The first piece of information is the semantic, or class, information about the widget. The second is the widget's general layout, that is, where the widget is placed, relative to its parent widget.

The third piece, basically the rest of this section right here, is the display list information. Its first entry tells us how long the display list will be in the binary format. Then we have something similar to X calls, though these can be flexible, that define the graphical attributes of the particular widget.

Finally, we have some structural information, which lists the children of the particular widget. Notice I have a command widget here with some children on it.
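The slide with the actual ASCII layout isn't reproduced here, so the following sketch invents a plausible syntax with the same four parts: class information, geometry relative to the parent, a display list headed by its binary length with one indented line per instruction, and a list of children. Every keyword in it is hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class WidgetRecord:
    widget_class: str                          # semantic (class) information
    geometry: Tuple[int, int, int, int]        # x, y, width, height relative to the parent
    binary_length: int                         # display list length in the binary format
    display_list: List[Tuple[str, List[int]]]  # instruction name plus integer operands
    children: List[str] = field(default_factory=list)


def _expect(row: List[str], keyword: str) -> List[str]:
    """Check the leading keyword of a line and return the remaining fields."""
    if not row or row[0] != keyword:
        raise ValueError(f"expected {keyword!r}, got {row!r}")
    return row[1:]


def parse_widget(text: str) -> WidgetRecord:
    lines = [ln for ln in text.splitlines() if ln.strip()]
    rows = [ln.split() for ln in lines]
    (widget_class,) = _expect(rows[0], "class")
    x, y, w, h = (int(v) for v in _expect(rows[1], "geometry"))
    binary_length = int(_expect(rows[2], "displaylist")[0])
    display_list = []
    i = 3
    while i < len(lines) and lines[i][0].isspace():  # indented lines are instructions
        display_list.append((rows[i][0], [int(v) for v in rows[i][1:]]))
        i += 1
    children = _expect(rows[i], "children") if i < len(lines) else []
    return WidgetRecord(widget_class, (x, y, w, h), binary_length, display_list, children)


SAMPLE = """
class Command
geometry 10 20 80 30
displaylist 24
    rect 0 0 80 30
    line 0 30 80 30
children lamp label
"""

record = parse_widget(SAMPLE)
```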

OK, now I'm going to talk a little about some extensions I'd like to see put in that would help add realism. The first is what I call an indirect visual response. In this type of widget, the widget you're actually pressing doesn't change its graphical representation much. It may show highlighted and unhighlighted states, but set and unset wouldn't be shown on it.

Instead, it would pass that off to another widget, which actually changes its state graphically. This is similar to when you have a receiver or something, you hit the power button, and the little red light goes on. It just provides a mechanism for more modeling capability.
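The indirect visual response boils down to a button that only shows transient highlighting while its set/unset state is rendered by a separate widget. A minimal Python sketch with hypothetical class names, modeled on the power-button-and-red-light example:

```python
class Lamp:
    """Indicator widget that shows the set/unset state graphically."""

    def __init__(self) -> None:
        self.lit = False

    def set_state(self, on: bool) -> None:
        self.lit = on


class IndirectButton:
    """Button that only highlights itself; its logical state is shown on another widget."""

    def __init__(self, indicator: Lamp) -> None:
        self.indicator = indicator
        self.highlighted = False
        self.on = False

    def press(self) -> None:
        self.highlighted = True  # brief feedback on the button itself

    def release(self) -> None:
        self.highlighted = False
        self.on = not self.on
        self.indicator.set_state(self.on)  # the visible set/unset change happens here


power_light = Lamp()
power = IndirectButton(power_light)
power.press()
power.release()
```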

Also, here you can see that we added this extra rectangle and a few vectors. These are the sorts of embellishments I'm talking about, just to give it more of a real feel.

I was debating whether to show this one. This is basically a composite command-class widget, where a subwidget does essentially the same thing as the indirect visual response. The reason I hesitated to show it is that I can actually do this with multiple display lists. But there could be cases where, instead of something simple like this, you'd want a numeric or some other kind of widget on top of it. So in general, I believe it would be important to loosen up the structure of some of these lower-level widgets a bit, so that they could handle at least one child widget, possibly with a very simple obedient geometry manager.

OK, there are a few other considerations. For things like Laboratory Workbench, it's important that there's a mechanism for the application to send events to the widgets very efficiently. It would also be nice for input widgets to send data not only to the application but also to other widgets, and to do this transparently to the application, so that these sorts of capabilities can be modeled within the Widget Editor.

And finally, standard definitions should be provided, so that if you have two different types of two-dimensional graph that represent the data in different formats, it's easy to swap one widget for the other without going back into the application to change the event structure or other structures.

Yet another extension of the generic widget mechanism would be one where the semantics of the widget stay the same but the display routine used may be quite different. You might have a display routine that uses the display list, one that uses pixmaps or a little film animation, or one that uses a hierarchical 3D representation. It would be nice if, again, the application didn't have to understand these things, and there were an easy way to swap them if the user or the application's user interface developer desired.

There are a few areas of further work. Currently, graphics contexts, which weren't an issue in X10, create some problems, so initially there will just be a minimal implementation of them. The one place I use pixmaps is for insensitive widgets, where I set up the gray pixmap and then run through it. Otherwise, I limit myself to the foreground and background colors, although border widths and some of those attributes would be quite easy to implement.

And there's no support right now for the pixmap instructions.

As for the indirect visual response, if I have the chance and the time, I'd like to get that implemented. It would certainly be useful to have.

Output widgets also need the capability to provide real-time output. Those output widgets would include logs, histograms, pie charts, and so on.

So in summary, the Widget Editor makes it much easier to create the user interface. The display list gives the particular widgets much more graphical freedom. The display list and the storage capabilities make it easy to share user interfaces across applications. And keeping the user interface separate from the application gives the end user much greater control over it.

One other area I should mention, which came up in the toolkit talk, is color maps. That's one area where work needs to be done. Right now I just use the standard color maps the system has. But for instance, with the backlighted widgets I showed, it would be nice if the color map had some structure, so that you would know which colors to use for tint lighting and similar visual effects.

Do I still have time? Two minutes. OK.

There are a few areas I'll just go over briefly. One is that, similar to the constraint widget, instead of uniform scaling you could scale the information inside the widget using anchors or a similar notion, where you tie particular graphical attributes of one part of the widget to another part, so that you can scale things inside the widget non-uniformly.

An example of this would be if you had a picture frame around a widget, and then you wanted to resize that widget, you may not want the picture frame to actually resize, but just to have the lines on the sides and the bottom to stretch out.
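The picture-frame example corresponds to what is often called nine-slice scaling: corners are anchored and keep a fixed size while the edge pieces stretch. The sketch below computes the frame pieces for a given widget size; the function name, the piece names, and the fixed border width are assumptions, and it covers only this one anchoring rule, not a general constraint system.

```python
from typing import Dict, Tuple

Rect = Tuple[int, int, int, int]


def frame_pieces(width: int, height: int, border: int) -> Dict[str, Rect]:
    """Split a picture frame into (x, y, w, h) pieces for a widget of the given size.

    Corners are anchored to their widget corners and keep a fixed size;
    the edges stretch to fill the space between the corners.
    """
    inner_w, inner_h = width - 2 * border, height - 2 * border
    if inner_w < 0 or inner_h < 0:
        raise ValueError("widget is smaller than its frame")
    right, bottom = width - border, height - border
    return {
        "top_left": (0, 0, border, border),
        "top_right": (right, 0, border, border),
        "bottom_left": (0, bottom, border, border),
        "bottom_right": (right, bottom, border, border),
        "top": (border, 0, inner_w, border),
        "bottom": (border, bottom, inner_w, border),
        "left": (0, border, border, inner_h),
        "right": (right, border, border, inner_h),
    }


small = frame_pieces(100, 60, 8)
large = frame_pieces(300, 60, 8)
```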

And that's basically it. Are there any questions?

[APPLAUSE]

OK.

AUDIENCE: Well, the widgets you've created in the Widget Editor, how will they be made available to the X community?

CARLING: Yeah, I'm actually going to try to provide a fair amount of this as public domain software. The big problem I have is--

[APPLAUSE]

One problem is by doing this talk, I ended up spending three weeks trying to get the slides together. So I was hoping to have it all on the next release of the tape. And I'll try to have some of it on that release. But I do expect to provide a simple Widget Editor and provide these sort of capabilities, so that others can use them.

Actually, we're doing this partly because we're a small company. If we built our own toolkit that goes in one direction while the standard goes in another, that just makes things more difficult for us. So we'd like to see the standard adopt some of the capabilities we use, which will hopefully help everybody.

Any other questions? OK. OK, go ahead.

AUDIENCE: What are the performance costs of using pickled widgets instead of hard-coding?

CARLING: Say that again?

AUDIENCE: How much does it cost you to use pickled widgets instead of just hard-coding?

CARLING: Oh, OK. Well, one thing is, by using-- well, first, back in the old days when we first implemented it, we used just ASCII widgets. And then it would take 10 seconds or 15 seconds for it to load. And we're talking about 100 or 200 widgets. Well, that was no good.

Then we went to binary widgets, and we were down to four or maybe three seconds. That was still no good.

So then we went to this single archive file, where all the widgets are in one file. Actually, I shouldn't say all the widgets: only the widgets that are really used initially go in the archive. Things like help buttons or documentation boxes can be demand-loaded, since the binary representation can be loaded on demand. So not all widgets have to be in the archive file.

With that, it takes, I would say, under half a second to load about 100 widgets. But that's with our old system. One thing I'm concerned about is what the performance characteristics will be under the X Toolkit, and I haven't yet had any very large examples to really see what that's going to do.

AUDIENCE: But once you have it loaded, what does it cost? Is it any slower than just directly calling the widgets?

CARLING: I don't think so at all. Basically, the only real difference is traversing the display list, and that's very efficient; the values are right there. So I actually see no real performance degradation there. Yes?

AUDIENCE: My questions are also about performance. It sounds like you've already answered my first question: you're saying that the fact that your expose processing is now an interpretive process doesn't cause a major performance loss.

CARLING: No. In the current implementation, I use jump tables, so the index immediately jumps to the required functions.

AUDIENCE: OK.

CARLING: So it's a very quick reference.

AUDIENCE: The second is that it looks like a display list approach inherently says that you only do whole widget redisplays, not rectangle redisplays or partial exposures of any kind.

CARLING: I missed that again.

AUDIENCE: You can't deal with partial exposures with a display list probably, right?

CARLING: Right.

AUDIENCE: OK.

CARLING: Any other questions? OK. Thank you for listening.

[APPLAUSE]

MODERATOR: OK. Back at 2 o'clock sharp. Look at the back of your map for lunch places. There are a lot of you, so you may want to try to stagger when you eat; not all of those places are big enough to hold you all.

[AUDIENCE CHATTER]

AUDIENCE: I have 20 copies of each paper. That's all I have. I have 20 copies of each. [INAUDIBLE]

PRESENTER: This is the low-tech version of some of the stuff we had this morning. This is a good deal less sophisticated than the neat things we saw, but it has some advantages I think we'll see.

SWIFT isn't a bird in this case; it's the SPSS Window Facility Toolkit. Our group at TRW is called the System Productivity Program, soon to change its name, and we have a whole collection of tools, which we call the Software Productivity System, that includes editors, form fill tools, and things like that.

This past year, since we were starting to migrate to Sun workstations and other workstations, we decided it would be nice to get our applications running more or less in window environments rather than on vanilla terminals under curses, which is how they were originally written.

Because of timing and the way X11 was distributed, we had to use the old toolkit and run it under X10.4. Obviously, the next step is to go to X11.

The idea of the SWIFT toolkit was to give applications programmers a specific user interface. The toolkit provides its own idea of what a dialog box looks like, and so on, on the theory that if you turn everybody into an amateur widget maker, you end up with amateurish widgets.

So all the pop-up user interface elements are preprogrammed. As a result, we have what we hope is a very simple user interface that hides X as much as possible. This enables our applications programmers to recast these curses applications in terms of a window system. On the minus side, you obviously get a very stereotyped set of applications out of this kit.

The idea is basically to try to give a sort of, kind of, hemi, semi, demi, Mac-like sort of user interface. And the whole idea was inspired by the SimpleTools Macintosh library that [? Erik ?] produced.

Once again, this was just the old X11 toolkit, and we tried not to do much of anything, because we were in a hurry. So we only used the existing widgets. The editable text widget source code, not source code, but source code, from XMH and [INAUDIBLE] got incorporated into it. The cascaded menu widget from HP that was in the old toolkit was incorporated into it. And alert boxes and dialog boxes were basically just form widgets.

We've seen a lot of hello worlds. It's kind of like Name That Tune: I can hello that world in only five lines of code. That's a slightly contrived example, because the main event loop is concealed in the alert call there that pops up the hello world alert box. We'll see a more reasonable, somewhat longer example later on.

Because it's built on the old toolkit widgets, virtually everything you see in the user interface right now is what you get from those widgets. In the next version, we will produce our own widgets that come closer to exactly what we want as standard across our applications. So you have text panes and message panes, which are text widgets in both cases. The program interface to message panes is a printf-type call. Then there are form panes, graphic panes, button box panes, and pop-up items.

Menus: each pane can have its own menu that pops up when you hit the right mouse button. Cascaded menus, of course. And you can turn menu items on or off while the program is running, so the menus are dynamic in that sense.

More stuff: pop-up boxes; file selection boxes a la Macintosh, which are kind of fun; alert boxes, which display short messages and let the user acknowledge or negatively acknowledge; and dialog boxes, which are straight out of the old toolkit dialog box widget.

Here's a sample of the sort of thing we get. Kind of recursive, because that's my travel plan. One of our SPP tools was a calendar tool. The form fill-in program that the calendar tool uses was redone as an X application using the SWIFT library, and this is the sort of thing it produced. This was originally a curses-based application, and a whole lot of control stuff went away. You didn't have to hit very strange command keys to move from one field to another. You just had to move a mouse.

SWIFT was debugged using a very simple MacPaint preview program. So here's a pop-up menu example over there. You've all seen the menu widget by now, I hope, so there's nothing surprising there. If you click on Quit, then I guess, in this case, you would X terminate.

[GROANING]

Just wanted to see if you were paying attention. Here's an alert box in case you ask for a file that you can't open, and it requires an acknowledgment before it goes away.

Here's a dialog box. This is our Ada interface to X.

[LAUGHTER]

And once again, it gives you a space to type something in, and print or cancel it.

And the file selection box, which is actually kind of fun. It works pretty much like the Macintosh file selection box. It lists the directories that are in your current directory and shows the name of the directory you're in. You can click on one of the entries in the directory. That's a scrolled window, by the way, so if there are more things in the directory, you can scroll up or down. When you click, the file name goes into the box down there. Then you can click the Read File button, change your mind entirely, or simply type into the box.

Also, as we'll see later, there are hooks in there so that the application programmer can easily choose which file names get displayed, similar to the way Macintosh applications choose what goes into a file selection window.

So I'm going to go very quickly through the routines in the library. You'll see that they're all pretty simple and fairly self-explanatory, and we don't need the details here anyway. There's nothing terribly surprising worth mentioning. There is a default generic tool icon, or the application can set its own icon from a bitmap file.

Each tool is divided into one or more panes using, obviously, the pane widget. These are all vertically paned at present. So if you want to divide a tool into panes, you call add_pane and say what kind of pane it is: text, form, graphic (which is just a generic X window), or button box, but not another paned window.

There are three kinds of panes that require additional information to initialize them. A text pane requires some mode information and the source of the text. Message panes can pass input typed by the user back to an application function, so that function is passed in with the message pane call.

And the graph pane is what in technical terms is called a hack; it's just there to let the application use a generic X window. When an expose event or something like that is sent to that window, the application is expected to know how to redraw it, and supplies a routine to do it.
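As a rough illustration, here is a minimal C sketch of how a toolkit like SWIFT might record panes by name and hand an Expose event for a graph pane to an application-supplied redraw routine. The `add_pane` signature, the `PaneKind` values, `handle_expose`, and the example callback `count_redraws` are all hypothetical; the real SWIFT calls weren't shown in detail, and no X calls are made here.

```c
#include <string.h>

/* Hypothetical model of a SWIFT-style tool: the pane kinds named in the
   talk, plus an application-supplied redraw routine for graph panes. */
typedef enum { PANE_TEXT, PANE_MESSAGE, PANE_FORM, PANE_GRAPH, PANE_BUTTONBOX } PaneKind;

typedef void (*RedrawProc)(const char *pane_name, void *client_data);

typedef struct {
    const char *name;        /* panes are found by application-chosen strings */
    PaneKind kind;
    RedrawProc redraw;       /* only meaningful for PANE_GRAPH */
    void *client_data;
} Pane;

#define MAX_PANES 8
static Pane panes[MAX_PANES];
static int npanes = 0;

/* Hypothetical add_pane: returns 0 on success, -1 if the tool is full. */
int add_pane(const char *name, PaneKind kind, RedrawProc redraw, void *client_data) {
    if (npanes == MAX_PANES) return -1;
    panes[npanes] = (Pane){ name, kind, redraw, client_data };
    npanes++;
    return 0;
}

/* Dispatch an Expose event for the named pane. The toolkit can't know what
   a raw graph window should contain, so it calls the application's routine.
   Returns 1 if a redraw routine ran, 0 if the pane repaints itself, and -1
   if there is no such pane. */
int handle_expose(const char *name) {
    for (int i = 0; i < npanes; i++) {
        if (strcmp(panes[i].name, name) != 0) continue;
        if (panes[i].kind == PANE_GRAPH && panes[i].redraw != NULL) {
            panes[i].redraw(panes[i].name, panes[i].client_data);
            return 1;
        }
        return 0;
    }
    return -1;
}

/* Example application redraw routine: just counts repaint requests. */
static void count_redraws(const char *pane_name, void *client_data) {
    (void)pane_name;
    ++*(int *)client_data;
}
```

The design point is that only the graph pane needs a callback; every other pane kind is a stock widget that already knows how to repaint itself.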

Because one of our big applications is a fill-in-the-blanks-type application, form panes became very important to us. They contain two kinds of things: text fields and labels. Text fields are, unfortunately, rather complicated things, and this is about the longest call in the library. First you supply the name of the pane they belong to.

The namespace in which all these things live, by the way, consists of character strings supplied by the application at the beginning. So you supply the name of the pane and the field, whether the user can type into it or just read it, what its source is, a minor visual aspect (whether it's highlighted or not), and how it scrolls. When the text in a form field gets longer, the field itself might want to grow, or it might want to scroll, or it might want to stay the same size and not allow more than one line or so. That's handled in there, too.

And finally, you need its location, which is specified relative to some other widget in that form, either a text field or a label. Label fields are much simpler. All you supply is a string, whether it's highlighted, where it is, and what its name is.
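A minimal sketch, under assumed names, of two ideas in the form-field call: placing an item relative to another item, and the grow, scroll, or stay-fixed choice when text outgrows a field. `FormItem`, `place_relative`, `field_width`, and `field_accepts` are hypothetical, and the fixed character width in pixels is an assumption rather than anything SWIFT specified.

```c
/* Hypothetical form-pane model: every label or text field is placed
   relative to an item that already exists in the form. */
typedef enum { BELOW, RIGHT_OF } Relation;

/* What a text field does when its contents outgrow it. */
typedef enum { GROW, SCROLL, FIXED } OverflowPolicy;

typedef struct {
    const char *name;            /* application-chosen string name */
    int x, y, width, height;     /* pixels */
} FormItem;

/* Set item's origin relative to ref, leaving gap pixels between them. */
void place_relative(const FormItem *ref, Relation rel, int gap, FormItem *item) {
    if (rel == BELOW) {
        item->x = ref->x;
        item->y = ref->y + ref->height + gap;
    } else {                     /* RIGHT_OF */
        item->x = ref->x + ref->width + gap;
        item->y = ref->y;
    }
}

/* Width of a one-line field holding len characters, assuming a fixed
   character width of char_px pixels: only GROW fields widen. */
int field_width(OverflowPolicy p, int width, int len, int char_px) {
    int needed = len * char_px;
    return (p == GROW && needed > width) ? needed : width;
}

/* A FIXED field refuses input that no longer fits; GROW and SCROLL accept it. */
int field_accepts(OverflowPolicy p, int width, int len, int char_px) {
    return p != FIXED || len * char_px <= width;
}
```

Relative placement means the application never has to compute absolute coordinates for a whole form; moving one anchor item moves everything placed after it.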

I'm not a big fan of button boxes. I think they get overused. So there can only be one button box per tool, and that's real easy to change if somebody shows that I'm an idiot. The one thing that restriction does is remove one argument from the button box call. So all you do is supply the label for the button, the callback routine associated with it, and what gets passed to the callback routine.
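The three arguments described above map onto the usual C callback-plus-client-data pattern. This is a hypothetical sketch of a single per-tool button box (`add_button`, `press_button`, and the example callback `bump` are illustrative names, not SWIFT's); because there is only one box, no box argument is needed, and `press_button` stands in for the toolkit dispatching a click.

```c
#include <string.h>

/* Hypothetical single button box: each button has a label, a callback,
   and a client-data pointer handed back to that callback. */
typedef void (*ButtonProc)(void *client_data);

typedef struct {
    const char *label;
    ButtonProc proc;
    void *client_data;
} Button;

#define MAX_BUTTONS 16
static Button button_box[MAX_BUTTONS];   /* one box per tool: no box argument */
static int nbuttons = 0;

/* Returns the new button's index, or -1 if the box is full. */
int add_button(const char *label, ButtonProc proc, void *client_data) {
    if (nbuttons == MAX_BUTTONS) return -1;
    button_box[nbuttons] = (Button){ label, proc, client_data };
    return nbuttons++;
}

/* Simulate a click: run the callback of the button with this label.
   Returns 1 if a button was found, 0 otherwise. */
int press_button(const char *label) {
    for (int i = 0; i < nbuttons; i++) {
        if (strcmp(button_box[i].label, label) == 0) {
            button_box[i].proc(button_box[i].client_data);
            return 1;
        }
    }
    return 0;
}

/* Example callback: the client data is an int counter to increment. */
static void bump(void *client_data) { ++*(int *)client_data; }
```

The client-data pointer is what lets one callback, such as `bump`, serve several buttons, each acting on its own data.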

As for XMH: if you've seen the XMH interface, it has no fewer than three panels of buttons on the screen. Those could, I think, largely be eliminated by pop-up menus and file selection boxes.

Once the event loop starts, there are three operations that can be performed. If the application has changed a string associated with a text widget, the text widget has to be told that the string has changed; that's what text_update does. A text widget associated with a file might also need to be saved back to that file at some point, and the last two routines do that.

Message windows are very simple. You can do a printf to them. And I took the dumbest possible approach to dealing with printf and variable arguments and stuff like that. You get four of them. The idea is to keep it simple, right?
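The talk doesn't show how the message-pane printf is declared. As a swapped-in alternative to a fixed argument count (not SWIFT's method), here is a sketch that forwards a genuine variable argument list with `<stdarg.h>` and `vsnprintf`, appending to a buffer that stands in for the message pane. `msg_printf`, `message_pane`, and `PANE_SIZE` are hypothetical names.

```c
#include <stdarg.h>
#include <stdio.h>
#include <string.h>

/* Stand-in for a message pane: a fixed buffer of accumulated text. A real
   pane would hand the text to a text widget and scroll it. */
#define PANE_SIZE 256
static char message_pane[PANE_SIZE];

/* Hypothetical printf-style append. Uses <stdarg.h> so any number of
   arguments works; text that doesn't fit is truncated. Returns the number
   of characters actually appended. */
int msg_printf(const char *fmt, ...) {
    size_t used = strlen(message_pane);
    size_t room = PANE_SIZE - used;          /* includes space for the '\0' */
    if (room <= 1) return 0;

    va_list ap;
    va_start(ap, fmt);
    int n = vsnprintf(message_pane + used, room, fmt, ap);
    va_end(ap);

    if (n < 0) {                             /* encoding error: undo */
        message_pane[used] = '\0';
        return 0;
    }
    return (size_t)n < room ? n : (int)(room - 1);
}
```

`vsnprintf` does the formatting and bounds the write, so the wrapper only has to track how much of the pane is already used.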

Text fields in a form support pretty much the same operations as text panes or tool windows. Unfortunately, because they live in a different namespace, they have their own set of calls.

Specifying menus is done with a very simple call. You give the name of the menu. For each item in the menu, you give its name, the string that is going to appear in the label, what type of menu item it is, and what happens when you touch it.

Label items don't do much of anything, except that they appear in reverse video; those are usually the first item in the menu. Button items are just command buttons. Cascade items come from HP: if you move to the right-hand end of them, they pop up another menu, which is named by the last argument. And deact items don't appear at all, and that's how you can turn menu items on and off.

The menu call can be made at any time during the run. It doesn't have to happen before you start the event loop.
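A hypothetical data-structure sketch of the menu call described above: each item carries an internal name, a label, one of the four item types, and either a command or the name of a cascaded submenu. Disabling an item by switching it to the deact type mirrors how the talk describes turning items off at run time. `MenuItem`, `visible_items`, and `set_item` are illustrative names, not SWIFT's API.

```c
#include <stddef.h>
#include <string.h>

/* The four item types described in the talk. */
typedef enum { ITEM_LABEL, ITEM_BUTTON, ITEM_CASCADE, ITEM_DEACT } ItemType;

typedef struct {
    const char *name;       /* internal name used by the application */
    const char *label;      /* string shown in the menu */
    ItemType type;
    void (*proc)(void);     /* ITEM_BUTTON: command to run */
    const char *submenu;    /* ITEM_CASCADE: name of the menu to pop up */
} MenuItem;

/* Count the items that would actually appear; deact items are hidden. */
int visible_items(const MenuItem *items, int n) {
    int count = 0;
    for (int i = 0; i < n; i++)
        if (items[i].type != ITEM_DEACT) count++;
    return count;
}

/* Turn an item off or back on at run time by changing its type; on_type is
   what the item becomes when re-enabled. Returns 0 if no item has that name. */
int set_item(MenuItem *items, int n, const char *name, int enabled, ItemType on_type) {
    for (int i = 0; i < n; i++) {
        if (strcmp(items[i].name, name) == 0) {
            items[i].type = enabled ? on_type : ITEM_DEACT;
            return 1;
        }
    }
    return 0;
}
```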

So here's a real simple menu. No surprises here. And here's what it looks like.

The file selection boxes are popped up by a function called getfilename. There are four arguments. One is the prompting message. Another is the default filename that appears in the type-in box unless you change it.

Predicate is the name of a function written by the application, which takes a filename as an argument and returns true if that filename should be displayed. It can be arbitrarily simple or complicated. It might just check whether the name ends with .c, or it might actually open the file and check for an appropriate magic number or something like that. And that's what [INAUDIBLE] will appear in that scrolled menu.

And at the bottom we see an example of how it might be done. It returns NULL if the Cancel button is hit. Otherwise, it returns the name that was typed.
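A predicate of this kind is easy to picture in C. This minimal sketch assumes the predicate takes a file name and returns nonzero to show it; `FilePredicate`, `is_c_source`, and `filter_names` are hypothetical names, and the directory listing is replaced by an array of names so the example doesn't touch the file system.

```c
#include <string.h>

/* Assumed predicate shape: nonzero means "show this name in the box". */
typedef int (*FilePredicate)(const char *filename);

/* A simple predicate: accept only C source files. */
int is_c_source(const char *filename) {
    size_t len = strlen(filename);
    return len > 2 && strcmp(filename + len - 2, ".c") == 0;
}

/* Keep only the names the predicate accepts, compacting them to the front
   of names; returns how many remain. A NULL predicate keeps everything.
   A real box would read the names from the directory (e.g. opendir/readdir). */
int filter_names(const char **names, int n, FilePredicate keep) {
    int kept = 0;
    for (int i = 0; i < n; i++)
        if (keep == NULL || keep(names[i]))
            names[kept++] = names[i];
    return kept;
}
```

A predicate that checks a magic number would have the same signature; it would just open the file instead of looking at the name.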

Alert boxes just pop up a message and let the user acknowledge or negatively acknowledge it. There are four different types, which look slightly different on the screen. The first kind is distinguished by having only a single button, so you can't negatively acknowledge it.

Dialog boxes are produced by a function called prompt, which prompts the user with a message, a default, and a list of buttons. The list of buttons is a single string containing the buttons separated by colons. When the function comes back, it returns what the user typed into the dialog box, a colon, and which button he hit. Two other functions, dialog field and dialog button, pick that result apart, so you can check both which button he hit and what he typed.
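A sketch of how the colon-joined result of prompt might be picked apart. The helper names `dialog_field`, `dialog_button`, and `count_buttons` are assumptions modelled on the spoken names. So is the rule of splitting at the last colon, which lets the typed text contain colons as long as button labels don't.

```c
#include <string.h>

/* Assumed result format: "<typed text>:<button label>". */

/* Copy the typed text into out (size outsz); returns 0 if result is malformed. */
int dialog_field(const char *result, char *out, size_t outsz) {
    const char *sep = strrchr(result, ':');
    if (sep == NULL || outsz == 0) return 0;
    size_t len = (size_t)(sep - result);
    if (len >= outsz) len = outsz - 1;       /* truncate to fit */
    memcpy(out, result, len);
    out[len] = '\0';
    return 1;
}

/* Return a pointer to the button label inside result, or NULL if malformed. */
const char *dialog_button(const char *result) {
    const char *sep = strrchr(result, ':');
    return sep ? sep + 1 : NULL;
}

/* Count the buttons in a colon-separated list such as "Print:Cancel". */
int count_buttons(const char *buttons) {
    if (*buttons == '\0') return 0;
    int n = 1;
    for (const char *p = buttons; *p; p++)
        if (*p == ':') n++;
    return n;
}
```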

So here's hello world in a slightly more reasonable form. It has two functions invoked by menu items, one for saying hello and one for getting out of the program. The main window is a message-type window, which is the second-to-last argument of tool setup. Whenever the hi button is hit, another printf adds hello to that message window, which, of course, will scroll. And when the Quit button is hit, an alert box pops up to make sure he really wanted to do that.

OK, as I said, SWIFT was debugged with the MacPaint previewer you saw before. It started off as an X10.4 application with 150 lines of code: no toolkit calls, no nothing, 150 lines of code plus the MacPaint stuff itself, and it didn't do much of anything. When it was all done, it had 135 lines of code, and it let you ask for new files, read them in, and all sorts of swell stuff.

That was encouraging. So we took our X-naive application programmer, who knew all about our fill-in library, and had him rework it. He finished in two weeks, which was very encouraging. He didn't have to learn virtually anything about X, which was a good thing, because the X manuals are kind of cumbersome, and the X toolkit is largely documented in C.

[LAUGHTER]

The biggest problems he had came from the fact that, since it was a curses application, he couldn't do character-based addressing the way he would have liked. He kind of had to do dead reckoning on pixels. Also, the text widget sometimes didn't behave as he expected.

Obviously, the next thing for us to do is to do it over again in X11. The idea is that when it's redone in X11, our application programmers never notice. We hope to have hidden X well enough that, as far as the application programmer's interface goes, it doesn't matter which X or which toolkit it's running on.

Also, most of the pop-up items really want to be their own widgets rather than being hacked on top of the existing ones.

Of course, we'd like additional things, too. I'd like to talk to the gentleman from CMU who had the pixel-based buttons. Those would be nice for us.

Real graphics applications, obviously, are completely unsupported by this; you have to resort to raw X. Some assortment of at least simple operations that can be done in graphic windows, kept track of by the toolkit, and redrawn by the toolkit would be really useful.

And then, if we've really hidden X well enough, it shouldn't matter whether X is under it, or SunView or NeWS is under it, or it's running on a Macintosh. So we ought to at least look into making our applications very, very portable using this toolkit. We hope we can; we're trying to keep it as simple as possible for just that reason.

One other thing that we really need, and I guess I should have mentioned it earlier, is pop-up windows that give you all the switches and knob-type settings. We don't have any facilities for that in the current toolkit, and we're definitely going to need them. And that's all I have to say.

[APPLAUSE]

AUDIENCE: How long did it take you to do the first implementation under X, what is it, 10.4?

PRESENTER: We started at the beginning of September, and it was finished enough for the guy to start working on the application around the middle of November.

BLEWETT: Let's see, my talk is on SWIFT, the SPS-- oh, no, no, never mind.

[LAUGHTER]

MODERATOR: I'm trying to get it up there high, because they tend to-- just clip it on the belt.

My name is Bob [? Schaffler. ?] Oh, no, that's more delusions of grandeur, I guess. Or not, maybe, huh?

I'm going to tell you about CounterPoint, which is a little prototype system that a couple of us have done at AT&T Bell Laboratories. Here's the list of characters. I'm Doug Blewett. Marcel Meth and Mike Wish also work at AT&T Bell Labs. Dat Nguyen is an MIT co-op student who helped us with the Reasoning Utility Package part of it. So that's the talk.

So this is kind of a disclaimer slide I've got here. AT&T will have an X-based product out in the summer, on what we call our 6386 box. So it's a 386 box.

And we have a whole bunch of people here from AT&T. If you actually want to talk to the developers who are doing that, I'm sure they wouldn't be too hard to scare up, and I'd be glad to connect you with any of them if anybody's interested.

Well, part of the reason I bring that up is that I want to make clear that CounterPoint is only a research tool. There's no notion of selling this thing. We just did this for fun. It's not my fault, you know, all those things. Don't tell my mother. I'll say that a number of other times. It's just a small project that a couple of us have hacked together.

Now, one thing I'm kind of interested in: how many people have actually used the X toolkit, Xtk? Raise your hand. Huh, not bad.

Now, how many people actually like it-- keep your hand up-- of those who-- so if you've got two hands up, you've liked it and used it. Do we have anybody with just one hand up? Strangle those people. Who are you?

I really like the toolkit. Did these guys have their hands up down here, these guys from DEC? Oh, yeah. Ah, they're getting paid for it, you know? You can't trust them.

So here's a list of motivations. A motivation slide. And so we have motivation for us and for you.

This is what the system actually looks like. I don't know why I feel obliged to use that.

Now I'm going to show you a little videotape, kind of a funny videotape that I hacked together one morning about-- actually, I think I started at 3:30, and I finished at 5:30. So it's mainly incoherent, but you'll like it, I think.

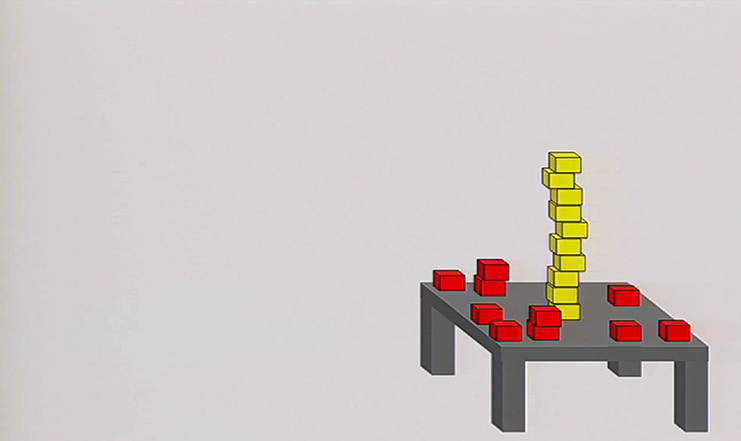

So we have three major motivations, and I think there are three things you could possibly get out of this talk. One is that at AT&T Bell Laboratories, and in research especially, there's one thing we like, and that's small is beautiful. So we want to continue this notion of small communicating processes, and everything in CounterPoint is a process. This is the display that I'm covering up here. Each of these things is a separate process, a real-life Unix process, and these are just regular old widget demo programs. Some of them we've stolen from those guys at DEC, and others we've written entirely from scratch ourselves.

This piece is the Reasoning Utility Package, which follows that small process model that Thompson and Ritchie are always pushing on us. So it's about an 8-megabyte process.

[LAUGHTER]

And we actually have schemes for turning each of those into an 8-megabyte process. But that's another story, I guess. Then we have an application here that communicates with those widgets through the Reasoning Utility Package. All of these things are hooked together with IPC, and we have some interest in interprocess communication.

The demo you'll see today uses Internet datagrams. We use those because they don't soak up a file descriptor per connection: one datagram socket can exchange messages with any number of peers. One of the problems you have here is that you run out of file descriptors. So that's extending the Unix philosophy of small communicating processes.
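To make the file-descriptor point concrete, here is a small POSIX sketch (not CounterPoint's code): one UDP socket bound to the loopback interface receives datagrams from several independent senders, with no per-connection descriptor on the receiving side. In CounterPoint each sender would be a separate process; here they are opened in turn within one process, and `open_udp` and `gather_from_senders` are hypothetical names.

```c
#define _POSIX_C_SOURCE 200112L
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stdio.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Open a UDP socket bound to an ephemeral port on 127.0.0.1; on success,
   return the descriptor and store the kernel-chosen address in *addr. */
static int open_udp(struct sockaddr_in *addr) {
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0) return -1;
    memset(addr, 0, sizeof *addr);
    addr->sin_family = AF_INET;
    addr->sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr->sin_port = 0;                       /* 0: let the kernel pick */
    socklen_t len = sizeof *addr;
    if (bind(fd, (struct sockaddr *)addr, sizeof *addr) < 0 ||
        getsockname(fd, (struct sockaddr *)addr, &len) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

/* One hub descriptor collects a datagram from each of nsenders senders.
   The last message received is copied into last. Returns the number of
   datagrams received, or -1 on setup failure. */
int gather_from_senders(int nsenders, char *last, size_t lastsz) {
    struct sockaddr_in hub, peer;
    if (lastsz == 0) return -1;
    int hubfd = open_udp(&hub);
    if (hubfd < 0) return -1;
    int received = 0;
    for (int i = 0; i < nsenders; i++) {
        int fd = open_udp(&peer);             /* stands in for one widget process */
        if (fd < 0) break;
        char msg[32];
        int len = snprintf(msg, sizeof msg, "widget %d", i);
        ssize_t sent = sendto(fd, msg, (size_t)len, 0,
                              (struct sockaddr *)&hub, sizeof hub);
        close(fd);
        if (sent < 0) break;
        /* The same hub descriptor receives from every sender. */
        ssize_t n = recvfrom(hubfd, last, lastsz - 1, 0, NULL, NULL);
        if (n < 0) break;
        last[n] = '\0';
        received++;
    }
    close(hubfd);
    return received;
}
```

With stream sockets, the receiving side would instead need one accepted descriptor per connected widget process.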

We also do a nice job of blending in truth maintenance techniques; I hope you'll see them in there. And I think we also have some interesting widgets, which are fun toys that I hope you'll like.

The Reasoning Utility Package, called RUP, was written by David McAllester. It's a truth maintenance system, which is one of the schemes for doing knowledge representation.

And truth maintenance, for those who aren't up on that end of the business, tends to be kind of the winning edge for knowledge representation. It's a nice scheme, and it tends to be very fast. You'll see in the demo that the messages we're sending around fly out of the Reasoning Utility Package quite quickly, and it's doing a lot of reasoning for each message it sends out.

On top of the Reasoning Utility Package, we've added a layer that does constraint propagation, similar to that of Abelson, Sussman, and Sussman. So if I have A equals X plus Y and Y is already defined, then when I put in a value for X, A automatically gets generated, that sort of thing.

And we have code attached. One of the nice things about the Reasoning Utility Package is that you can have notifiers, so that whenever a variable is-- or when a particular condition arises, it'll fire off a bit of code for you. We use that quite heavily to send messages around to our widgets. You'll see some of that later.
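RUP's actual notifier interface isn't described here, so this is a hypothetical C sketch of the idea: code attached to a variable runs every time the variable is assigned, which is how a new value could be forwarded to a widget. `Var`, `attach_notifier`, `assign`, `WidgetStub`, and `send_to_widget` are illustrative names, and the widget message is reduced to updating a counter.

```c
#include <stddef.h>

#define MAX_NOTIFIERS 4

typedef struct Var Var;
typedef void (*Notifier)(Var *v, void *client_data);

/* A variable with attached code: each notifier fires on every assignment. */
struct Var {
    const char *name;
    double value;
    int known;
    Notifier notifiers[MAX_NOTIFIERS];
    void *client_data[MAX_NOTIFIERS];
    int nnotifiers;
};

/* Attach fn to v; returns 0 on success, -1 if v has no free slots. */
int attach_notifier(Var *v, Notifier fn, void *client_data) {
    if (v->nnotifiers == MAX_NOTIFIERS) return -1;
    v->notifiers[v->nnotifiers] = fn;
    v->client_data[v->nnotifiers] = client_data;
    v->nnotifiers++;
    return 0;
}

/* Assign a value, then run every attached notifier in attachment order. */
void assign(Var *v, double value) {
    v->value = value;
    v->known = 1;
    for (int i = 0; i < v->nnotifiers; i++)
        v->notifiers[i](v, v->client_data[i]);
}

/* Example notifier: a stand-in for sending the new value to a widget process. */
typedef struct { int sent; double last; } WidgetStub;

static void send_to_widget(Var *v, void *client_data) {
    WidgetStub *w = client_data;
    w->sent++;
    w->last = v->value;
}
```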

We've added the following functions to the Reasoning Utility Package. The notion here is that we set up an assertion that A and B are equal, and if A or B gets assigned a new value, it'll generate the other term. Likewise, we have plus, minus, and times.
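The constraint layer can be sketched in a few dozen lines of C. This is not RUP or the CounterPoint code. It's a hypothetical local-propagation sketch in the style of Abelson and Sussman's constraint networks, where each constraint fills in one unknown cell from the others and propagation repeats until nothing changes. Minus is expressed by rearranging plus (a = b - c is b = a + c), and unlike a truth maintenance system, this sketch does not detect contradictions between already-known values. `Cell`, `Constraint`, and `propagate` are illustrative names.

```c
#include <stdbool.h>
#include <stddef.h>

typedef struct { double value; bool known; } Cell;

typedef enum { EQUAL, PLUS, TIMES } Kind;

/* EQUAL: a == b (c unused).  PLUS: a == b + c.  TIMES: a == b * c. */
typedef struct { Kind kind; Cell *a, *b, *c; } Constraint;

static bool set_cell(Cell *x, double v) {
    x->value = v;
    x->known = true;
    return true;
}

/* Try to fill in one unknown cell of k; returns true if something changed. */
static bool fire(const Constraint *k) {
    Cell *a = k->a, *b = k->b, *c = k->c;
    switch (k->kind) {
    case EQUAL:
        if (a->known && !b->known) return set_cell(b, a->value);
        if (b->known && !a->known) return set_cell(a, b->value);
        break;
    case PLUS:
        if (!a->known && b->known && c->known) return set_cell(a, b->value + c->value);
        if (a->known && !b->known && c->known) return set_cell(b, a->value - c->value);
        if (a->known && b->known && !c->known) return set_cell(c, a->value - b->value);
        break;
    case TIMES:
        if (!a->known && b->known && c->known) return set_cell(a, b->value * c->value);
        if (a->known && !b->known && c->known && c->value != 0)
            return set_cell(b, a->value / c->value);
        if (a->known && b->known && !c->known && b->value != 0)
            return set_cell(c, a->value / b->value);
        break;
    }
    return false;
}

/* Keep firing constraints until no new cell becomes known. Each firing turns
   one unknown cell into a known one, so this always terminates. */
void propagate(const Constraint *ks, int n) {
    bool changed = true;
    while (changed) {
        changed = false;
        for (int i = 0; i < n; i++)
            if (fire(&ks[i])) changed = true;
    }
}
```

For example, with `Y` known, marking `X` known and calling `propagate` fills in `A` for the constraint A = X + Y, and any EQUAL or TIMES constraints that depend on `A` then fire in turn.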